Mastering GLM-5 API Calls: A 5-Minute Getting-Started Guide to the 744B MoE Open-Source Flagship Model

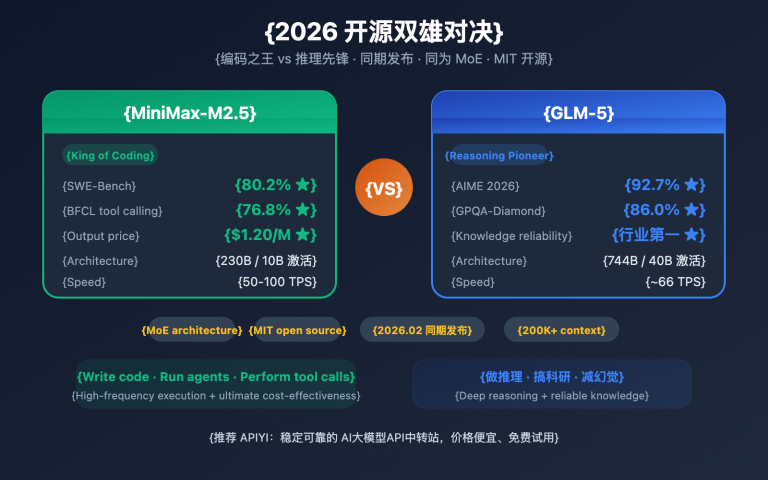

Zhipu AI officially released GLM-5 on February 11, 2026. It is currently one of the largest open-source large language models by parameter count. GLM-5 uses a 744B-parameter MoE (Mixture of Experts) architecture that activates 40B parameters per inference, and it ranks in the top tier of open-source models for reasoning, coding, and Agent tasks. Core Value: By the…