Author's Note: A deep dive into the core upgrades of ByteDance Jimeng's Seedance 2.0 video generation and Seedream 5.0 image generation models, featuring API integration tutorials and real-world comparisons.

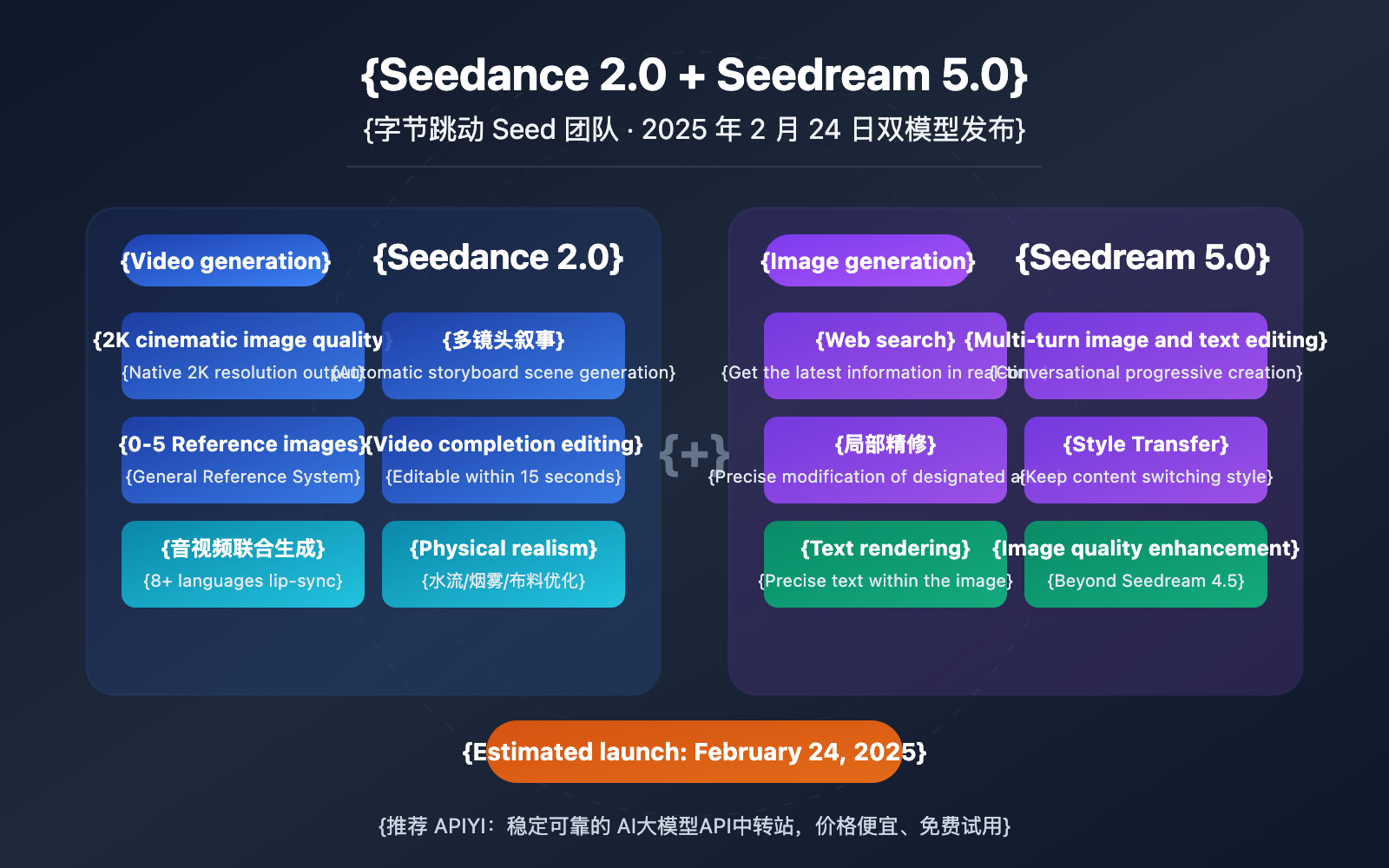

According to reliable sources, ByteDance's Seed team is set to release the Seedance 2.0 video generation model and the Seedream 5.0 image generation model simultaneously on February 24th. This marks the most significant dual-model upgrade for the Jimeng platform to date. Seedance 2.0 brings heavy-hitting features like native 2K resolution, multi-shot storytelling, and multi-reference image input. Meanwhile, Seedream 5.0 introduces web search and multi-turn image-text editing for the first time, signaling that AI image generation has entered the era of interactive creation.

Core Value: Spend 3 minutes to get up to speed on all the upgrade highlights for Seedance 2.0 and Seedream 5.0, learn the ropes of API integration, and be among the first to experience next-gen AI creative tools.

Seedance 2.0 and Seedream 5.0: Core Info at a Glance

| Feature | Seedance 2.0 (Video Generation) | Seedream 5.0 (Image Generation) |
|---|---|---|
| Publisher | ByteDance Seed Team | ByteDance Seed Team |
| Expected Release | February 24, 2025 | February 24, 2025 |
| Core Upgrades | Multi-reference images + Video completion + 2K resolution | Web search + Multi-turn text/image editing |
| Previous Version | Seedance 1.5 Pro | Seedream 4.5 |
| Platforms | Jimeng / Volcengine / Third-party API | Jimeng / Volcengine / Third-party API |

The Industry Impact of the Seedance 2.0 and Seedream 5.0 Dual Release

ByteDance's decision to release video and image generation models at the same time highlights its "Video + Image" dual-engine strategy in the AIGC space. Seedance 2.0 is a major step up from 1.5 Pro: image quality, physical realism, and multimodal input have all been reworked to address real-world creative pain points. Seedream 5.0, meanwhile, is more than an incremental version bump: by adding web search and multi-turn editing, it shifts image generation from a "one-and-done" task to a conversational process where you can refine results back and forth.

For developers and creators, this means access to a complete creative pipeline, from text-to-video to sketch-to-final-cut, through a single unified API. It's a big step toward lowering the barrier to entry for high-quality multimodal content.

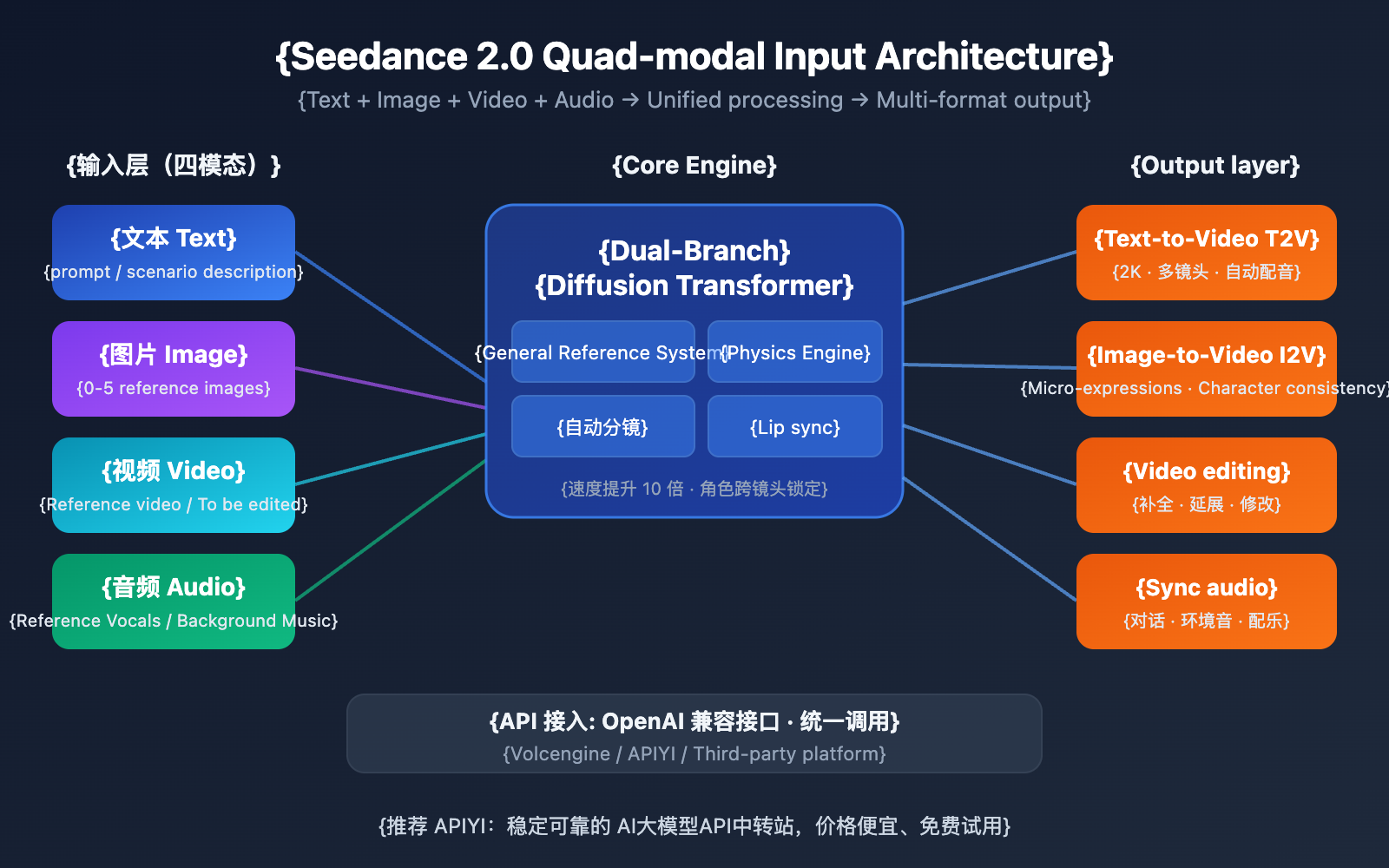

Seedance 2.0: 7 Core Upgrades for Jimeng Video Generation

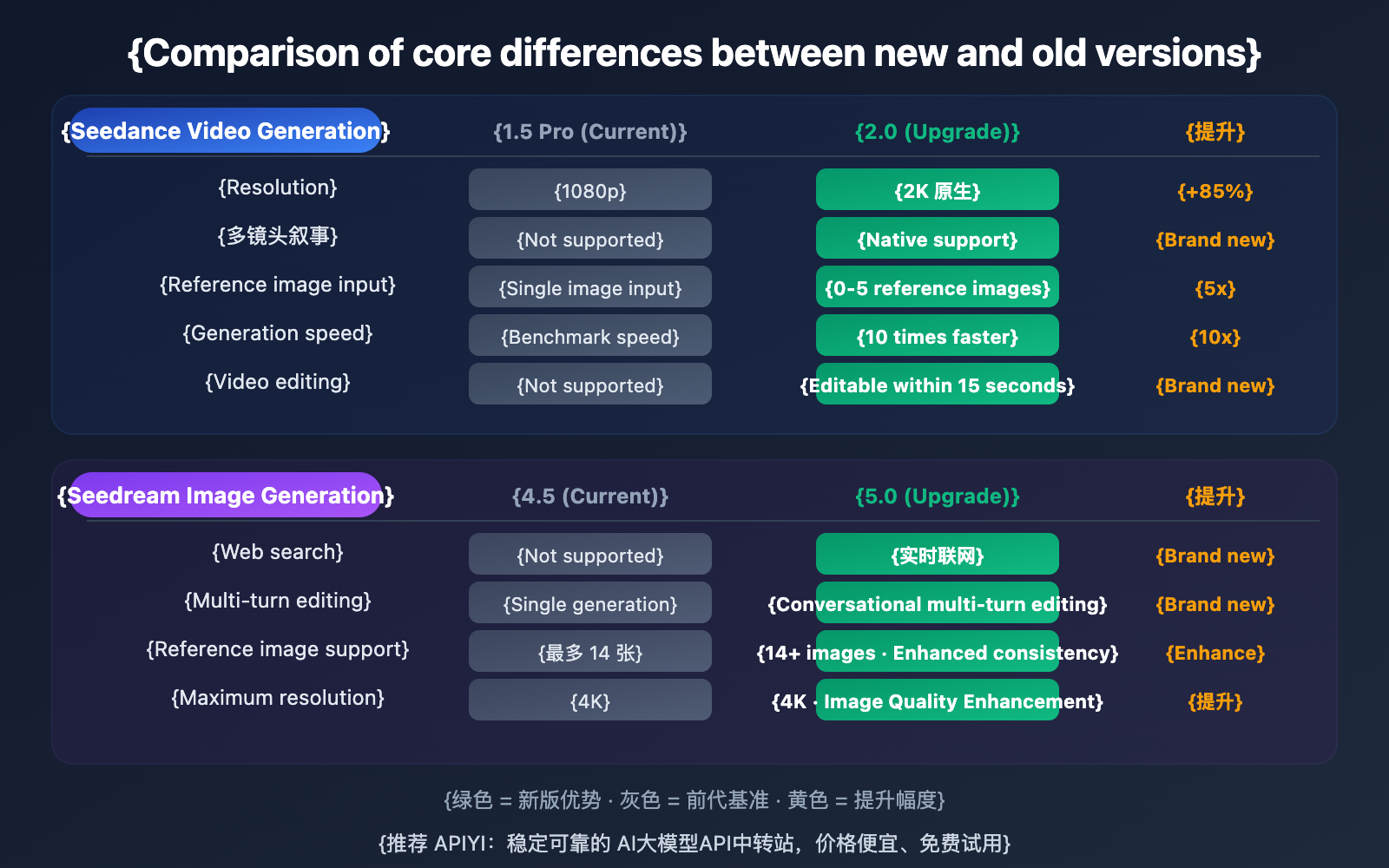

Compared to the previous 1.5 Pro version, Seedance 2.0 has made a leap from "single video clip generation" to "complete scene narrative." Here are the seven core improvements:

Seedance 2.0 Text-to-Video (T2V) Upgrades

Major Boost in Physical Realism: Seedance 2.0 features a retrained physics engine module, making complex physical effects like water flow, smoke, and fabric much more realistic. Character movements now include fine-tuned inertia, gravity response, and collision feedback, which removes the "floating" look common in AI videos.

A Leap in Aesthetics and Image Quality: It now natively supports 2K cinema-grade resolution output—a massive jump from 1080p to 2K. There are also significant improvements in color science, lighting/shadow rendering, and depth-of-field control, giving generated footage a true cinematic texture.

Automated Storyboarding and Scene Generation: This is one of Seedance 2.0's most groundbreaking features. By inputting a single text description, the model automatically splits it into multiple shots while maintaining character consistency, lighting continuity, and plot coherence. It's a shift from "generating a clip" to "generating a full scene."

Seedance 2.0 Image-to-Video (I2V) Upgrades

| Upgrade Dimension | Seedance 1.5 Pro | Seedance 2.0 | Improvement Level |
|---|---|---|---|
| Micro-expressions | Basic expression changes | Precise micro-expression capture | Significant |
| Motion Continuity | Occasional frame skipping | Smooth, natural transitions | Massive |
| Character Consistency | Consistent within a single shot | Cross-shot identity locking | Major Breakthrough |
| Product Details | Blurry or lost details | High-fidelity restoration | Significant |
| Video Editing | Not supported | Editable videos up to 15s | Brand New Feature |

Seedance 2.0 Multi-modal Input and Voice Enhancement

Multi-Reference Image Input (0-5 images): Seedance 2.0 introduces a "Universal Reference" system that supports inputting anywhere from 0 to 5 reference images. The system accurately replicates composition, camera movement, and character actions from the references, fundamentally solving character consistency and physical continuity issues.

Simultaneous Video and Image Input: You can now provide both images and videos as reference materials in a single generation, allowing for more precise style transfer and content control.

Video Completion and Extension: The model can complete or extend existing video clips, making it perfect for creating long-form videos or repairing missing segments.

Realistic Voice Support: It synthesizes speech based on real human voices and supports multi-character dialogue scenes with two or more characters. Accuracy in multilingual scenarios (Chinese, English, Spanish, etc.) has been significantly improved, with more natural lip-syncing.

🎯 Tech Tip: Seedance 2.0's multi-reference and video editing features are perfect for commercial content production. Once the model is live, developers can quickly access it for testing via the unified API interface on the APIYI (apiyi.com) platform.

Seedream 5.0: Core Breakthroughs in Jimeng Image Generation

Seedream 5.0 is the latest masterpiece from ByteDance's Seed team in the field of image generation. Compared to Seedream 4.5, it achieves several "0 to 1" breakthroughs.

Seedream 5.0 Web Search Capability

For the first time, Seedream 5.0 introduces web search capabilities to an image generation model. This means the model is no longer limited to the knowledge in its training data. When you ask it to generate the "latest 2025 Tesla Model Y," the model can search for the newest product photos and specs in real time and generate an image that closely matches reality.

This capability is especially crucial for:

- News Illustration: Generating accurate images related to current events.

- Product Marketing: Creating promotional materials for the latest products.

- Trend-based Creation: Creative content that stays ahead of the curve.

Seedream 5.0 Multi-turn Image-Text Editing

Traditional image generation is a "one-shot" deal—if you're not happy with the result, you usually have to rewrite the prompt and start over. Seedream 5.0 changes this paradigm by introducing multi-turn conversational editing:

| Editing Capability | Description | Use Case |
|---|---|---|
| Localized Modification | Precise edits in specific areas | Adjusting expressions, replacing backgrounds |
| Style Transfer | Change style while keeping content | Photo to illustration, realism to anime |
| Element Add/Remove | Add or remove specific objects | Adding a product, removing clutter |
| Text Rendering | Accurate text rendering within images | Poster design, logo creation |
| Continuous Adjustment | Edit based on previous results | Progressive creation, fine-tuning |
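To make the "edit based on previous results" idea concrete, here is a minimal sketch of how a client might keep the conversation history between turns. It assumes the model is reached through an OpenAI-style chat message format; the `EditSession` class, the `seedream-5.0` model identifier, and the example reply URL are hypothetical and only illustrate the bookkeeping, not a confirmed API.

```python
from dataclasses import dataclass, field


@dataclass
class EditSession:
    """Accumulates the chat history for a multi-turn image-editing conversation."""

    model: str = "seedream-5.0"  # hypothetical model identifier
    messages: list[dict] = field(default_factory=list)

    def add_instruction(self, text: str) -> dict:
        """Append a user instruction and return the request payload for this turn."""
        self.messages.append({"role": "user", "content": text})
        # Send the full history so each edit builds on the previous result.
        return {"model": self.model, "messages": list(self.messages)}

    def record_result(self, content: str) -> None:
        """Store the model's reply (for example an image URL) as context for later turns."""
        self.messages.append({"role": "assistant", "content": content})


session = EditSession()
first = session.add_instruction("A poster of a red sports car on a coastal road")
session.record_result("https://example.com/image-v1.png")  # placeholder reply
second = session.add_instruction("Keep the car, but change the background to a city at night")
print(len(first["messages"]), len(second["messages"]))  # prints "1 3"
```

The second payload carries the first prompt and its result, which is what lets a follow-up instruction such as "keep the car" refer back to the earlier image instead of starting over.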

Core Upgrades: Seedream 5.0 vs. Seedream 4.5

While Seedream 4.5 already performed excellently in multi-image synthesis and character consistency (supporting up to 14 reference images), Seedream 5.0 adds the two core pillars of web search and interactive editing, while further pushing the envelope on overall image quality.

💡 Selection Advice: Seedream 5.0's web search and multi-turn editing are ideal for creative workflows that require frequent iteration. We recommend trying it out via APIYI (apiyi.com) to compare how different models perform in your specific use cases.

Seedance 2.0 API Quick Integration Guide

A Simple Example

Here's the basic code for calling Seedance 2.0 text-to-video using an OpenAI-compatible interface:

```python
import openai

client = openai.OpenAI(
    api_key="YOUR_API_KEY",
    base_url="https://vip.apiyi.com/v1",  # Use the APIYI unified interface
)

# Seedance 2.0 text-to-video call
response = client.chat.completions.create(
    model="seedance-2.0",
    messages=[
        {
            "role": "user",
            "content": "A golden retriever running on the beach at sunset, slow-motion close-up, cinematic quality",
        }
    ],
)

print(response.choices[0].message.content)
```

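Full Seedance 2.0 image-to-video code:

```python
import base64
from pathlib import Path

import openai

MAX_REFERENCE_IMAGES = 5  # Seedance 2.0 accepts 0-5 reference images


def encode_image(image_path: str) -> dict:
    """Read a local PNG and wrap it as an OpenAI-style image_url content part."""
    image_data = base64.b64encode(Path(image_path).read_bytes()).decode()
    return {
        "type": "image_url",
        "image_url": {"url": f"data:image/png;base64,{image_data}"},
    }


def seedance_image_to_video(
    image_path: str,
    prompt: str,
    reference_images: list[str] | None = None,
    duration: int = 5,
) -> str:
    """
    Seedance 2.0 Image-to-Video API call

    Args:
        image_path: Path to the main image
        prompt: Video prompt/description
        reference_images: List of reference image paths (0-5)
        duration: Video duration (seconds)

    Returns:
        Video URL or Base64 data
    """
    reference_images = reference_images or []
    if len(reference_images) > MAX_REFERENCE_IMAGES:
        raise ValueError("Seedance 2.0 accepts at most 5 reference images")

    client = openai.OpenAI(
        api_key="YOUR_API_KEY",
        base_url="https://vip.apiyi.com/v1",  # APIYI unified interface
    )

    # Main image first, then any reference images, then the text prompt.
    # Assumptions: reference images are sent as extra image_url parts, and the
    # requested duration is stated in the prompt because no dedicated duration
    # field is shown for this interface.
    content = [encode_image(image_path)]
    content += [encode_image(path) for path in reference_images]
    content.append({"type": "text", "text": f"{prompt} (duration: {duration} seconds)"})

    response = client.chat.completions.create(
        model="seedance-2.0-i2v",
        messages=[{"role": "user", "content": content}],
        max_tokens=4096,
    )
    return response.choices[0].message.content


# Usage example
if __name__ == "__main__":
    result = seedance_image_to_video(
        image_path="product.png",
        prompt="Product slowly rotating on a desk for display, soft lighting, commercial advertisement style",
        duration=8,
    )
    print(result)
```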
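The image-to-video function is documented as returning either a video URL or Base64 data, so the caller has to tell the two apart. The sketch below shows one way to do that; `handle_video_result` and the default `output.mp4` file name are hypothetical, and the exact response format of the live service is an assumption until the models are released.

```python
import base64
import binascii
from pathlib import Path


def handle_video_result(content: str, output_path: str = "output.mp4") -> str:
    """Return a URL unchanged, or decode Base64 data to a file and return its path."""
    text = content.strip()
    if not text:
        raise ValueError("Response is empty")
    if text.startswith(("http://", "https://")):
        return text
    # Strip an optional data-URI prefix such as "data:video/mp4;base64,".
    if text.startswith("data:") and "," in text:
        text = text.split(",", 1)[1]
    try:
        data = base64.b64decode(text, validate=True)
    except binascii.Error as exc:
        raise ValueError("Response is neither a URL nor valid Base64 data") from exc
    Path(output_path).write_bytes(data)
    return output_path


print(handle_video_result("https://example.com/video.mp4"))
```

Decoding with `validate=True` rejects stray characters instead of silently skipping them, so a plain-text error message from the service is reported as an error rather than written to disk as a corrupt video.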

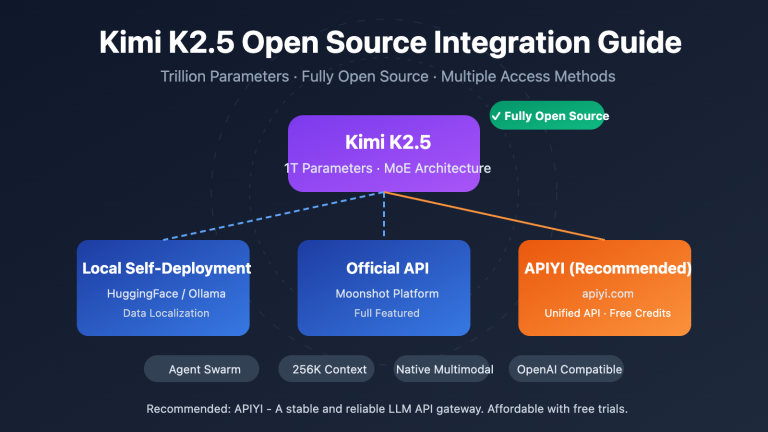

🚀 Quick Start: We recommend using the APIYI (apiyi.com) platform for quick access to Seedance 2.0 and Seedream 5.0. The platform provides a unified OpenAI-compatible interface, so you don't have to deal with multiple providers—you can get integrated in just 5 minutes.

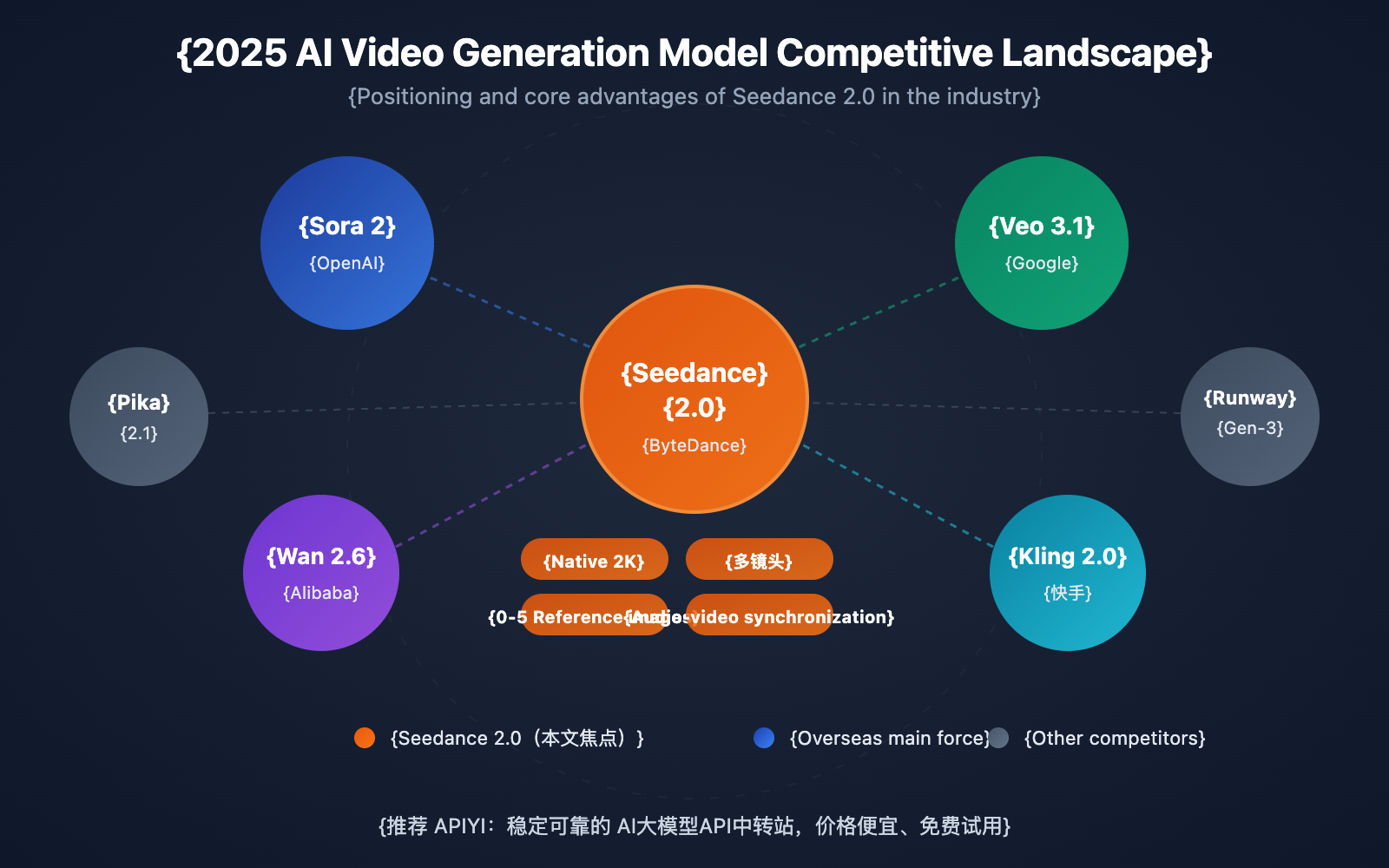

Seedance 2.0 vs. Competitor Video Models

| Comparison Dimension | Seedance 2.0 | Sora 2 | Veo 3.1 | Wan 2.6 |
|---|---|---|---|---|
| Max Resolution | Native 2K | 1080p | 1080p | 1080p |
| Multi-shot Narrative | Native Support | Limited Support | Not Supported | Not Supported |
| Audio-Video Sync | Native Joint Gen | Requires Post-prod | Native Support | Not Supported |
| Multi-reference Images | 0-5 images | Not Supported | Not Supported | Limited Support |
| Lip Sync | 8+ Languages | Primarily English | Multi-language | Chinese & English |
| Video Editing | Supported (within 15s) | Limited Support | Not Supported | Not Supported |
| Generation Speed | 10x faster than 1.5 Pro | Slower | Medium | Faster |
| Available Platforms | Jimeng / APIYI, etc. | OpenAI Official | Google Official | Open Source Community |

Comparison Notes: The above data is based on official parameters and public test results; actual performance may vary depending on specific scenarios. We recommend conducting side-by-side testing once the models are fully released.
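For the side-by-side testing recommended above, a small harness that sends the same prompt to each model and records latency can be enough to start with. This is a sketch under stated assumptions: `compare_models` is a hypothetical helper, and the `generate` callable is injected by the caller so the harness itself makes no claim about any provider's API. The stand-in `fake_generate` only exists to make the example runnable offline.

```python
import time
from typing import Callable


def compare_models(
    models: list[str],
    prompt: str,
    generate: Callable[[str, str], str],
) -> list[dict]:
    """Run the same prompt through each model and record latency and output."""
    results = []
    for model in models:
        start = time.perf_counter()
        try:
            output, error = generate(model, prompt), None
        except Exception as exc:  # keep going so one failure does not end the run
            output, error = None, str(exc)
        results.append({
            "model": model,
            "seconds": round(time.perf_counter() - start, 2),
            "output": output,
            "error": error,
        })
    return results


def fake_generate(model: str, prompt: str) -> str:
    """Stand-in for a real API call; replace with your own client code."""
    return f"https://example.com/{model}.mp4"


for row in compare_models(["seedance-1.5-pro", "seedance-2.0"], "A dog on a beach", fake_generate):
    print(row["model"], row["seconds"], row["output"] or row["error"])
```

Latency is only one dimension; the recorded outputs still need a human review for quality, consistency, and prompt adherence.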

The Impact of Seedance 2.0 and Seedream 5.0 on Developers

Impact on AI Video Creators

Seedance 2.0's multi-shot storytelling and character consistency lock shift AI video creation from "generating short clips" to "generating complete stories." For ad production, short video creation, and educational content, this means:

- Production Efficiency Boost: A single prompt can generate a coherent multi-shot video, eliminating the need to generate segments one by one and stitch them together.

- Character Consistency: Series content, IP characters, and brand spokespersons can maintain consistency across different videos.

- Significant Cost Reduction: The cost of commercial-grade video production is expected to drop from tens of thousands of dollars to just a few bucks.

Impact on AI Image Creators

Seedream 5.0's multi-turn editing capabilities change the image creation workflow—moving from "one-off generation" to "continuous conversational creation." This represents a fundamental efficiency boost for designers, marketing teams, and content creators.

Impact on Developers and API Users

Both models will provide API services through Volcengine (Volcano Ark). For developers already using the Seedance 1.5 Pro or Seedream 4.5 APIs, the migration cost to the new versions should be low, since the API interfaces remain highly compatible.

💰 Cost Tip: There are usually promotional activities during the initial launch of new models. Accessing them through APIYI (apiyi.com) can provide more flexible billing options, which is perfect for small to medium teams looking to do thorough testing before a full rollout.

FAQ

Q1: When will Seedance 2.0 and Seedream 5.0 be available?

According to insider info, both models are expected to officially launch on February 24, 2025. At that time, they'll be opened simultaneously via the Jimeng platform and the Volcengine API, with third-party API aggregation platforms following shortly after.

Q2: How do I use Seedance 2.0’s multi-reference image feature?

Seedance 2.0 supports inputting anywhere from 0 to 5 reference images. When making an API call, just include the Base64 encoding or URL of the reference images in your request. The system will automatically extract and replicate the composition, character traits, and camera movements from the reference images. We recommend getting some free credits from APIYI (apiyi.com) to test it out.
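As a rough illustration of the answer above, the following sketch builds one user message that mixes reference images given as URLs or as local files (encoded to Base64). It assumes the OpenAI-style `image_url` content format used elsewhere in this article; `to_image_part` and `build_reference_message` are hypothetical helper names, and how the live API actually expects reference images is not confirmed.

```python
import base64
import mimetypes
from pathlib import Path

MAX_REFERENCE_IMAGES = 5  # upper limit stated in the article


def to_image_part(source: str) -> dict:
    """Turn a URL or local file path into an OpenAI-style image_url content part."""
    if source.startswith(("http://", "https://")):
        url = source
    else:
        mime = mimetypes.guess_type(source)[0] or "image/png"
        encoded = base64.b64encode(Path(source).read_bytes()).decode()
        url = f"data:{mime};base64,{encoded}"
    return {"type": "image_url", "image_url": {"url": url}}


def build_reference_message(prompt: str, references: list[str]) -> dict:
    """Build a single user message with 0-5 reference images followed by the prompt."""
    if len(references) > MAX_REFERENCE_IMAGES:
        raise ValueError(f"At most {MAX_REFERENCE_IMAGES} reference images are supported")
    content = [to_image_part(ref) for ref in references]
    content.append({"type": "text", "text": prompt})
    return {"role": "user", "content": content}


message = build_reference_message(
    "Same character walking through a rainy street",
    ["https://example.com/character.png", "https://example.com/street.png"],
)
print(len(message["content"]))  # prints "3"
```

Checking the count on the client side gives a clear error before any upload happens, which is cheaper than waiting for the service to reject the request.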

Q3: Will Seedream 5.0’s web search feature increase latency?

Web searching will slightly increase generation time (expected to add about 2-5 seconds), but in exchange, you get image content that's highly accurate to the real world. For scenarios where you don't need live info, you can turn off the search feature to maintain original speeds.

Q4: Do I need to change my code to upgrade from Seedance 1.5 Pro to 2.0?

The API interfaces remain highly compatible. The main change is just the model name parameter. If you're using an OpenAI-compatible interface, you usually only need to change the model parameter from seedance-1.5-pro to seedance-2.0, and the rest of your code can stay exactly as it is.

Summary

Here are the key takeaways for Seedance 2.0 and Seedream 5.0:

- Seedance 2.0 Video Generation: Native 2K resolution, multi-shot storytelling, support for 0-5 reference images, video completion editing, and multilingual lip-syncing.

- Seedream 5.0 Image Generation: Introduces web search capabilities for the first time, multi-turn conversational image/text editing, and continuous improvements in image quality.

- Release Date: Expected to launch simultaneously on February 24, 2025, via the Jimeng (Dreamina) platform and Volcengine APIs.

- Developer Friendly: APIs are backward compatible, meaning migration costs are low and there's a wealth of ecosystem tools available.

ByteDance's dual-model upgrade strategy shows their commitment to building a complete "video + image" creative ecosystem. Whether you're into commercial content production or personal creative expression, these two models are set to deliver significant boosts in both efficiency and quality.

We recommend using APIYI (apiyi.com) to quickly verify the performance of Seedance 2.0 and Seedream 5.0. The platform offers free testing credits and a unified interface for multiple models, helping you experience the latest AI creative tools right away.


Author: APIYI Team

Technical Discussion: Feel free to discuss your experience with Seedance 2.0 and Seedream 5.0 in the comments. For more AI model API integration guides, visit the APIYI (apiyi.com) technical community.