Author's Note: A detailed analysis of the capability differences and applicable scenarios of OpenAI's 6 small models, including GPT-4.1-mini, GPT-4.1-nano, GPT-4o-mini, etc., to help developers choose the most suitable lightweight model solution.

Choosing the right AI model is one of the core challenges developers face. OpenAI's small model series offers low-cost options for budget-sensitive applications. This article covers the capabilities and best-fit scenarios of 6 lightweight models, including GPT-4.1-mini, GPT-4.1-nano, and GPT-4o-mini.

Core Value: After reading this article, you will master the selection strategy for OpenAI's small models and be able to choose the most cost-effective model solution based on specific business requirements.

OpenAI Small Models Key Points

| Model | Context Window | Core Advantage | Use Cases |
|---|---|---|---|
| GPT-4.1-mini | 1M tokens | Matches or beats GPT-4o on many benchmarks at roughly half the latency (per OpenAI) | Complex reasoning, long document processing |
| GPT-4.1-nano | 1M tokens | Lowest cost, fastest speed | Classification, filtering, simple dialogue |
| GPT-4o-mini | 128K tokens | Mature and stable, complete ecosystem | Daily conversations, basic tasks |

OpenAI Small Model Family Overview

OpenAI's small model strategy has evolved from GPT-4o-mini to the GPT-4.1 series through iterative upgrades. GPT-4o-mini, released in July 2024, pioneered the era of cost-effective small models, while the GPT-4.1 series, released in April 2025, elevated small model capabilities to new heights.

GPT-4.1-mini performs well across multiple benchmarks, with an MMLU score of 87.5%, a significant improvement over GPT-4o-mini's 82%. OpenAI also reports that it matches or exceeds the much larger GPT-4o on many intelligence evaluations at roughly half the latency. It does not match the full GPT-4.1 on coding benchmarks, but its price makes it a strong choice for code assistance scenarios.

OpenAI Small Model Technical Features

The biggest technical breakthrough of the GPT-4.1 series is the 1 million token context window, which gives small models the ability to process ultra-long documents for the first time. In OpenAI's needle-in-a-haystack tests, GPT-4.1 series models achieved 100% retrieval accuracy across the full window, which indicates that the long context is usable in practice and not just a headline number.
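
Before sending a long document, it can help to check whether it plausibly fits the target model's window. The following is a minimal sketch, not an exact method: the helper names `estimate_tokens` and `fits_in_context` are hypothetical, the window sizes are the rounded figures quoted in this article, and the 4-characters-per-token ratio is a rough assumption for English text. A real tokenizer should be used when precision matters.

```python
# Context window sizes (in tokens) as quoted in this article, rounded.
CONTEXT_WINDOW = {
    "gpt-4.1-mini": 1_000_000,
    "gpt-4.1-nano": 1_000_000,
    "gpt-4o-mini": 128_000,
}

# Assumption: roughly 4 characters per token for English text.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Very rough token estimate based on character count."""
    return len(text) // CHARS_PER_TOKEN + 1


def fits_in_context(text: str, model: str, reserved_output: int = 4_000) -> bool:
    """Check whether the prompt plus a reserved output budget fits the window."""
    return estimate_tokens(text) + reserved_output <= CONTEXT_WINDOW[model]


# A synthetic 2M-character document, about 500K tokens under the heuristic.
document = "x" * 2_000_000
for name in CONTEXT_WINDOW:
    print(name, fits_in_context(document, name))
```

Under these assumptions the synthetic document fits the two GPT-4.1 models but not GPT-4o-mini, which is the situation where chunking or switching models becomes necessary.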

Another important feature is the GPT-4.1 series' more precise "literal understanding" of instructions. OpenAI officially notes: "prompt migration is likely required" – developers need to retest existing prompts, as the new models will execute instructions more strictly without "inferring" implicit intentions.
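
One way to approach that retesting is a small regression harness that runs each existing prompt against the old and new model and applies a pass/fail check to the reply. The sketch below is illustrative only: `regression_report`, `AskFn`, and `fake_ask` are hypothetical names, and `fake_ask` returns invented replies so the example runs offline. Its output is not a measurement of either model. In practice `ask` would wrap a real chat completion call.

```python
from typing import Callable, Dict, List

# Hypothetical signatures: ask(model, prompt) -> reply, check(reply) -> passed?
AskFn = Callable[[str, str], str]
CheckFn = Callable[[str], bool]


def regression_report(
    prompts: Dict[str, CheckFn],
    models: List[str],
    ask: AskFn,
) -> Dict[str, Dict[str, bool]]:
    """Run every prompt against every model and record whether its check passes."""
    report: Dict[str, Dict[str, bool]] = {}
    for prompt, check in prompts.items():
        report[prompt] = {model: check(ask(model, prompt)) for model in models}
    return report


def fake_ask(model: str, prompt: str) -> str:
    """Offline stand-in with invented replies; replace with a real API call."""
    if model == "gpt-4.1-mini":
        return "Paris"
    return "The capital of France is Paris."


prompts = {
    # The check encodes the behavior the prompt is supposed to produce.
    "Answer in one word: what is the capital of France?":
        lambda reply: len(reply.split()) == 1,
}

report = regression_report(prompts, ["gpt-4o-mini", "gpt-4.1-mini"], fake_ask)
for prompt, results in report.items():
    print(prompt, results)
```

Keeping the checks next to the prompts makes it easy to see which prompts rely on the model guessing at unstated intent and need to be rewritten with explicit instructions.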

OpenAI Small Models Complete List

Below is detailed information about the 6 model IDs covered in this article: three models, each available under an alias and a dated snapshot name.

| Model Name | Release Date | Input Price (per 1M tokens) | Output Price (per 1M tokens) | Max Output |
|---|---|---|---|---|
| gpt-4.1-mini | 2025-04-14 | $0.40 | $1.60 | 32K tokens |
| gpt-4.1-mini-2025-04-14 | 2025-04-14 | $0.40 | $1.60 | 32K tokens |
| gpt-4.1-nano | 2025-04-14 | $0.10 | $0.40 | 32K tokens |
| gpt-4.1-nano-2025-04-14 | 2025-04-14 | $0.10 | $0.40 | 32K tokens |
| gpt-4o-mini | 2024-07-18 | $0.15 | $0.60 | 16K tokens |
| gpt-4o-mini-2024-07-18 | 2024-07-18 | $0.15 | $0.60 | 16K tokens |

🎯 Special Offer: The APIyi platform has launched the SpecialPerks group, which offers a 50% discount on the above small models with high concurrency and official routing. Visit apiyi.com for details.

Quick Start with OpenAI Small Models

Minimal Example

Here's the simplest code to call OpenAI small models, running in just 10 lines:

```python
import openai

client = openai.OpenAI(
    api_key="YOUR_API_KEY",
    base_url="https://vip.apiyi.com/v1"
)

response = client.chat.completions.create(
    model="gpt-4.1-mini",
    messages=[{"role": "user", "content": "Explain what a Token is"}]
)
print(response.choices[0].message.content)
```

Complete implementation code (with model switching):

```python
import openai
from typing import Literal, Optional

# The three model aliases covered in this article
ModelType = Literal[
    "gpt-4.1-mini",
    "gpt-4.1-nano",
    "gpt-4o-mini",
]

# Create the client once and reuse it across calls
client = openai.OpenAI(
    api_key="YOUR_API_KEY",
    base_url="https://vip.apiyi.com/v1",
)


def call_small_model(
    prompt: str,
    model: ModelType = "gpt-4.1-mini",
    system_prompt: Optional[str] = None,
    max_tokens: int = 2000,
) -> str:
    """
    Wrapper function for calling OpenAI small models

    Args:
        prompt: User input
        model: Model name, supports gpt-4.1-mini/nano, gpt-4o-mini
        system_prompt: System prompt
        max_tokens: Maximum output tokens

    Returns:
        Model response content, or an "Error: ..." string if the request fails
    """
    messages = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": prompt})

    try:
        response = client.chat.completions.create(
            model=model,
            messages=messages,
            max_tokens=max_tokens,
        )
        # message.content is Optional, so fall back to an empty string
        return response.choices[0].message.content or ""
    except openai.OpenAIError as e:
        # Catch SDK errors only (network, auth, rate limits, bad requests)
        return f"Error: {e}"


# Usage example: compare different models
models = ["gpt-4.1-mini", "gpt-4.1-nano", "gpt-4o-mini"]
for m in models:
    result = call_small_model("Explain machine learning in one sentence", model=m)
    print(f"{m}: {result[:100]}...")
```

Recommendation: Get free testing credits through APIyi at apiyi.com. The platform supports unified API calls for all the above small models, and the SpecialPerks group offers a 50% discount.

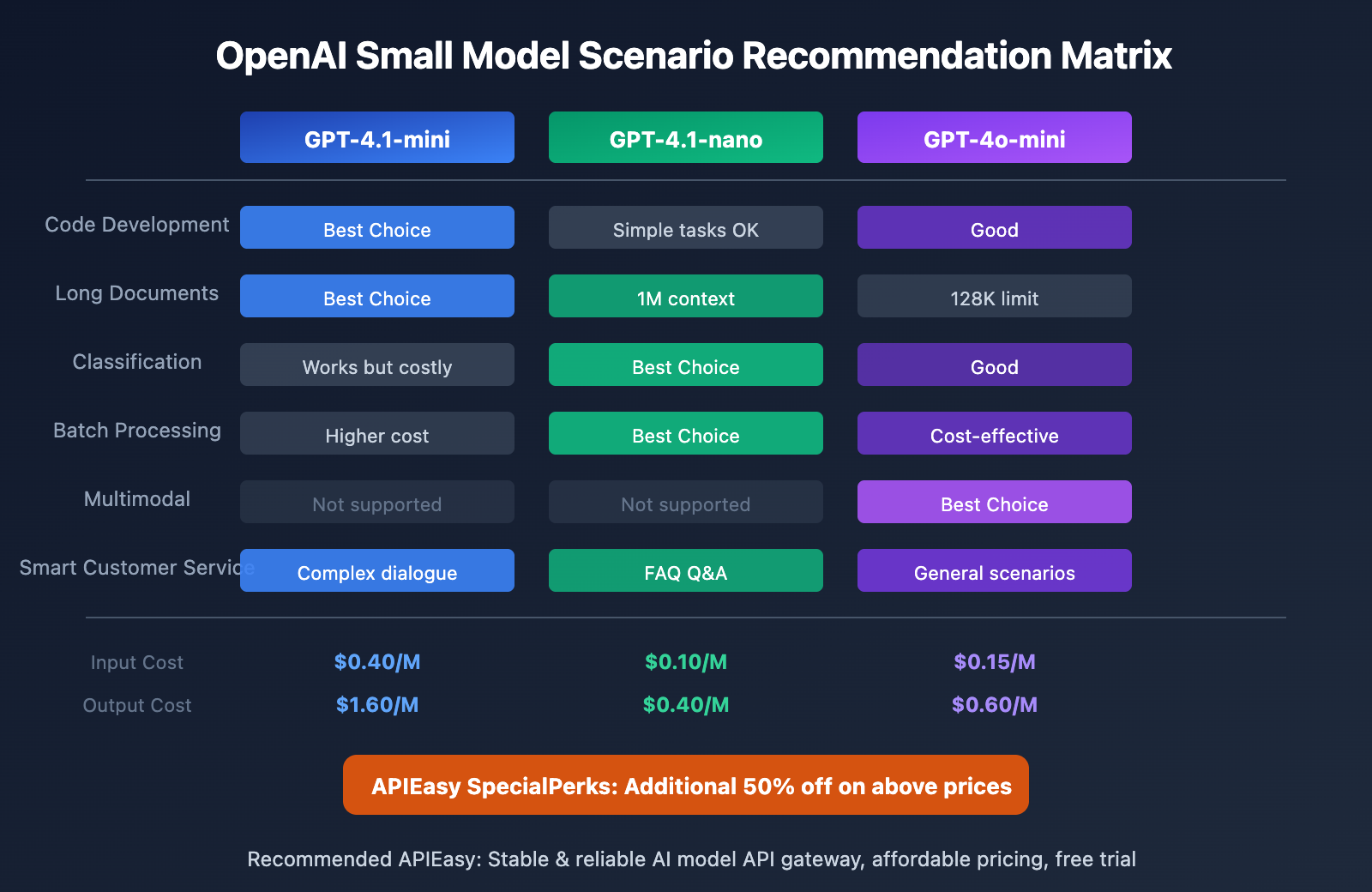

OpenAI Small Model Application Scenarios

GPT-4.1-mini Best Scenarios

GPT-4.1-mini is the most comprehensive small model, suitable for the following scenarios:

- Code Development Assistance: Code completion, code review, bug analysis

- Long Document Processing: Contract analysis, paper summarization, technical documentation understanding

- Complex Conversational Systems: Customer service bots, intelligent assistants, knowledge Q&A

- Data Analysis: Data interpretation, report generation, trend analysis

GPT-4.1-nano Best Scenarios

GPT-4.1-nano is the most cost-effective choice, suitable for high-throughput scenarios:

- Content Classification: Sentiment analysis, tag classification, spam filtering

- Data Extraction: Entity recognition, keyword extraction, format conversion

- Simple Conversations: FAQ responses, guided dialogues, form filling

- Batch Processing: Large-scale text cleaning, data annotation assistance
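
For classification workloads like these, most of the work is in constraining the output and parsing it defensively. The sketch below shows one possible shape, assuming a sentiment task. The label set and the helper names `build_classification_messages` and `parse_label` are hypothetical and not part of any SDK.

```python
from typing import Dict, List

# Hypothetical label set for a sentiment classification task.
LABELS = ["positive", "negative", "neutral"]


def build_classification_messages(text: str) -> List[Dict[str, str]]:
    """Build chat messages that ask for exactly one label and nothing else."""
    system = (
        "Classify the sentiment of the user's text. "
        f"Reply with exactly one of: {', '.join(LABELS)}. No other words."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": text},
    ]


def parse_label(reply: str, default: str = "neutral") -> str:
    """Normalize the model reply and fall back to a default on anything unexpected."""
    cleaned = reply.strip().lower().strip(".!\"'")
    return cleaned if cleaned in LABELS else default


messages = build_classification_messages("The delivery was late again.")
print(messages[0]["content"])
print(parse_label(" Negative. "))
```

The messages can be passed to `client.chat.completions.create(model="gpt-4.1-nano", messages=messages, max_tokens=5)`. A small `max_tokens` keeps output cost minimal, which matters at batch scale.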

GPT-4o-mini Best Scenarios

GPT-4o-mini is the most mature and stable choice, suitable for:

- Mature Business Systems: Validated production environments, stability-first scenarios

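- Multimodal Tasks: Image understanding and visual Q&A in existing pipelines (note that GPT-4.1-mini and GPT-4.1-nano also accept image input)

- Budget-Sensitive Projects: Input at $0.15 per million tokens, cheaper than GPT-4.1-mini ($0.40) though not GPT-4.1-nano ($0.10)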

OpenAI Small Model Performance Comparison

| Metric | GPT-4.1-mini | GPT-4.1-nano | GPT-4o-mini |
|---|---|---|---|
| MMLU Score | 87.5% | ~80% | 82% |
| Context Window (tokens) | 1M | 1M | 128K |
| Max Output (tokens) | 32K | 32K | 16K |
| Response Speed | Fast | Fastest | Medium |
| Training Data Cutoff | 2024-06 | 2024-06 | 2023-10 |
| Instruction Following | Precise, literal | Precise, literal | Moderate inference of intent |

Cost-Effectiveness Analysis

Assuming a daily volume of 1M input tokens plus 500K output tokens, and a 30-day month:

| Model | Daily Cost | Monthly Cost | Relative Cost |
|---|---|---|---|
| GPT-4.1-nano | $0.30 | $9.00 | Lowest (Baseline) |
| GPT-4o-mini | $0.45 | $13.50 | 1.5x |
| GPT-4.1-mini | $1.20 | $36.00 | 4x |
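
The figures in this table follow directly from the per-million-token prices listed earlier. The short sketch below reproduces the arithmetic so you can plug in your own volumes. The `daily_cost` helper is a hypothetical name, and the 30-day month is an assumption.

```python
# USD per 1M tokens as listed in this article: (input price, output price)
PRICES = {
    "gpt-4.1-nano": (0.10, 0.40),
    "gpt-4o-mini": (0.15, 0.60),
    "gpt-4.1-mini": (0.40, 1.60),
}

DAYS_PER_MONTH = 30  # assumption used for the monthly figure


def daily_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD for one day's traffic on the given model."""
    input_price, output_price = PRICES[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# The workload assumed in this section: 1M input + 500K output tokens per day
baseline = daily_cost("gpt-4.1-nano", 1_000_000, 500_000)
for model in PRICES:
    day = daily_cost(model, 1_000_000, 500_000)
    print(f"{model}: ${day:.2f}/day, ${day * DAYS_PER_MONTH:.2f}/month, {day / baseline:.1f}x")
```

Because output tokens cost four times as much as input tokens on all three models, workloads with long generations shift the totals more than the input volume does.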

Money-Saving Tip: The APIYI SpecialPerks tier offers a 50% discount, which halves the above costs. Visit apiyi.com to activate the SpecialPerks tier.

OpenAI Small Model Selection Decision

Decision Process

- Identify Core Requirements: Are you prioritizing quality, speed, or cost?

- Evaluate Context Length: Do you need to process content exceeding 128K tokens?

- Consider Multimodal Needs: Do you require image understanding capabilities?

- Test Real-World Performance: Validate model performance with actual data

Quick Selection Guide

| Priority | Recommended Model | Rationale |
|---|---|---|
| Comprehensive Capability | GPT-4.1-mini | Strongest performance, largest context |
| Ultimate Cost Efficiency | GPT-4.1-nano | Lowest price, fastest speed |
| Stable & Reliable | GPT-4o-mini | Mature ecosystem, long production track record |
| Long Documents | GPT-4.1-mini/nano | 1M context window |
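
The guide above can be encoded as a small helper for routing requests. This is one possible reading of the table and not an official rule. The function name `recommend_model` and the priority labels are hypothetical, and the 128K threshold is the GPT-4o-mini context window quoted in this article.

```python
from typing import Literal

# Hypothetical priority labels mirroring the rows of the selection guide.
Priority = Literal["quality", "cost", "stability"]


def recommend_model(priority: Priority, max_context_tokens: int = 0) -> str:
    """Map a priority and an expected prompt size to one of the three models."""
    # Anything above 128K tokens rules out gpt-4o-mini.
    needs_long_context = max_context_tokens > 128_000
    if priority == "cost":
        return "gpt-4.1-nano"
    if priority == "stability" and not needs_long_context:
        return "gpt-4o-mini"
    # Quality-first requests, and stability-first requests that need long context
    return "gpt-4.1-mini"


print(recommend_model("cost"))
print(recommend_model("stability"))
print(recommend_model("stability", max_context_tokens=500_000))
```

A helper like this is only a starting point. The final choice should still come from testing with real data, as the decision process recommends.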

🎯 Selection Recommendation: We recommend running side-by-side tests on the APIYI platform (apiyi.com), which supports unified API calls for multiple models and makes it easy to validate how each model performs in your scenarios.

FAQ

Q1: GPT-4.1-mini vs GPT-4o-mini – Which Should You Choose?

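If you need to handle long documents or want higher reasoning quality, choose GPT-4.1-mini. If you already run a validated GPT-4o-mini deployment or want lower input costs ($0.15 vs. $0.40 per million tokens), GPT-4o-mini remains a reasonable choice. Both accept image input, so multimodal support alone does not decide between them. Test with your actual business data before making a decision.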

Q2: What Tasks Can GPT-4.1-nano Handle?

GPT-4.1-nano is suitable for classification, extraction, simple Q&A, and similar tasks. It's not recommended for complex reasoning or creative writing. Its biggest advantage is extremely low cost (75% cheaper than GPT-4.1-mini), making it ideal for large-scale batch processing.

Q3: How to Quickly Test These Small Models?

We recommend using the API Yi platform for testing:

- Visit API Yi at apiyi.com and register an account

- Activate the SpecialPerks tier to get a 50% discount

- Obtain an API Key and use the code examples in this article for quick validation

- Compare the actual performance of different models in your business scenario

Summary

Key takeaways about OpenAI's small models:

- GPT-4.1-mini is the Performance Champion: 87.5% MMLU score, 1M-token context window, and strong coding ability for its price

- GPT-4.1-nano is the Cost-Effective Choice: Priced at only 25% of GPT-4.1-mini, ideal for large-scale simple tasks

- GPT-4o-mini is the Stable Choice: Most mature ecosystem, proven in production, and cheaper input than GPT-4.1-mini

When selecting a small model, you should find the right balance between quality, cost, and speed based on your specific business needs.

We recommend quickly validating performance through API Yi at apiyi.com. The SpecialPerks tier offers a 50% discount and high-concurrency official routing, which suits both testing and production deployment.

Author: Technical Team

Technical Discussion: Welcome to discuss in the comments section. For more resources, visit APIYI apiyi.com technical community