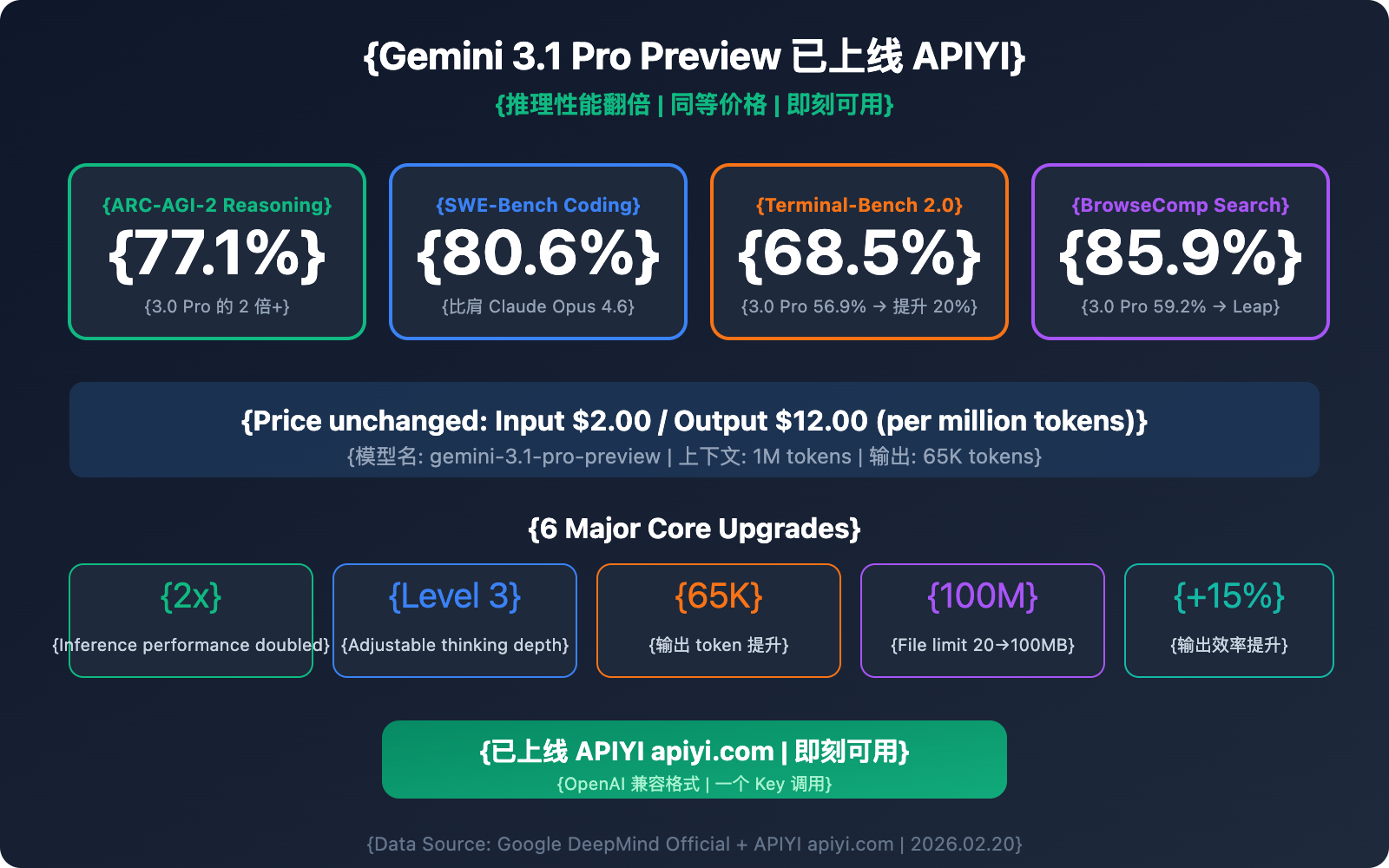

Great news—Gemini 3.1 Pro Preview is now live on APIYI and available for API calls. The model name is gemini-3.1-pro-preview, with prompt pricing at $2.00/1M tokens and completion pricing at $12.00/1M tokens—exactly the same as Gemini 3.0 Pro Preview.

But don't let the price fool you; the performance is on a whole different level. Gemini 3.1 Pro hit 77.1% on the ARC-AGI-2 reasoning benchmark, more than double that of 3.0 Pro. Its SWE-Bench Verified coding score reached 80.6%, putting it in direct competition with Claude Opus 4.6 (80.9%) for the first time. Plus, output efficiency has improved by 15%, giving you more reliable results with fewer tokens.

Core Value: This article dives deep into the 6 major upgrades of Gemini 3.1 Pro Preview, how to call the API, a detailed comparison with competitors, and best practices for various scenarios.

Gemini 3.1 Pro Preview: Key Specifications

| Parameter | Details |

|---|---|

| Model Name | gemini-3.1-pro-preview |

| Release Date | February 19, 2026 |

| Prompt Price (≤200K tokens) | $2.00 / 1M tokens |

| Completion Price (≤200K tokens) | $12.00 / 1M tokens |

| Prompt Price (>200K tokens) | $4.00 / 1M tokens |

| Completion Price (>200K tokens) | $18.00 / 1M tokens |

| Context Window | 1,000,000 tokens (1M) |

| Max Output | 65,000 tokens (65K) |

| File Upload Limit | 100MB (previously 20MB) |

| Knowledge Cutoff | January 2025 |

| APIYI Availability | ✅ Live |

🚀 Try it now: Gemini 3.1 Pro Preview is live on APIYI (apiyi.com). You can call it using the OpenAI-compatible format—no Google account required. Get integrated in just 5 minutes.

6 Core Upgrades of Gemini 3.1 Pro Preview

Upgrade 1: Reasoning Performance Doubled — ARC-AGI-2 Reaches 77.1%

This is the most eye-catching upgrade. In the ARC-AGI-2 benchmark (which evaluates a model's ability to solve entirely new logic patterns), Gemini 3.1 Pro reached 77.1%, which is more than double the score of Gemini 3.0 Pro.

Meanwhile, on the MCP Atlas benchmark (measuring multi-step workflow capabilities using the Model Context Protocol), 3.1 Pro hit 69.2%, a 15-percentage-point jump from 3.0 Pro's 54.1%.

This means that in scenarios involving complex reasoning, multi-step logic chains, and Agent workflows, Gemini 3.1 Pro has made a massive leap forward.

Upgrade 2: Three-Level Thinking Depth System — Deep Think Mini

Gemini 3.1 Pro introduces a brand-new three-level thinking depth system, allowing developers to flexibly adjust the "reasoning budget" based on task complexity:

| Thinking Level | Behavioral Characteristics | Use Cases | Latency Impact |

|---|---|---|---|

| high | A mini version of Gemini Deep Think; deep reasoning | Math proofs, complex debugging, strategic planning | Higher |

| medium | Equivalent to 3.0 Pro's "high" level | Code reviews, technical analysis, architecture design | Moderate |

| low | Fast response, minimal reasoning overhead | Data extraction, format conversion, simple Q&A | Lowest |

Key point: The high level in 3.1 Pro redefines the term—it's now a "mini version" of Gemini Deep Think, with reasoning depth far exceeding 3.0 Pro's high level. Since 3.1's medium is roughly equal to 3.0's high, you can get the original top-tier reasoning quality even when using the medium setting.

Upgrade 3: Coding Skills Join the Elite — SWE-Bench 80.6%

Gemini 3.1 Pro's performance in the coding domain is nothing short of a breakthrough:

| Coding Benchmark | Gemini 3.0 Pro | Gemini 3.1 Pro | Improvement |

|---|---|---|---|

| SWE-Bench Verified | 76.8% | 80.6% | +3.8% |

| Terminal-Bench 2.0 | 56.9% | 68.5% | +11.6% |

| LiveCodeBench Pro | — | Elo 2887 | New Benchmark |

An 80.6% score on SWE-Bench Verified means Gemini 3.1 Pro is now neck-and-neck with Claude Opus 4.6 (80.9%) on software engineering tasks, with a gap of only 0.3 percentage points.

Terminal-Bench 2.0 evaluates an Agent's terminal coding capabilities—the jump from 56.9% to 68.5% shows that 3.1 Pro's reliability in agentic scenarios has been significantly enhanced.

Upgrade 4: Comprehensive Boost in Output and Efficiency

| Feature | Gemini 3.0 Pro | Gemini 3.1 Pro | Improvement |

|---|---|---|---|

| Max Output Tokens | Unknown | 65,000 (65K) | Massive increase |

| File Upload Limit | 20MB | 100MB | 5x increase |

| YouTube URL Support | ❌ | ✅ | New feature |

| Output Efficiency | Baseline | +15% | Fewer tokens for more reliable results |

The 65K output limit means the model can generate entire long documents, large blocks of code, or detailed analysis reports in one go, without needing multiple requests to piece things together.

File uploads have expanded from 20MB to 100MB. Combined with the 1M token context window, you can directly analyze large code repositories, long videos, or massive document sets.

Direct YouTube URL input is a super handy new feature—developers can pass a YouTube link directly into the prompt, and the model will automatically analyze the video content without you having to manually download and upload it.

Upgrade 5: Dedicated customtools Endpoint — A Power Tool for Agent Devs

Google also launched the gemini-3.1-pro-preview-customtools dedicated endpoint, a version deeply optimized for Agent development scenarios:

- Optimized Tool Call Priority: Specifically tuned the calling priority for tools developers use most, like

view_fileandsearch_code. - Bash + Custom Function Hybrid: Perfectly suited for Agent workflows that need to switch between bash commands and custom functions.

- Agentic Scenario Stability: Offers higher reliability in multi-step Agent tasks compared to the general-purpose version.

This means if you're building AI programming assistants, code review bots, or automated DevOps Agents, the customtools endpoint is your best bet.

Upgrade 6: Web Search Breakthrough — BrowseComp 85.9%

The BrowseComp benchmark evaluates a model's Agent web search capabilities. Gemini 3.1 Pro reached 85.9%, while 3.0 Pro was only at 59.2%—a massive jump of 26.7 percentage points.

This capability is huge for applications that require real-time information retrieval, such as research assistants, competitive analysis, and news summarization.

💡 Tech Insight: Gemini 3.1 Pro also introduced the specialized

gemini-3.1-pro-preview-customtoolsendpoint. It's optimized for developers mixing bash commands and custom functions, with specifically tuned priorities for tools likeview_fileandsearch_code. You can call this dedicated endpoint directly via APIYI (apiyi.com).

Hands-on with Gemini 3.1 Pro Preview API

Simple Call Example (Python)

import openai

client = openai.OpenAI(

api_key="YOUR_API_KEY",

base_url="https://api.apiyi.com/v1" # APIYI unified interface

)

response = client.chat.completions.create(

model="gemini-3.1-pro-preview",

messages=[

{"role": "user", "content": "Analyze the time complexity of this code and provide optimization suggestions:\n\ndef two_sum(nums, target):\n for i in range(len(nums)):\n for j in range(i+1, len(nums)):\n if nums[i] + nums[j] == target:\n return [i, j]"}

]

)

print(response.choices[0].message.content)

View full call example (including reasoning depth control and multimodal)

import openai

import base64

client = openai.OpenAI(

api_key="YOUR_API_KEY",

base_url="https://api.apiyi.com/v1" # APIYI unified interface

)

# Example 1: High Reasoning Depth - Complex Mathematical Reasoning

response_math = client.chat.completions.create(

model="gemini-3.1-pro-preview",

messages=[{

"role": "user",

"content": "Prove: For all positive integers n, n^3 - n is always divisible by 6. Please provide a rigorous mathematical proof."

}],

temperature=0.2,

max_tokens=4096

)

# Example 2: Multimodal Analysis - Image Understanding

with open("architecture.png", "rb") as f:

img_data = base64.b64encode(f.read()).decode()

response_vision = client.chat.completions.create(

model="gemini-3.1-pro-preview",

messages=[{

"role": "user",

"content": [

{"type": "text", "text": "Analyze this system architecture diagram in detail, pointing out potential performance bottlenecks and improvement suggestions."},

{"type": "image_url", "image_url": {"url": f"data:image/png;base64,{img_data}"}}

]

}],

max_tokens=8192

)

# Example 3: Long Context Code Analysis

with open("large_codebase.txt", "r") as f:

code_content = f.read() # Can be up to hundreds of thousands of tokens

response_code = client.chat.completions.create(

model="gemini-3.1-pro-preview",

messages=[

{"role": "system", "content": "You are a senior software architect. Please carefully analyze the entire code repository."},

{"role": "user", "content": f"Here is the complete code repository:\n\n{code_content}\n\nPlease analyze:\n1. Overall architectural design\n2. Potential bugs\n3. Performance optimization suggestions\n4. Code refactoring plan"}

],

max_tokens=16384 # Utilizing the 65K output capability

)

print(f"Math Reasoning: {response_math.choices[0].message.content[:200]}...")

print(f"Vision Analysis: {response_vision.choices[0].message.content[:200]}...")

print(f"Code Analysis: {response_code.choices[0].message.content[:200]}...")

🎯 Integration Tip: You can call Gemini 3.1 Pro Preview through APIYI (apiyi.com) using the standard OpenAI SDK—no need to install extra dependencies. If you have an existing OpenAI-format project, just swap the

base_urlandmodelparameters to switch over.