Author's Note: A comprehensive breakdown of xAI's latest Grok 4.20 Beta release, diving deep into the 4 Agents multi-agent collaborative architecture, real-world performance, and use cases to help beginners quickly get up to speed with this cutting-edge AI model.

xAI began rolling out Grok 4.20 (Beta) in mid-February 2026, the most significant release in the Grok series to date. Its headline feature is not a larger parameter count but the 4 Agents multi-agent collaboration system: four specialized AI agents working simultaneously to tackle complex problems from different angles.

Core Value: By the end of this article, you'll have a full understanding of Grok 4.20 Beta's technical architecture, how the 4 Agents mechanism works, actual performance data, and the core differences between it and other AI models.

Grok 4.20 Beta Key Highlights

| Key Point | Description | Value |
|---|---|---|
| 4 Agents Collaboration | 4 specialized agents thinking in parallel + real-time discussion | Massive boost in complex problem-solving |
| 200k GPU Training | Driven by the Colossus supercluster | Industry-leading inference capabilities |
| 256K+ Context | Supports up to 2M context window | Handles ultra-long docs and complex code |
| Native Multimodal | Unified processing of text + image + video | One model covers multiple input scenarios |
| Real-world Validation | Only profitable AI in Alpha Arena competition | Real-world application proven by hard cash |

Grok 4.20 Beta Quick Info

Grok 4.20 (Beta) is currently in an internal Beta rollout phase, available only to SuperGrok (approx. $30/month) and X Premium+ users. The official x.ai blog hasn't posted a formal announcement yet; the latest official record remains the Grok 4.1 version from November 2025.

However, Elon Musk has publicly confirmed the existence of Grok 4.20 on X multiple times, stating that this version "is starting to correctly answer open-ended engineering questions" and performs significantly better than 4.1.

From a technical standpoint, Grok 4.20 inherits the powerful foundation of the Grok 4 series:

- Training Cluster: Colossus supercluster, 200,000 GPUs

- Training Method: Large-scale Reinforcement Learning (RL) directly at the pre-training scale, improving computational efficiency by about 6x

- Parameter Scale: Based on an approx. 3T parameter model (exact numbers not yet public)

- Context Window: At least 256K tokens, with some API versions reaching 2M tokens

- Multimodal Capabilities: Native support for text, image, and video input
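
To make the context window figures concrete, here is a minimal sketch of a prompt budget check. The two window sizes come from the list above; the 4-characters-per-token ratio, the output reserve, and all function names are assumptions for illustration, not Grok's actual tokenizer or API.

```python
# Rough context-budget check. The chars-per-token ratio is a common rule of
# thumb for English text (an assumption), not Grok's real tokenizer.
CHARS_PER_TOKEN = 4

CONTEXT_WINDOWS = {
    "standard": 256_000,    # "at least 256K tokens"
    "extended": 2_000_000,  # "some API versions reaching 2M tokens"
}


def estimate_tokens(text: str) -> int:
    """Estimate the token count from character length (ceiling division)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits(text: str, window: str = "standard", reserve_for_output: int = 8_000) -> bool:
    """True if the estimated prompt plus an output reserve fits the window."""
    return estimate_tokens(text) + reserve_for_output <= CONTEXT_WINDOWS[window]


if __name__ == "__main__":
    doc = "x" * 1_200_000            # about 300K estimated tokens
    print(fits(doc, "standard"))     # False
    print(fits(doc, "extended"))     # True
```

For real workloads you would swap the heuristic for the provider's own token counter once one is available.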

🎯 Heads up: The Grok 4.20 Beta API isn't open to the public yet. Once xAI officially releases the API interface, APIYI (apiyi.com) will be the first to integrate it. At that point, developers will be able to quickly experience the power of Grok 4.20 through a unified interface.

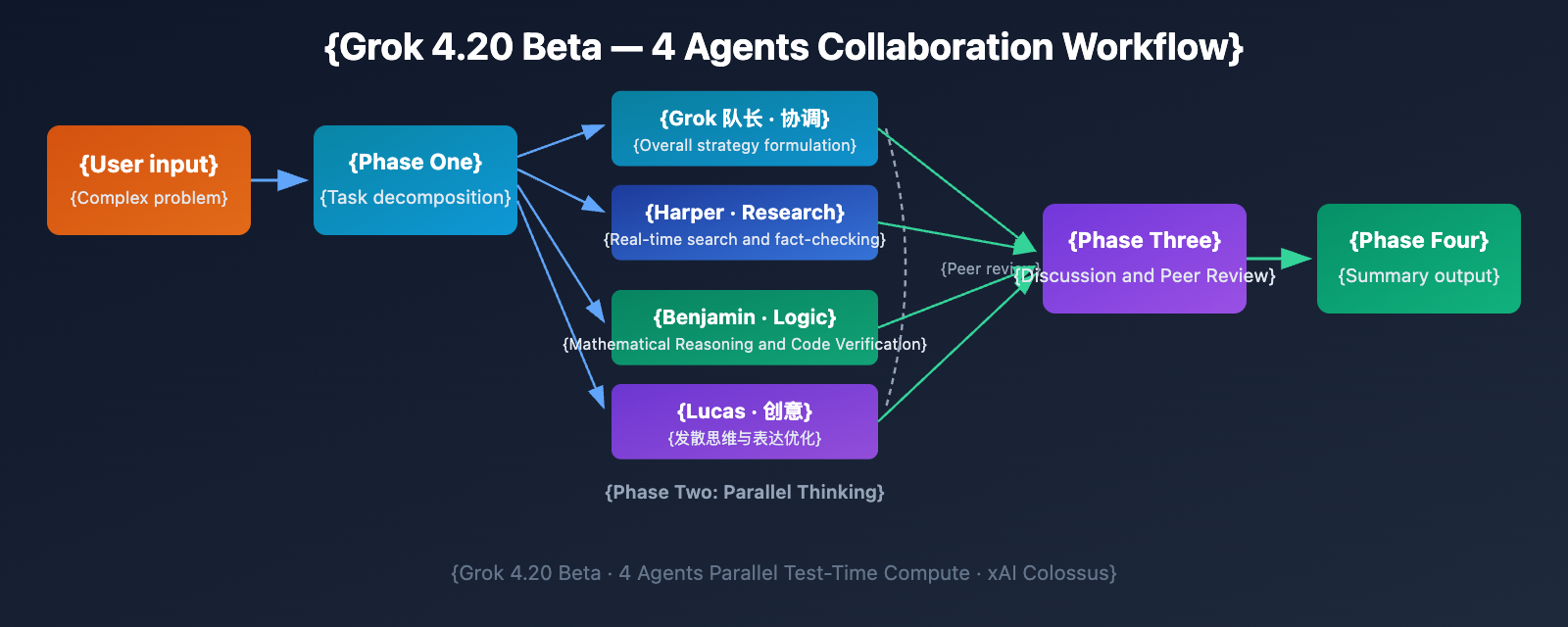

Grok 4.20 Beta 4 Agents Multi-Agent Architecture Explained

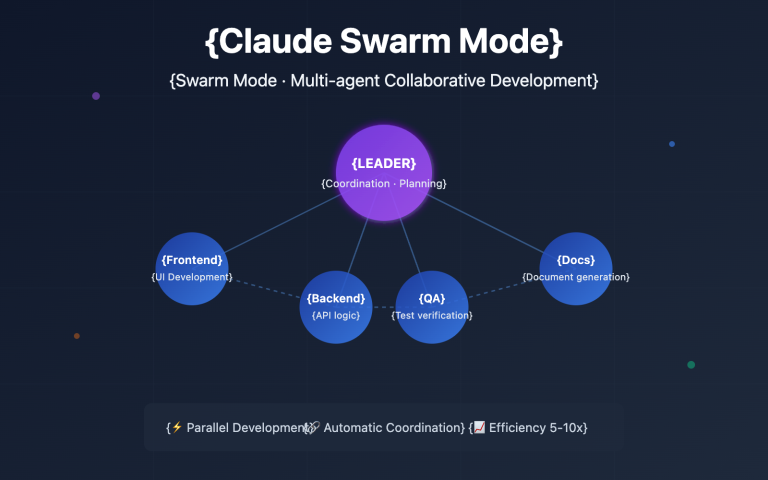

Grok 4.20's most groundbreaking innovation is the 4 Agents multi-agent collaboration system. This isn't just a simple model call; it's four AI agents with distinct professional roles working in parallel in real-time.

Grok 4.20 Beta: The Four Agent Roles

| Agent | Role | Primary Responsibilities | Workflow |
|---|---|---|---|
| Grok (Captain) | Coordinator / Aggregator | Overall strategy formulation, final answer synthesis | Coordinating the other 3 agents |
| Harper | Research & Facts Expert | Real-time search, data verification, evidence integration | Accessing X Firehose real-time data |
| Benjamin | Math/Code/Logic Expert | Rigorous reasoning, programming, computational verification | Mathematical proof-level precision |
| Lucas | Creative & Balance Expert | Divergent thinking, writing optimization, user experience | Creative planning and expression optimization |

Grok 4.20 Beta Multi-Agent Workflow

The collaboration between the 4 agents isn't just a simple "divide and conquer then stitch together" approach; it's a sophisticated real-time collaborative process:

Phase 1: Task Decomposition

After a user inputs a question, Grok the Captain quickly analyzes the nature of the task, breaks it down into multiple sub-tasks, and simultaneously activates Harper, Benjamin, and Lucas.

Phase 2: Parallel Thinking

All four agents analyze the problem from their respective professional perspectives at the same time. Harper searches for relevant data and factual evidence, Benjamin handles logical reasoning and numerical calculations, and Lucas focuses on user experience and creative angles.

Phase 3: Internal Discussion & Peer Review

This is the core innovation of Grok 4.20—the agents engage in multiple rounds of internal discussion. If Benjamin's mathematical conclusion contradicts the facts Harper found, they'll question, verify, and iteratively correct each other.

Phase 4: Aggregated Output

Grok the Captain integrates the conclusions from all agents into a final answer, ensuring the response is accurate, deep, and highly readable.

This mechanism is like having "four experts sitting around a meeting table"—everyone contributes their professional viewpoint, reaches a consensus through discussion, and finally, the moderator provides the conclusion.
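
The four phases can be sketched as a toy orchestration loop. This is not xAI's implementation, which has not been published; only the agent names come from the article. The `Draft` type, the integer confidence scores, the revision rule, and every function name are hypothetical stand-ins chosen to show the control flow: decompose, run in parallel, review in rounds, aggregate.

```python
import asyncio
from dataclasses import dataclass


@dataclass(frozen=True)
class Draft:
    agent: str
    claim: str
    confidence: int  # 0-100, a made-up score for this toy example


# Hypothetical stand-ins for the three specialists; each returns a canned draft.
async def harper(task: str) -> Draft:
    return Draft("Harper", f"evidence for '{task}'", 80)


async def benjamin(task: str) -> Draft:
    return Draft("Benjamin", f"derivation for '{task}'", 90)


async def lucas(task: str) -> Draft:
    return Draft("Lucas", f"framing for '{task}'", 60)


def decompose(question: str) -> dict[str, str]:
    """Phase 1: the captain splits the question into one sub-task per specialist."""
    return {
        "Harper": f"research: {question}",
        "Benjamin": f"verify: {question}",
        "Lucas": f"present: {question}",
    }


def review_round(drafts: list[Draft], threshold: int) -> list[Draft]:
    """Phase 3: drafts scoring below the threshold are challenged and revised."""
    return [
        Draft(d.agent, d.claim + " (revised)", min(100, d.confidence + 20))
        if d.confidence < threshold
        else d
        for d in drafts
    ]


def aggregate(question: str, drafts: list[Draft]) -> str:
    """Phase 4: the captain merges the surviving drafts into one answer."""
    lines = [f"{d.agent}: {d.claim}" for d in drafts]
    return f"Q: {question}\n" + "\n".join(lines)


async def answer(question: str, threshold: int = 70, max_rounds: int = 3) -> str:
    tasks = decompose(question)                          # Phase 1
    drafts = list(await asyncio.gather(                  # Phase 2: run concurrently
        harper(tasks["Harper"]),
        benjamin(tasks["Benjamin"]),
        lucas(tasks["Lucas"]),
    ))
    for _ in range(max_rounds):                          # Phase 3: bounded review rounds
        if all(d.confidence >= threshold for d in drafts):
            break
        drafts = review_round(drafts, threshold)
    return aggregate(question, drafts)                   # Phase 4


if __name__ == "__main__":
    print(asyncio.run(answer("Is the proof sound?")))
```

In this run only Lucas's draft falls below the threshold, so it is the only one marked as revised before the captain aggregates. A real system would replace the canned drafts with model calls and the score check with actual cross-agent critique.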

💡 Technical Insight: The core value of the 4 Agents multi-agent collaboration architecture is that it is designed to reduce hallucinations. A traditional single model is prone to "confidently stating incorrect information," whereas four agents verifying each other have more chances to catch and correct misinformation before the final answer is produced. This is currently one of the most cutting-edge approaches in the AI industry to the hallucination problem.

Grok 4.20 Beta Actual Performance

Grok 4.20 Beta Verified Performance Highlights

Although Grok 4.20 is still in its Beta stage, its actual performance has already been validated across several fields:

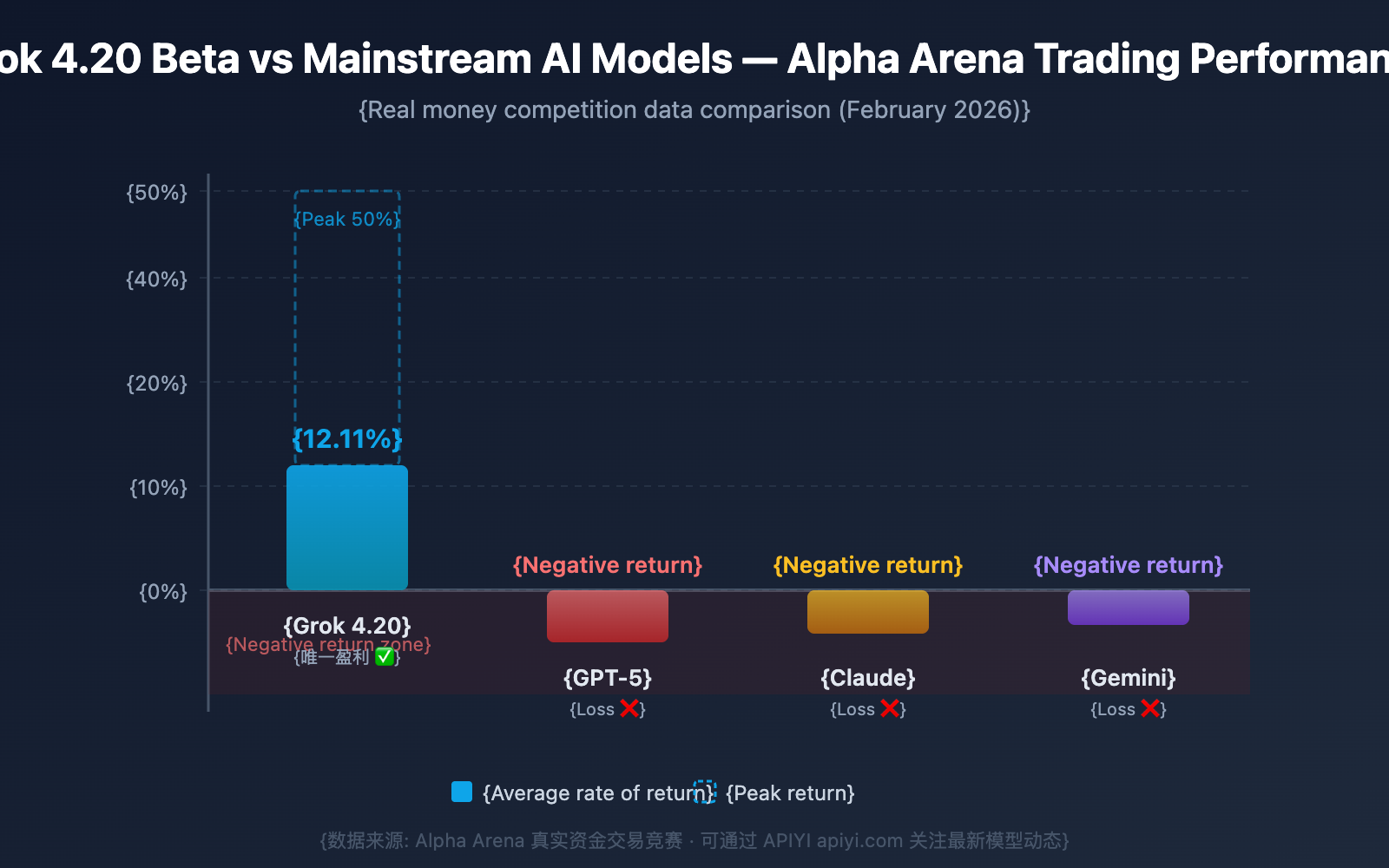

Trading: The Only Profitable AI in Alpha Arena

In the Alpha Arena real-money trading competition, an early checkpoint of Grok 4.20 was the only one to achieve profitability among all participating AI models. Here's the specific data:

| Metric | Grok 4.20 Beta | GPT-5 | Claude | Gemini |
|---|---|---|---|---|
| Average Return | 12.11% (Double digits) | Negative | Negative | Negative |
| Peak Return | Up to 50% | N/A | N/A | N/A |
| P&L Status | ✅ Only Profitable One | ❌ Loss | ❌ Loss | ❌ Loss |
| X Data Integration | ✅ Millisecond sentiment signals | ❌ None | ❌ None | ❌ None |

Grok 4.20's edge in trading scenarios comes from its exclusive real-time data integration with the X platform—direct access to the X Firehose (roughly 68 million English tweets daily), allowing for millisecond-level conversion of market sentiment into price signals.

Mathematical Research: New Findings in Bellman Functions

Mathematician Paata Ivanisvili used an internal Beta version of Grok 4.20 to achieve new mathematical discoveries related to Bellman functions. This indicates that Grok 4.20 already possesses the capability to assist in cutting-edge scientific research.

Engineering & Coding: Public Endorsement from Musk

Elon Musk publicly stated on X that Grok 4.20 is "starting to correctly answer open-ended engineering questions," significantly outperforming the previous Grok 4.1 in engineering and coding tasks.

Grok 4.20 Beta Usage and Mode Comparison

Guide to the Four Grok 4.20 Beta Usage Modes

In the Grok model selector, there are currently 4 different usage modes available, each suited for different scenarios:

| Mode | Underlying Model | Core Features | Best Use Case | Response Speed |
|---|---|---|---|---|
| Fast | Grok 4.1 | Fast single-model inference | Daily chat, simple Q&A | ⚡ Fastest |
| Expert | Grok 4.x Deep Version | Long chain-of-thought single model | Questions requiring serious reasoning | 🔄 Medium |
| Grok 4.20 Beta | 4 Agents Multi-agent | Four experts collaborating in parallel | Complex research, coding, strategy | 🔄 Slower |
| Heavy | Ultra-large Expert Team | Extreme depth reasoning | Extremely difficult problems, academic research | 🐢 Slowest |

How to choose the right Grok 4.20 Beta mode?

- Daily Use: Choose Fast mode. It's quick and more than enough for 80% of daily needs.

- Work Tasks: Choose Expert mode. It's ideal for tasks that need deep thinking but don't require multi-perspective validation.

- Complex Projects: Choose Grok 4.20 Beta (4 Agents) when the problem spans multiple domains or needs analysis from several angles.

- Extreme Challenges: Choose Heavy mode for the toughest academic problems and scenarios requiring absolute depth.
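
The selection guidance above boils down to a short decision rule. The sketch below encodes it as a function; the mode names come from the table, while the three boolean flags and the function name are hypothetical simplifications of what is really a judgment call.

```python
from enum import Enum


class Mode(Enum):
    FAST = "Fast"
    EXPERT = "Expert"
    BETA = "Grok 4.20 Beta"
    HEAVY = "Heavy"


def pick_mode(*, deep_reasoning: bool = False,
              multi_domain: bool = False,
              extreme_difficulty: bool = False) -> Mode:
    """Map task traits to a mode, checking the most demanding trait first."""
    if extreme_difficulty:
        return Mode.HEAVY      # toughest academic problems
    if multi_domain:
        return Mode.BETA       # needs several perspectives (4 Agents)
    if deep_reasoning:
        return Mode.EXPERT     # deep thinking, single perspective
    return Mode.FAST           # everyday chat and simple Q&A


if __name__ == "__main__":
    print(pick_mode().value)                                        # Fast
    print(pick_mode(deep_reasoning=True, multi_domain=True).value)  # Grok 4.20 Beta
```

Checking the most expensive mode first means a task that is both multi-domain and extremely hard lands in Heavy rather than the 4 Agents mode.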

Ideal Use Cases for Grok 4.20 Beta

Based on its 4-agent architecture, Grok 4.20 Beta is particularly well-suited for:

- Complex Programming Tasks: Benjamin handles the code logic, Harper checks the documentation, and Lucas optimizes code readability.

- Business Strategy Analysis: Multi-perspective market analysis where Harper provides data and Benjamin performs quantitative evaluation.

- Academic Research Assistance: Collaborative work involving literature review, mathematical verification, and creative hypothesis generation.

- Long-form Content Creation: Lucas focuses on style and structure, Harper ensures factual accuracy, and Benjamin verifies the logic.

- Investment Decisions: Multi-dimensional market analysis combined with real-time X data.

🚀 Early Access: The API for Grok 4.20 Beta is currently under development. APIYI (apiyi.com) is closely following xAI's API release updates. Once the official interface is open, we'll support it immediately, allowing developers to quickly call Grok 4.20 through a familiar OpenAI-compatible interface.
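
For readers unfamiliar with what "OpenAI-compatible" means in practice, here is a rough sketch of the request shape such an interface expects. It only builds the request and never sends it. The base URL and the model ID are placeholders (the Grok 4.20 API is not open, so no real model ID exists yet); only the `/chat/completions` path, the `model` and `messages` fields, and the Bearer token header follow the well-known OpenAI chat format.

```python
import json
import urllib.request

BASE_URL = "https://api.example.invalid/v1"   # placeholder, not a real endpoint
MODEL_ID = "grok-4.20-beta-placeholder"       # hypothetical, no official ID yet


def build_chat_request(prompt: str, api_key: str,
                       model: str = MODEL_ID) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("Summarize this design doc.", api_key="YOUR_KEY")
    print(req.get_method(), req.full_url)
    print(json.loads(req.data)["model"])
```

Because the payload shape stays the same across compatible providers, switching models is usually a matter of changing the base URL and the model string.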

Grok 4.20 Beta Technical Specs & API Outlook

Grok 4.20 Beta Core Technical Specifications

| Parameter | Value/Description |
|---|---|
| Release Date | Mid-February 2026 (Beta Internal Testing) |
| Developer | xAI (Founded by Elon Musk) |
| Training Cluster | Colossus, 200,000 GPUs |
| Parameter Scale | Approx. 3T parameters (Official figures not yet disclosed) |
| Context Window | 256K ~ 2M tokens |
| Multimodal Support | Text + Image + Video |
| Inference Architecture | 4 Agents parallel multi-agent collaboration |
| Core Training Method | Pre-training scale Reinforcement Learning (RL), 6x efficiency boost |
| Data Features | X Firehose real-time data (Avg. 68 million English tweets daily) |
| Current Availability | SuperGrok ($30/month) / X Premium+ users |
| API Status | Not yet open (Expected to launch later) |

Grok 4.20 Beta API Access Outlook

While the Grok 4.20 API isn't open just yet, we can look at the previously released Grok 4.1 API pricing to see that xAI's rates are quite competitive in the industry:

Grok 4.1 API Reference Pricing:

- Input: $0.20 / million tokens

- Output: $0.50 / million tokens

Because Grok 4.20 is a more advanced version, and the 4 Agents mode adds computational overhead by running four agents in parallel, we expect API pricing to be higher than Grok 4.1's. Exact pricing will have to wait for the official announcement.
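
As a quick way to reason about budgets, the sketch below turns the Grok 4.1 reference rates listed above into a per-request cost estimate. The `multiplier` parameter is purely a what-if knob for exploring higher Grok 4.20 pricing; it is an assumption, not a forecast, and the function name is hypothetical.

```python
from decimal import Decimal

# Grok 4.1 reference rates quoted in this article (USD per million tokens).
INPUT_USD_PER_M = Decimal("0.20")
OUTPUT_USD_PER_M = Decimal("0.50")
MILLION = Decimal(1_000_000)


def estimate_cost(input_tokens: int, output_tokens: int,
                  multiplier: Decimal = Decimal(1)) -> Decimal:
    """Estimate the USD cost of one request at the reference rates.

    `multiplier` scales the result for what-if scenarios (an assumption).
    """
    base = (Decimal(input_tokens) * INPUT_USD_PER_M
            + Decimal(output_tokens) * OUTPUT_USD_PER_M) / MILLION
    return base * multiplier


if __name__ == "__main__":
    # 50,000 input tokens and 2,000 output tokens at Grok 4.1 rates.
    print(estimate_cost(50_000, 2_000))               # equals Decimal("0.011")
    # Same request under a hypothetical 4x price, for illustration only.
    print(estimate_cost(50_000, 2_000, Decimal(4)))   # equals Decimal("0.044")
```

`Decimal` is used instead of floats so that small per-request amounts add up exactly when summed over many calls.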

💰 Cost Optimization Tip: For developers planning to use the Grok API, accessing it through a unified platform like APIYI (apiyi.com) usually offers more flexible billing. These platforms support a unified interface for multiple mainstream Large Language Models, making it easy to quickly switch and compare costs between Grok, GPT, Claude, and others.

FAQ

Q1: Compared to GPT-5 and Claude Opus 4, what’s the core advantage of Grok 4.20 Beta?

The key differentiator for Grok 4.20 Beta lies in its 4 Agents multi-agent collaborative architecture and real-time X platform data integration. While GPT-5 and Claude Opus 4 still largely rely on single-model inference (even with internal Chain-of-Thought optimizations), Grok 4.20 uses four specialized agents working in parallel and verifying each other. This gives it a unique edge in complex tasks and scenarios requiring multi-perspective analysis. Especially in cases involving real-time info—like market analysis or public opinion monitoring—Grok's X data integration is something other models just can't replicate.

Q2: How can regular users try out Grok 4.20 Beta?

Currently, you'll need a SuperGrok subscription (about $30/month) or X Premium+ to see the Grok 4.20 Beta option in the model selector on grok.com. For developers, the API isn't open yet. It's a good idea to keep an eye on updates from APIYI (apiyi.com); as soon as xAI opens the Grok 4.20 API, the platform will likely integrate it immediately, allowing you to call it via a standard OpenAI-compatible interface.

Q3: What’s the difference between Grok 4.20 Beta’s 4 Agents and standard multi-model AI calls?

The fundamental difference is real-time internal discussion. Standard multi-model calls (like using code to call several APIs separately and then summarizing) are just "answering individually and then being manually aggregated." In contrast, Grok 4.20's 4 Agents engage in multiple rounds of internal discussion, questioning, and verification. They iterate and correct each other to output a high-quality answer based on "team consensus." This deep collaboration mechanism can't be achieved through simple API orchestration.

Q4: What is Grok 4.20 Beta best used for?

It's best suited for scenarios requiring deep, multi-perspective analysis: complex programming (Benjamin handles the code logic, Harper checks the documentation, Lucas improves readability, and Grok coordinates the result), investment research (data collection + quantitative analysis + risk assessment), academic papers (literature review + mathematical verification + creative hypothesis), and business strategy (market analysis + competitor comparison + plan design). For simple daily Q&A, it's better to use Fast mode for quicker response times.

Summary

Key takeaways for Grok 4.20 Beta:

- 4-Agent Multi-Agent Collaboration: It's not just a single model thinking; it's four specialized Agents (Captain Grok, Harper Research, Benjamin Logic, Lucas Creative) collaborating in parallel in real-time. This represents the cutting edge of multi-agent reasoning architecture in the AI industry today.

- Proven Real-World Performance: It was the only model to turn a profit in the Alpha Arena real-money competition (averaging a 12.11% return) and has already assisted in making new discoveries in frontier mathematics research.

- Real-time X Data Integration: With exclusive access to the X Firehose (roughly 68 million English tweets daily), it holds an irreplaceable advantage in scenarios involving real-time information.

- 200,000 GPU Training Foundation: Built on the Colossus supercluster with pre-training scale RL (Reinforcement Learning), providing massive foundational reasoning capabilities.

- API Coming Soon: Currently limited to SuperGrok and X Premium+ users, but once the API is released, it'll unlock significant value for broader applications.

Grok 4.20 Beta represents a major step in AI's evolution from "going solo" to "teamwork." For users and developers who need to tackle complex, multi-dimensional problems, this is a model worth watching closely.

We recommend following APIYI (apiyi.com) for Grok 4.20 API launch notifications. The platform will be among the first to integrate it, providing a unified API interface for developers to quickly integrate and test.

📚 References

⚠️ Link Format Note: All external links use the `Resource Name: domain.com` format. They're easy to copy but not clickable, which helps prevent SEO weight loss.

- xAI Official Release Notes: Developer version update logs
  - Link: `docs.x.ai/developers/release-notes`
  - Description: Official xAI model release and update history.

- xAI Official News: Research, product, and company updates
  - Link: `x.ai/news`
  - Description: Get the latest official announcements regarding the Grok series.

- xAI Model Pricing: Official pricing for API calls
  - Link: `docs.x.ai/developers/models`
  - Description: View detailed pricing for various Grok API versions.

- Grok Subscription Plans: Feature comparison between SuperGrok and Premium+
  - Link: `grok.com/plans`
  - Description: Understand the features and pricing of different subscription tiers.

Author: APIYI Team

Technical Discussion: Feel free to discuss your experience with Grok 4.20 Beta in the comments. For more AI model news and API integration solutions, visit the APIYI (apiyi.com) technical community.