Seedance 2.0 vs Sora 2: Which one should you pick? This is one of the most common questions AI video creators and developers are asking in 2026. In this post, we'll dive deep into these two top-tier video generation models across 8 core dimensions to help you make a clear choice based on your actual needs.

Core Value: By the end of this article, you'll clearly understand the technical strengths and best use cases for both Seedance 2.0 and Sora 2, so you can stop stressing over which one to use.

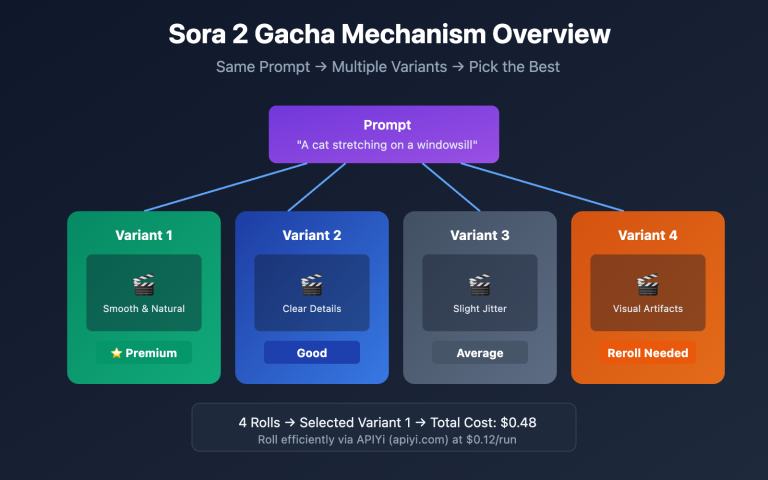

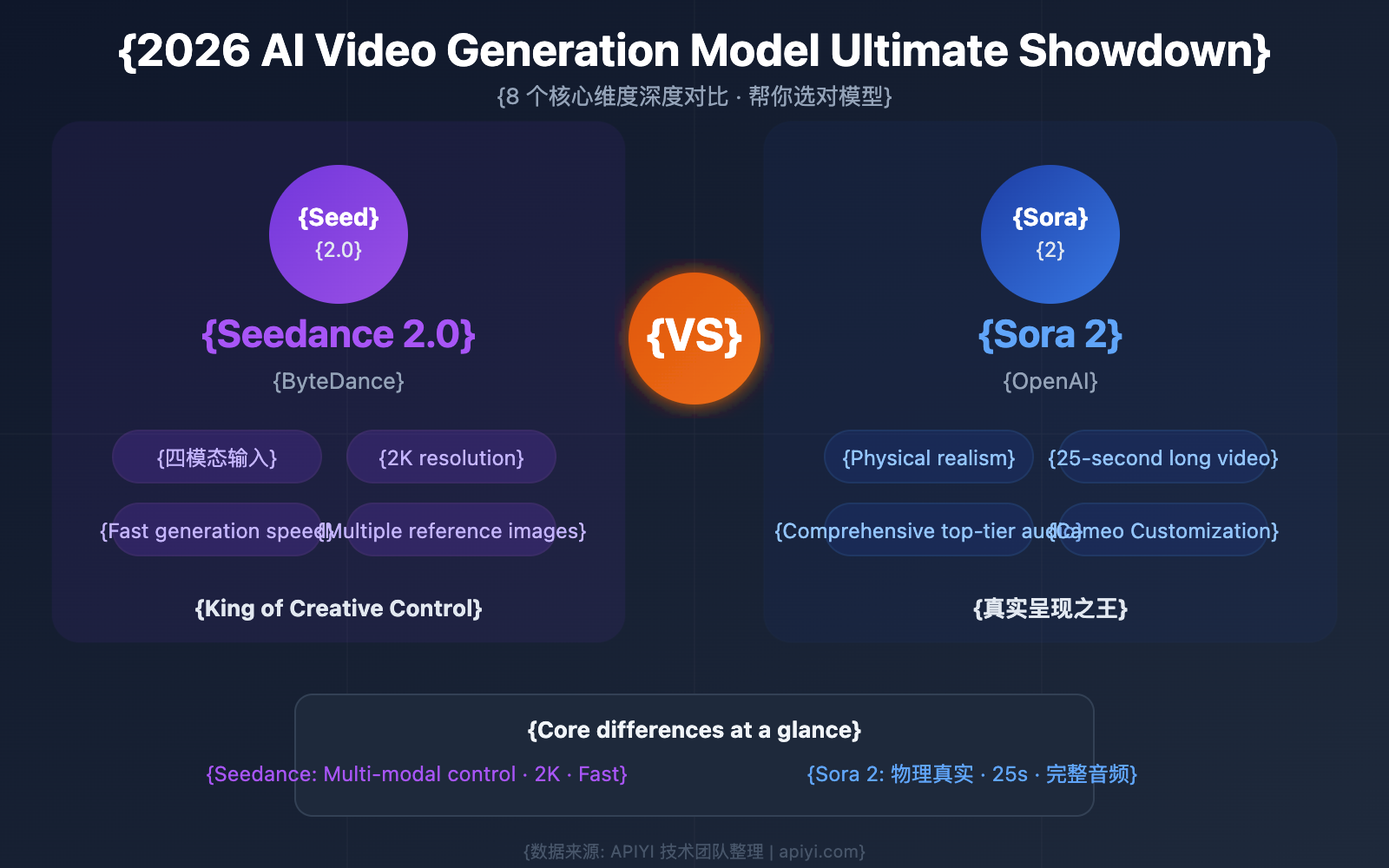

Seedance 2.0 vs Sora 2: Basic Info Comparison

Before we dive into the details, let's look at the fundamentals of these two models.

| Comparison Item | Seedance 2.0 | Sora 2 |
|---|---|---|
| Developer | ByteDance | OpenAI |
| Release Date | February 2026 | September 2025 (Sora 2 Pro updates followed) |
| Model Positioning | Multi-modal controllable video generation | Physical realism video generation |
| Max Resolution | 2K | 1080p (Pro supports 1792×1024) |
| Video Duration | 4-15 seconds | 5-25 seconds |
| Input Modalities | Text + Image + Video + Audio (Quad-modal) | Text + Image (Dual-modal) |
| Native Audio | Supported (Dialogue + SFX + Ambient) | Supported (Dialogue + SFX + Ambient + Music) |
| API Status | Expected launch on February 24, 2026 | Online |
| Primary Platforms | Dreamina, Volcengine | OpenAI Official, ChatGPT |
| Available Platforms | Volcengine, APIYI (apiyi.com) | OpenAI API, APIYI (apiyi.com) |

🎯 Quick Take: If you need multi-asset mixed creation and 2K resolution, go with Seedance 2.0. If you're after ultimate physical realism and long-form video storytelling, Sora 2 is your best bet.

8 Core Differences: Seedance 2.0 vs. Sora 2

Difference 1: Output Resolution Comparison

Resolution is one of the key benchmarks for any video generation model.

| Resolution Specs | Seedance 2.0 | Sora 2 / Sora 2 Pro |
|---|---|---|
| Standard Resolution | 1080p | 1080p |
| Max Resolution | 2K (approx. 2048×1152) | 1080p (Pro: 1792×1024) |
| Supported Aspect Ratios | 16:9, 9:16, 4:3, 3:4, 21:9, 1:1 | 16:9, 9:16, 1:1 |
| Visual Texture | Cinematic aesthetics, vibrant colors | Cinematic realism, refined lighting |

Conclusion: Seedance 2.0 takes the lead in resolution, offering native 2K output and a wider variety of aspect ratio options. While Sora 2 maxes out at 1080p, it remains top-tier in terms of lighting details and overall visual texture.

If you're creating content for large-scale displays, high-definition advertising, or print materials, Seedance 2.0’s 2K resolution offers a clear advantage.
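To put the resolution gap in concrete terms, here is a minimal sketch that compares per-frame pixel counts for the figures quoted in the table. The 2048×1152 value is the article's approximation for 2K, and 1920×1080 is the standard Full HD frame size; the dictionary labels are illustrative only.

```python
# Frame sizes (width, height). The 2K figure is the approximate value
# quoted in the table; 1920x1080 is standard Full HD.
RESOLUTIONS = {
    "Seedance 2.0 (2K)": (2048, 1152),
    "Full HD 1080p": (1920, 1080),
    "Sora 2 Pro": (1792, 1024),
}


def pixel_count(width: int, height: int) -> int:
    """Total number of pixels in a single frame."""
    return width * height


baseline = pixel_count(*RESOLUTIONS["Full HD 1080p"])
for name, (w, h) in RESOLUTIONS.items():
    pixels = pixel_count(w, h)
    print(f"{name}: {w}x{h} = {pixels:,} px ({pixels / baseline:.0%} of 1080p)")
```

Under these assumptions, the 2K frame carries roughly 14% more pixels than a Full HD frame.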

Difference 2: Video Duration Comparison

Video length directly impacts a model's storytelling capabilities.

Sora 2 holds a significant advantage in this category:

- Sora 2: Supports 5–25 seconds, roughly a 4x increase over Sora 1’s 6-second limit.

- Seedance 2.0: Supports 4–15 seconds, making it ideal for short-form video and clip production.

For ads or short films that require a complete narrative arc, Sora 2’s 25-second duration gives you more creative breathing room. Meanwhile, Seedance 2.0’s 4–15 second range is better suited for social media clips and product showcases.
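The practical effect of the duration caps shows up when a project is longer than one clip. The sketch below estimates how many separate generations are needed to cover a target running time, using the maximum durations quoted above. The model identifiers are the ones used in this article's API example; the 60-second target is an arbitrary illustration.

```python
import math

# Maximum single-clip durations in seconds, as quoted in this comparison.
MAX_CLIP_SECONDS = {"seedance-2.0": 15, "sora-2": 25}


def clips_needed(target_seconds: float, model: str) -> int:
    """Minimum number of clips required to cover target_seconds."""
    if target_seconds <= 0:
        raise ValueError("target_seconds must be positive")
    return math.ceil(target_seconds / MAX_CLIP_SECONDS[model])


for model in MAX_CLIP_SECONDS:
    print(f"{model}: {clips_needed(60, model)} clips for a 60-second piece")
```

Fewer clips means fewer cuts to stitch and fewer chances for continuity drift between generations.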

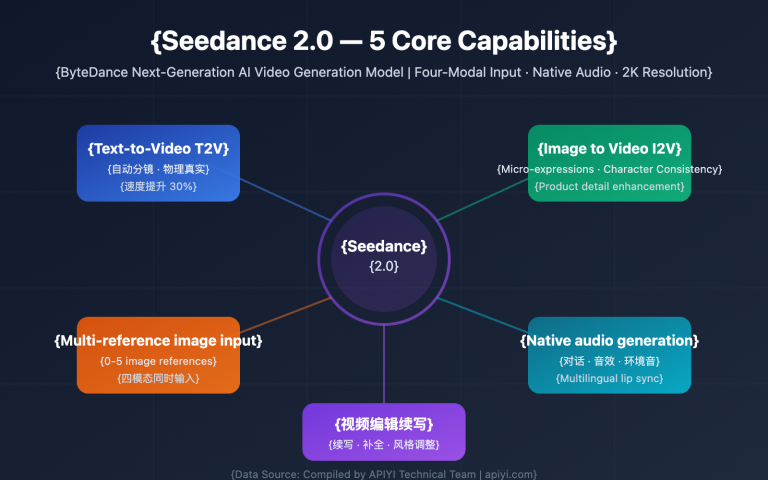

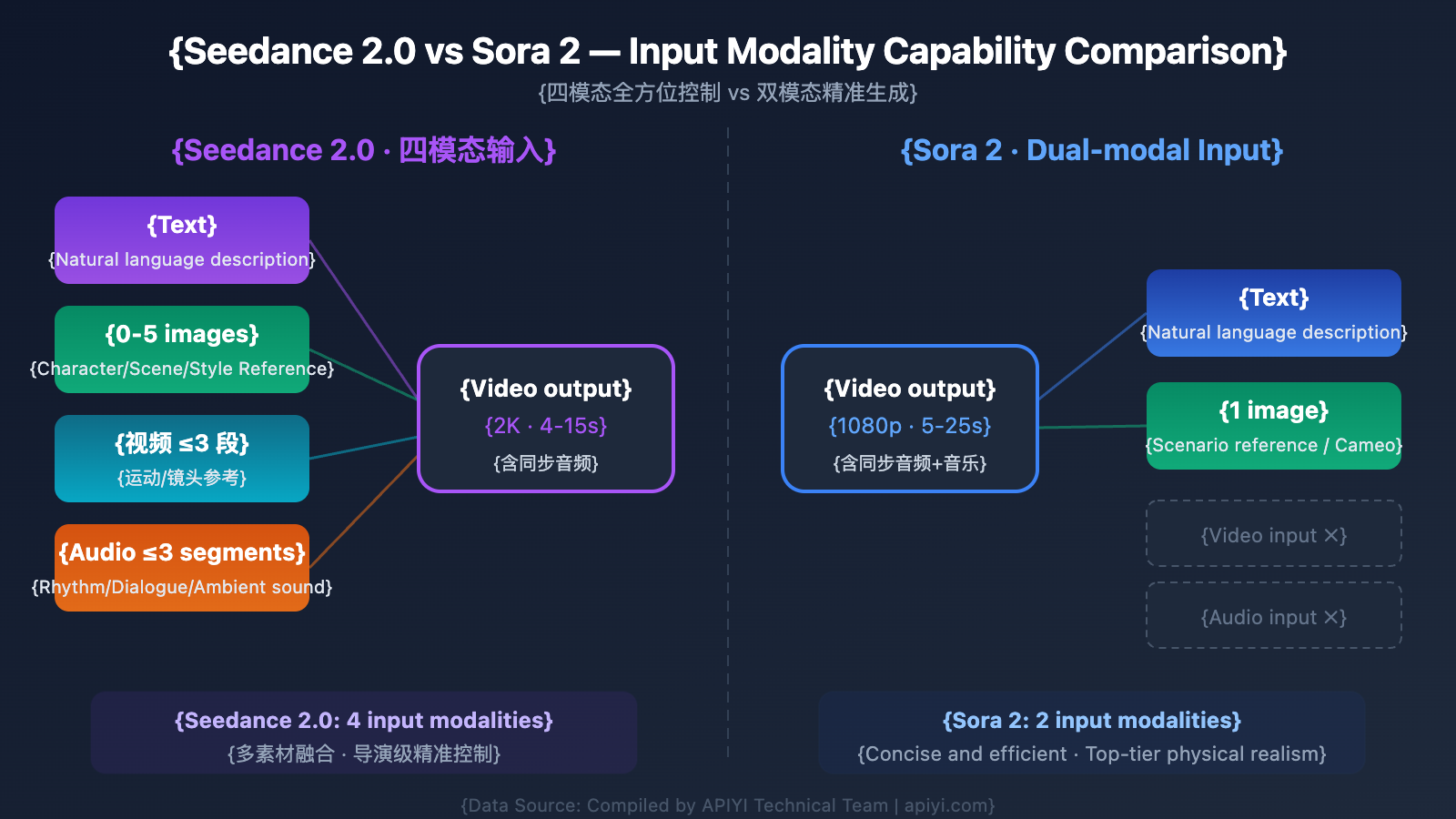

Difference 3: Multimodal Input Comparison

This is where Seedance 2.0 shows its most unique strengths.

| Input Capability | Seedance 2.0 | Sora 2 |
|---|---|---|
| Text Input | ✅ Natural language prompts | ✅ Natural language prompts |
| Image Input | ✅ 0-5 images (up to 9) | ✅ Single image |
| Video Input | ✅ Up to 3 clips (total ≤15s) | ❌ Not supported |
| Audio Input | ✅ Up to 3 clips (MP3, ≤15s) | ❌ Not supported |
| Multi-Ref Image Search | ✅ Multi-image feature fusion | ❌ Not supported |
| Character Cameo | ❌ Not supported | ✅ Supports face customization |

Seedance 2.0’s quad-modal input system means you can simultaneously provide a face photo, a dance video, and a musical beat, and the model will fuse these elements into one coherent video. This "director-level control" is currently unmatched by other models.

Sora 2’s Cameo feature, on the other hand, allows you to upload your own photo so the AI can "place" you into the generated video, enabling personalized character integration.
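Before sending a multi-reference request, it can help to check the asset set against the limits in the table. The following is a hypothetical pre-flight check, not part of any official SDK. It assumes the 15-second cap applies to the combined length of each media type, and it leaves the image cap configurable because the table quotes both 5 and 9.

```python
from dataclasses import dataclass, field


@dataclass
class ReferenceSet:
    """Hypothetical description of the assets attached to one request."""
    image_count: int = 0
    video_seconds: list[float] = field(default_factory=list)
    audio_seconds: list[float] = field(default_factory=list)


def validate_references(refs: ReferenceSet, max_images: int = 5) -> list[str]:
    """Return a list of problems; an empty list means the set fits the limits."""
    problems = []
    if refs.image_count > max_images:
        problems.append(f"too many images: {refs.image_count} > {max_images}")
    for kind, clips in (("video", refs.video_seconds), ("audio", refs.audio_seconds)):
        if len(clips) > 3:
            problems.append(f"too many {kind} clips: {len(clips)} > 3")
        # Assumption: the 15-second cap is on the combined length per media type.
        if sum(clips) > 15:
            problems.append(f"{kind} total {sum(clips):g}s exceeds 15s")
    return problems


print(validate_references(ReferenceSet(image_count=2, video_seconds=[8.0], audio_seconds=[10.0])))
print(validate_references(ReferenceSet(image_count=6, video_seconds=[10, 10])))
```

Catching an oversized asset set locally avoids spending a generation request on an input the service would reject.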

Difference 4: Physical Realism Comparison

Physical realism is a critical metric for evaluating the quality of video generation models.

Sora 2 is the undisputed gold standard in this dimension:

- Sora 2: Offers the highest precision in simulating physical laws like gravity, momentum, collisions, fluids, and light refraction. When you need a basketball to bounce realistically, water to flow naturally, or fabric to flutter in the wind, Sora 2 is the most convincing.

- Seedance 2.0: Shows significant improvement over version 1.5, reaching excellent levels in gravity, momentum, and causality. However, it still lags slightly behind Sora 2 in highly complex physical interaction scenarios.

In real-world tests, scenes generated by Seedance 2.0, such as falling cherry blossoms or swimming koi, are already realistic and fluid, with natural trajectories and accurate lighting. For extreme scenarios involving multi-object collisions or fluid simulations, however, Sora 2’s physics simulation still holds the edge.

Difference 5: Native Audio Comparison

Both models support native audio generation, but they have different focuses.

| Audio Capability | Seedance 2.0 | Sora 2 |
|---|---|---|
| Dialogue/Speech | ✅ Multilingual (CN/EN/ES, etc.) | ✅ Multilingual |
| Lip-Sync | ✅ Precise synchronization | ✅ Pro version is more precise |
| Ambient Sound Effects | ✅ Auto-matches scene | ✅ Auto-matches scene |
| Action Sound Effects | ✅ Synchronized generation | ✅ Synchronized generation |
| Background Music | ❌ Not supported | ✅ Supports generation |
| Audio Reference Input | ✅ Supported (Exclusive) | ❌ Not supported |
| Multi-Subject Voice Ref | ✅ Supports 2+ subjects | ❌ Not supported |
| Overall Audio Quality | Excellent | Top-tier |

Key Difference: Seedance 2.0 supports audio reference input. You can upload a real voice clip or a musical rhythm, and the model will generate the video's audio based on that reference. This is incredibly valuable for commercial dubbing and maintaining brand audio consistency.

Sora 2 excels in overall audio quality, particularly its ability to generate background music. It can produce dialogue, sound effects, and a score all in a single inference pass, significantly reducing post-production work.

Difference 6: Multi-Shot Storytelling Comparison

Multi-shot capability determines how well a model can generate long-form, coherent content.

- Seedance 2.0: Features a built-in automatic storyboarding system that can break down a narrative prompt into multiple coherent shots. Character appearance, clothing, and settings remain highly consistent across shots.

- Sora 2: Also supports multi-scene inference with enhanced narrative continuity. It performs at a top-tier level in temporal consistency, ensuring characters don't "change faces" between shots.

Both perform exceptionally well here, but their approaches differ. Seedance 2.0 relies more on reference materials to ensure consistency (e.g., providing a character reference image), while Sora 2 relies more on the model’s internal understanding to maintain it.

Difference 7: Generation Speed Comparison

Generation speed directly affects workflow efficiency, which is crucial for teams producing content at scale.

| Speed Metric | Seedance 2.0 | Sora 2 |
|---|---|---|
| 5s Video | < 60 seconds | Slower (varies by load) |
| Speed Increase | 30% faster than v1.5 | – |
| Short Clip Gen | As fast as 2-5s (short clips) | Moderate speed |
| Batch Gen Efficiency | High | Moderate |
| Underlying Infrastructure | Volcengine Infrastructure | OpenAI Infrastructure |

Seedance 2.0 has a clear edge in generation speed, thanks to optimizations within ByteDance’s Volcengine computing infrastructure. For workflows requiring rapid iteration and batch production, this speed gap can significantly impact productivity.
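For batch planning, a rough upper bound on wall-clock time can be worked out from the per-clip figure. This sketch assumes clips are generated in fixed-size waves and uses the 60-second ceiling quoted above for a 5-second Seedance 2.0 clip. The concurrency value is a hypothetical parameter, since the article gives no rate limits for either service.

```python
import math


def batch_wall_time(num_clips: int, seconds_per_clip: float, concurrency: int = 1) -> float:
    """Upper-bound wall-clock seconds when clips run in waves of `concurrency`."""
    if num_clips < 0:
        raise ValueError("num_clips must not be negative")
    if concurrency < 1:
        raise ValueError("concurrency must be at least 1")
    return math.ceil(num_clips / concurrency) * seconds_per_clip


# 100 five-second clips, at most 60 s each, 4 requests in flight at a time.
total_seconds = batch_wall_time(100, 60, concurrency=4)
print(f"Upper bound: {total_seconds / 60:.0f} minutes")
```

Plugging in a measured per-clip time for each model gives a like-for-like estimate for your own workload.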

Difference 8: API Pricing & Availability Comparison

API pricing and availability are major considerations for developers choosing a platform.

| Pricing & Availability | Seedance 2.0 | Sora 2 / Sora 2 Pro |
|---|---|---|
| API Status | Expected launch Feb 24 | Live |
| Pricing Model | Per video duration/resolution | Per second ($0.10-$0.50/sec) |
| 720p Unit Price | TBD | $0.30/sec |
| 1080p Unit Price | TBD | $0.50/sec (Pro) |
| 10s Video Cost | TBD | $3.00 – $5.00 |
| Free Trial | Free on Jimeng website | Requires Plus ($20/mo) or Pro ($200/mo) |
| 1.x Compatibility | Highly compatible, low migration cost | – |

💰 Cost Tip: Sora 2’s official API pricing is relatively high (approx. $5 for a 10-second 1080p video). For budget-sensitive projects, you can access both models via the APIYI (apiyi.com) platform, which offers more flexible billing options suitable for small to medium teams looking to control costs.
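The per-second pricing in the table translates directly into a budget estimate. Below is a minimal sketch using only the two Sora 2 rates quoted above; the tier labels are illustrative names rather than official identifiers, and Seedance 2.0 is left out because its pricing is still listed as TBD.

```python
# Per-second Sora 2 prices in USD, as quoted in the pricing table.
# The tier keys are illustrative labels, not official API identifiers.
SORA_PRICE_PER_SECOND = {"720p": 0.30, "1080p-pro": 0.50}


def estimate_cost(duration_s: float, tier: str, num_videos: int = 1) -> float:
    """Estimated cost in USD for num_videos clips of duration_s seconds each."""
    if duration_s < 0 or num_videos < 0:
        raise ValueError("duration_s and num_videos must not be negative")
    return round(duration_s * SORA_PRICE_PER_SECOND[tier] * num_videos, 2)


print(estimate_cost(10, "720p"))           # one 10-second 720p clip
print(estimate_cost(10, "1080p-pro"))      # one 10-second 1080p Pro clip
print(estimate_cost(8, "1080p-pro", 50))   # a batch of fifty 8-second Pro clips
```

The first two calls reproduce the $3.00 to $5.00 range shown for a 10-second video.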

Seedance 2.0 vs Sora 2: Comprehensive Performance Comparison

Based on the analysis across these 8 dimensions, each model leads in four categories:

Where Seedance 2.0 Leads:

- Output Resolution — Native 2K, the highest in its class.

- Multimodal Input — A unique four-modal input system, offering a distinct advantage.

- Generation Speed — Generates a 5-second video in under 60 seconds.

- Accessibility — Free to use on the Jimeng (Dreamina) website.

Where Sora 2 Leads:

- Physical Realism — Widely recognized as the industry benchmark for physics simulation.

- Video Duration — Up to 25 seconds, providing much more room for storytelling.

- Native Audio Quality — Includes high-quality background music generation.

- API Ecosystem Maturity — Already live with comprehensive, well-structured documentation.

🎯 Tech Selection Tip: Both models have their strengths, and the right choice depends on your specific use case. We recommend testing them out on the APIYI (apiyi.com) platform. It supports API calls for both Seedance 2.0 and Sora 2, allowing you to compare their results side-by-side using a single interface.

Seedance 2.0 vs Sora 2: Scenario Selection Guide

5 Scenarios to Choose Seedance 2.0

Scenario 1: Batch Production of E-commerce Product Videos

Seedance 2.0's ability to handle multiple reference images (0-5), combined with its enhanced product detail rendering, allows it to accurately recreate product textures, logos, and packaging. Its 2K resolution meets the high-definition requirements of e-commerce platforms, and its high-speed generation is perfect for high-volume output.

Scenario 2: Creative Videos with Mixed Assets

Say you've got a dance video, a piece of music, and a photo of a character, and you want to blend them into something entirely new. With its four-modal input system, Seedance 2.0 is currently the only one of the two models that can pull off this kind of complex composite creation.

Scenario 3: Brand Audio Consistency

Need a character in your video to use a specific brand voice? Seedance 2.0 supports audio reference input, allowing you to upload real voice samples to ensure the generated video's audio style stays perfectly aligned with your brand identity.

Scenario 4: Rapid Social Media Short Video Output

Seedance 2.0's 4 to 15 second range is a natural match for platforms like TikTok and Instagram Reels. Combined with its fast generation speed, it's ideal for operations teams that need to iterate on content quickly.

Scenario 5: Digital Humans and Virtual Anchors

Seedance 2.0's micro-expression optimization and multi-language lip-syncing (Chinese/English/Spanish), paired with its multi-subject real-voice reference feature, make it the ideal choice for creating digital human videos.

5 Scenarios to Choose Sora 2

Scenario 1: High-Quality Ads and Brand Promos

When physical realism and lighting quality are your top priorities, Sora 2's physics simulation delivers cinematic-level credibility for product showcases and scene dramatizations. Its 25-second duration is plenty of time to complete a full advertising narrative.

Scenario 2: Character-Driven Storytelling

Sora 2's "Cameo" feature can naturally integrate real photos into video scenes. Combined with its top-tier temporal consistency, it's great for character-driven content like personalized stories or brand ambassador videos.

Scenario 3: Final Cuts Requiring Full Soundtracks

If your video needs a complete audio layer (dialogue, sound effects, and background music), Sora 2 is the only one of the two models that can generate all three in a single inference pass. This will significantly cut down your post-production workload.

Scenario 4: Educational and Scientific Content

Need to demonstrate physical phenomena, chemical reactions, or mechanical movements? Sora 2's precise physics simulation capabilities make scientific content more accurate and believable. The 25-second length also allows for more explanatory depth.

Scenario 5: Projects Requiring Long-Form Narrative

For projects like mini-dramas or creative shorts that need continuous shots longer than 15 seconds, Sora 2's 25-second duration and strong narrative coherence make it the better choice.
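The scenario guide above can be condensed into a simple rule-based chooser. This is a sketch of the article's recommendations, not an official selection tool: the function name, parameters, and return strings are all hypothetical, and the rules encode only the capability differences listed in the comparison tables.

```python
def recommend_model(
    duration_s: float = 5,
    reference_images: int = 0,
    needs_video_or_audio_ref: bool = False,
    needs_2k: bool = False,
    needs_background_music: bool = False,
    needs_cameo: bool = False,
) -> str:
    """Map project requirements to the model this comparison favors."""
    # Requirements that only Seedance 2.0 covers according to the tables.
    seedance_only = needs_video_or_audio_ref or reference_images > 1 or needs_2k
    # Requirements that only Sora 2 covers according to the tables.
    sora_only = duration_s > 15 or needs_background_music or needs_cameo

    if seedance_only and sora_only:
        return "split the job: no single model covers every requirement"
    if seedance_only:
        return "seedance-2.0"
    if sora_only:
        return "sora-2"
    return "either"


print(recommend_model(reference_images=3, needs_2k=True))
print(recommend_model(duration_s=20, needs_background_music=True))
print(recommend_model())
```

When the requirements conflict, the usual workaround is the mixed workflow described in the FAQ: generate different parts of the project with different models.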

Seedance 2.0 vs Sora 2: Quick API Integration

Both models can be integrated into your workflow via API calls. Here’s a quick code example comparing the output of both models:

```python
import requests

# Call both models through the unified APIYI interface
API_BASE = "https://api.apiyi.com/v1"
API_KEY = "your-api-key"


def generate_video(model, prompt, duration=5):
    """Unified interface to call different video generation models."""
    response = requests.post(
        f"{API_BASE}/video/generations",
        headers={
            "Authorization": f"Bearer {API_KEY}",
            "Content-Type": "application/json",
        },
        json={
            "model": model,
            "prompt": prompt,
            "duration": duration,
            "audio": True,
        },
        timeout=60,
    )
    # Fail loudly on HTTP errors instead of parsing an error body
    response.raise_for_status()
    return response.json()


# Call both models with the same prompt to compare results
prompt = "A white cat stretching on a windowsill, with sunlight filtering through the curtains onto its fur"

seedance_result = generate_video("seedance-2.0", prompt, duration=8)
sora_result = generate_video("sora-2", prompt, duration=8)

print(f"Seedance 2.0: {seedance_result['data']['url']}")
print(f"Sora 2: {sora_result['data']['url']}")
```
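The example above sends the two requests one after the other. If you want them in flight at the same time, one option is a thread pool. The sketch below is an assumption-laden illustration: `compare_models` is a hypothetical helper, and `fake_generate` is a stand-in that mimics the response shape used above so the code runs without network access or an API key.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def compare_models(
    generate: Callable[[str, str], dict], models: list[str], prompt: str
) -> dict[str, dict]:
    """Run generate(model, prompt) for every model in parallel threads."""
    with ThreadPoolExecutor(max_workers=len(models)) as pool:
        futures = {model: pool.submit(generate, model, prompt) for model in models}
        # result() blocks until that model's call finishes and re-raises its errors.
        return {model: future.result() for model, future in futures.items()}


def fake_generate(model: str, prompt: str) -> dict:
    """Offline stand-in for a real API call; returns a placeholder URL."""
    return {"data": {"url": f"https://example.invalid/{model}.mp4"}}


results = compare_models(fake_generate, ["seedance-2.0", "sora-2"], "A white cat stretching")
for model, result in results.items():
    print(f"{model}: {result['data']['url']}")
```

To use it against the real endpoint, pass the `generate_video` function from the example above in place of `fake_generate`.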

Seedance 2.0 multi-reference call code:

```python
import base64

import requests

API_BASE = "https://api.apiyi.com/v1"
API_KEY = "your-api-key"


def encode_file(path):
    """Read a file and return its contents as a base64 string."""
    with open(path, "rb") as f:
        return base64.b64encode(f.read()).decode()


def seedance_multi_ref(image_paths, video_path=None, audio_path=None, prompt=""):
    """
    Seedance 2.0 four-modal input call example.

    Takes advantage of its multi-image + video + audio reference inputs.
    """
    # Add reference images (0-5 images)
    references = [{"type": "image", "data": encode_file(p)} for p in image_paths]

    # Add reference video (optional)
    if video_path:
        references.append({"type": "video", "data": encode_file(video_path)})

    # Add reference audio (optional)
    if audio_path:
        references.append({"type": "audio", "data": encode_file(audio_path)})

    response = requests.post(
        f"{API_BASE}/video/generations",
        headers={
            "Authorization": f"Bearer {API_KEY}",
            "Content-Type": "application/json",
        },
        json={
            "model": "seedance-2.0",
            "prompt": prompt,
            "references": references,
            "resolution": "2k",
            "audio": True,
            "aspect_ratio": "16:9",
            "duration": 10,
        },
        timeout=60,
    )
    # Fail loudly on HTTP errors instead of parsing an error body
    response.raise_for_status()
    return response.json()


# Usage example: Face + Dance Video + Music → Blended Video
result = seedance_multi_ref(
    image_paths=["character_face.jpg", "outfit_ref.jpg"],
    video_path="dance_motion.mp4",
    audio_path="music_beat.mp3",
    prompt="The character dances to the rhythm of the music, with smooth and natural movements",
)

print(f"Video generated: {result['data']['url']}")
```

🚀 Quick Start: We recommend using the APIYI (apiyi.com) platform to access both Seedance 2.0 and Sora 2. A single API Key lets you call both models, making it easy to compare generation effects for the same prompt without having to register separate accounts for Volcengine and OpenAI.

Seedance 2.0 vs Sora 2 FAQ

Q1: Which one has better image quality, Seedance 2.0 or Sora 2?

They have different focuses when it comes to image quality. Seedance 2.0 offers higher resolution (2K vs 1080p) with vibrant colors and a strong aesthetic style. Sora 2 excels in lighting details and physical realism, making its output look more like actual cinematography. If you're looking for something "beautiful," both are excellent. If you're chasing "realism," Sora 2 has a slight edge; if you need "high-def," Seedance 2.0 takes the lead. We recommend generating videos with the same theme on the APIYI (apiyi.com) platform to see the difference for yourself.

Q2: Can I use both models at the same time?

Absolutely. Many professional teams mix and match based on their project needs—using Seedance 2.0 for templated assets and multi-material creations, and Sora 2 for high-end content that requires top-tier physical realism. With APIYI's (apiyi.com) unified interface, you can flexibly switch between both models within the same project.

Q3: Which model is better for e-commerce videos?

For e-commerce scenarios, we recommend Seedance 2.0. Here's why: it supports multiple product images as reference inputs (0-5 images), its 2K high-definition output meets platform requirements, it's great at preserving product details, and its fast generation speed is perfect for batch production. Sora 2 can also handle e-commerce, but it doesn't support multi-reference inputs and typically has a higher cost per generation.

Q4: Will the Seedance 2.0 API be cheaper than Sora 2?

Based on ByteDance's previous pricing strategies (like Seedance 1.5 Pro), Seedance 2.0's API pricing is expected to be lower than Sora 2's official rates ($0.30-$0.50/second). Specific pricing will be announced after the February 24th launch. Follow APIYI (apiyi.com) to get the latest pricing information and early access discounts.

Q5: I don’t know how to code. Can I still use these models?

Yes, you can. Seedance 2.0 can be used for free online via the Dreamina (Jimeng) website (jimeng.jianying.com) without writing a single line of code. Sora 2 is available through the ChatGPT Plus/Pro subscription directly in your web browser. Both provide user-friendly visual interfaces.

Seedance 2.0 vs Sora 2 Comparison Summary

After a deep dive across eight dimensions, the positioning and strengths of these two models are very clear:

Seedance 2.0 Core Strengths: Four-modal input system (exclusive), native 2K resolution (highest in its class), multi-reference image search (0-5 images), faster generation speeds, and a lower barrier to entry (free experience on Dreamina).

Sora 2 Core Strengths: The gold standard for physical realism, 25-second long-form storytelling, top-tier integrated audio quality (including background music), Cameo character customization, and a mature API ecosystem.

The Bottom Line: Seedance 2.0 is the best choice for "creative control," while Sora 2 is the best choice for "realistic presentation."

Which model you choose depends on your actual needs. We recommend using the APIYI (apiyi.com) platform to access both models through a single interface. Compare the results side-by-side and let the data help you make the right decision.

This article was written by the APIYI technical team. We stay on top of the latest trends in AI video generation. For more model comparisons and tutorials, visit the APIYI (apiyi.com) Help Center.
