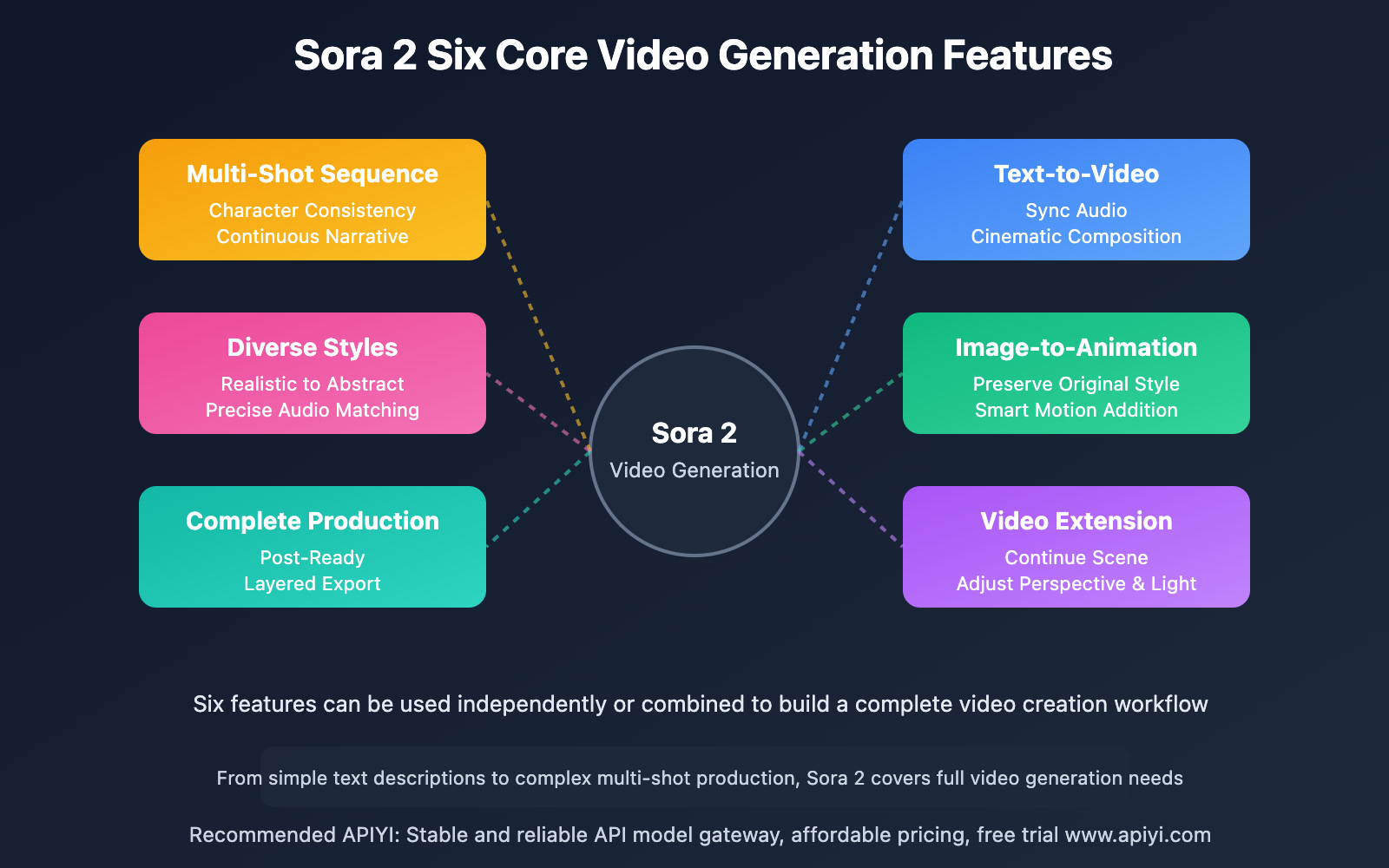

OpenAI's Sora 2 video generation model goes beyond better video quality: it provides a full set of video creation tools. Sora 2 can generate videos directly from text, animate still images, and build complex multi-shot sequences, which covers the core needs of modern content creation. This article analyzes its six core capabilities in depth and offers practical techniques you can apply directly.

Sora 2 Video Generation Features Overview

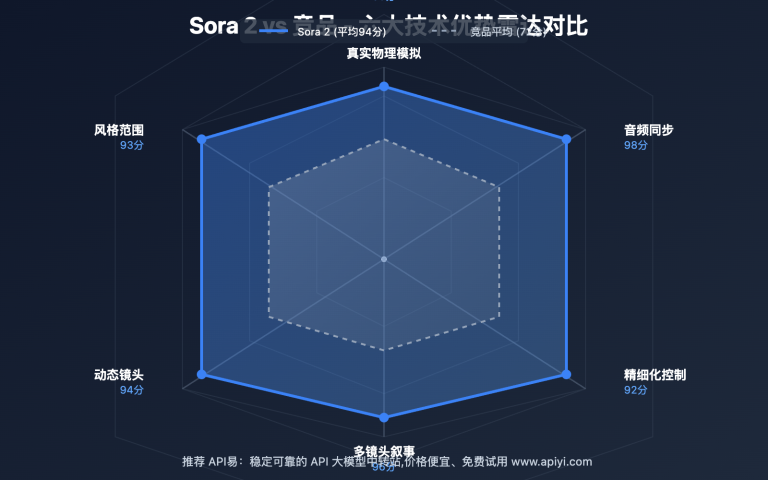

Sora 2's video generation features fall into six core capabilities:

| Feature Module | Core Capability | Typical Application Scenarios |
|---|---|---|
| Text-to-Video | Create complete videos directly from text descriptions | Ad campaigns, concept previews, educational demonstrations |
| Image-to-Animation | Animate static images | Product showcases, artistic creations, social media |
| Video Extension & Editing | Continue scenes, adjust perspectives and lighting | Film sequels, content supplements, creative variations |
| Multi-Shot Sequences | Generate consistent continuous shots | Short films, storytelling, brand videos |
| Diverse Styles | Full style coverage from realistic to abstract | Art films, stylized ads, experimental imagery |
| Complete Audiovisual Works | Post-production-ready works with audio | Quick prototypes, direct publishing, content iteration |

These features are not isolated. You can combine them into a complete video creation workflow.

Feature 1: Text-to-High-Fidelity Video – The Foundation of Sora 2 Video Generation

Core Capabilities Explained

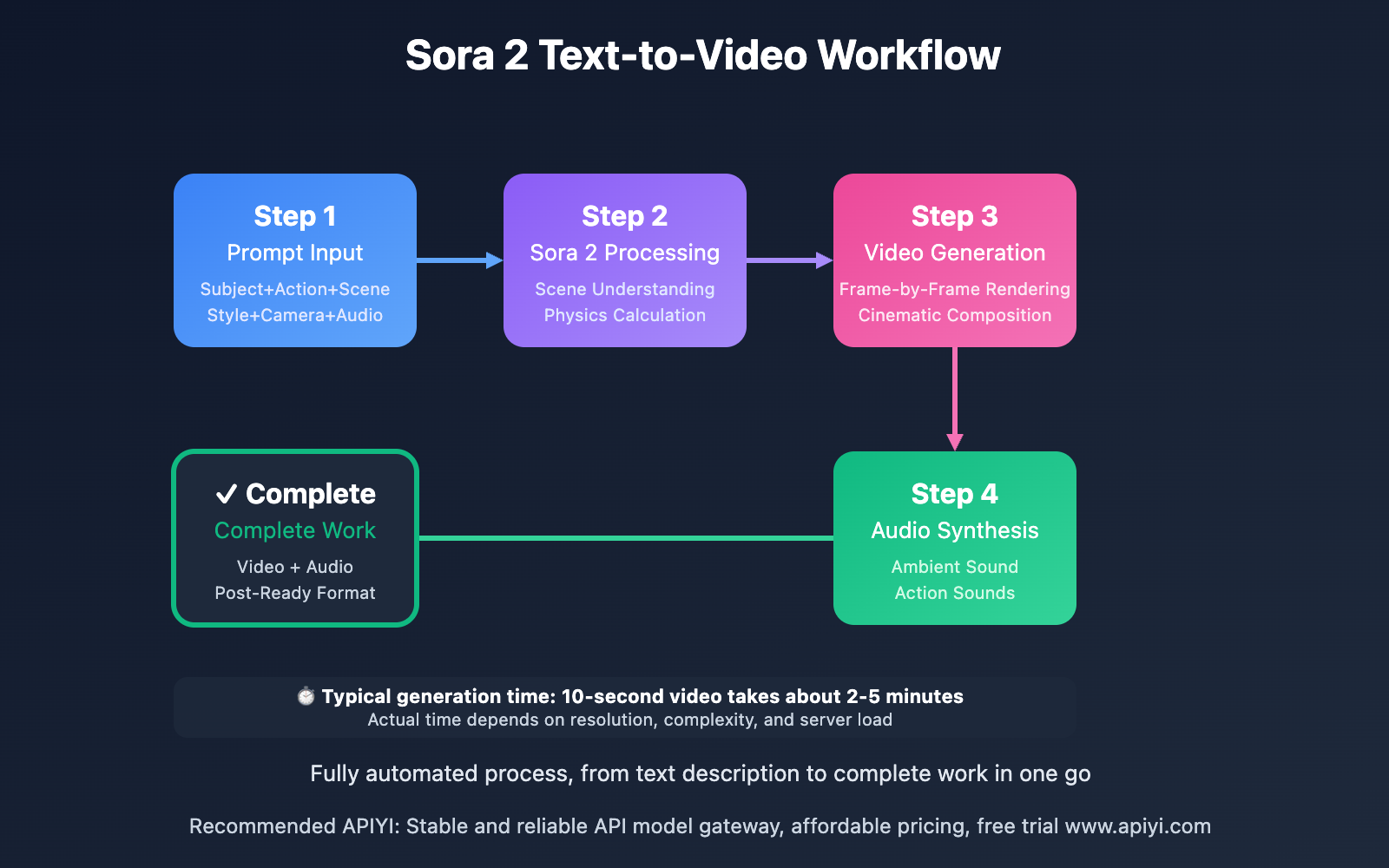

Sora 2's text-to-video feature supports three key capabilities:

- Synchronized Audio Generation – Simultaneous creation of video visuals and audio, including ambient sounds and action sound effects

- Realistic Physical Motion – Motion trajectories and dynamic effects that conform to physical laws

- Cinematic Composition – Automatic application of professional techniques like depth of field, camera movement, and lighting

Prompt Writing Techniques

To fully leverage Sora 2's video generation capabilities, prompts should include the following elements:

Complete Prompt Structure:

[Subject Description] + [Action Details] + [Scene Environment] + [Visual Style] + [Camera Language] + [Audio Requirements]
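You can assemble the six-part structure above mechanically. The sketch below is a hypothetical helper. `build_sora_prompt` and the component names are illustrative and not part of any SDK. It joins whichever components you supply in the recommended order. It also enforces the roughly 500-character guideline that this article's FAQ recommends.

```python
from typing import Optional

# Order follows the recommended prompt structure:
# subject + action + scene + style + camera + audio
PROMPT_ORDER = ("subject", "action", "scene", "style", "camera", "audio")


def build_sora_prompt(max_chars: int = 500, **parts: Optional[str]) -> str:
    """Join the supplied prompt components in the recommended order.

    Hypothetical helper: unknown component names raise ValueError so typos
    are caught early, and empty or missing components are skipped.
    """
    unknown = set(parts) - set(PROMPT_ORDER)
    if unknown:
        raise ValueError(f"Unknown prompt components: {sorted(unknown)}")
    pieces = [(parts.get(key) or "").strip() for key in PROMPT_ORDER]
    prompt = ", ".join(piece for piece in pieces if piece)
    if len(prompt) > max_chars:
        raise ValueError(f"Prompt is {len(prompt)} characters; keep it under {max_chars}")
    return prompt


prompt = build_sora_prompt(
    subject="A premium smartphone",
    action="slowly rotates while the screen lights up",
    scene="on a minimalist white pedestal",
    style="soft side lighting, professional product photography",
    camera="360-degree orbital camera movement",
    audio="subtle mechanical rotation and screen activation sounds",
)
```

Keyword arguments keep each component labeled at the call site. A fixed order keeps prompts for related shots structurally alike.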

Practical Case 1: Product Showcase Video

Prompt:

A premium smartphone slowly rotates on display, screen lights up showing a colorful interface, placed on a minimalist white background pedestal, soft studio lighting from the side, creating a professional product photography atmosphere, using 360-degree orbital camera movement, accompanied by subtle mechanical rotation sounds and electronic screen activation sounds

Parameter Recommendations:

- duration: 10 seconds

- resolution: 1080p

- style: photorealistic

- camera_movement: orbit

Practical Case 2: Educational Demonstration Video

Prompt:

A transparent globe slowly rotates against a deep blue background, golden light from one side illuminating continental outlines, white latitude and longitude grid lines rotate accordingly, camera gradually pushes from distance to medium shot, background music features soothing tech-electronic sounds with space atmosphere audio

Parameter Recommendations:

- duration: 15 seconds

- resolution: 720p

- style: semi-realistic

- emphasis: lighting, atmosphere

API Call Example

Calling the Sora 2 text-to-video feature through the APIYI platform:

```python
import requests


def generate_sora2_video(prompt, duration=10, resolution="1080p"):
    """
    Create a video using the Sora 2 text-to-video feature
    """
    api_key = "your_apiyi_key"
    endpoint = "https://api.apiyi.com/v1/sora/generate"

    payload = {
        "model": "sora-2",
        "prompt": prompt,
        "duration": duration,
        "resolution": resolution,
        "audio": True,  # Enable audio generation
        "quality": "high",
    }
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }

    # Generation can take a while, so set an explicit timeout instead of waiting forever
    response = requests.post(endpoint, json=payload, headers=headers, timeout=300)
    if response.status_code == 200:
        result = response.json()
        return {
            "video_url": result["video_url"],
            "audio_url": result["audio_url"],
            "duration": result["duration"],
            "cost": result["cost"],
        }
    raise RuntimeError(f"Generation failed: {response.text}")


# Usage example
result = generate_sora2_video(
    prompt="An orange cat chasing butterflies in the snow, sunlight glistening on the snow surface, blurred winter forest in the background",
    duration=8,
    resolution="720p",
)
print(f"Video generated successfully: {result['video_url']}")
print(f"Cost: ¥{result['cost']}")
```

🎯 Usage Recommendation: For projects that generate videos at scale, we recommend managing them centrally through the APIYI (apiyi.com) platform. It provides a unified API and cost-control tools, which can reduce development and maintenance effort. It also supports batch task processing and asynchronous callbacks.
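The recommendation above mentions asynchronous processing. Video generation services commonly work as a job that you submit and then poll until it finishes. The sketch below is a generic polling loop with exponential backoff. It assumes you write a `fetch_status(job_id)` callable against your provider's status endpoint, and that this callable returns a dict with a `status` field. The values `"completed"` and `"failed"` are assumptions, not documented APIYI behavior. The loop treats any other value as still in progress.

```python
import time
from typing import Callable, Dict


def wait_for_job(
    fetch_status: Callable[[str], Dict],
    job_id: str,
    initial_delay: float = 2.0,
    max_delay: float = 30.0,
    timeout: float = 600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Dict:
    """Poll fetch_status(job_id) until the job completes, fails, or times out.

    Assumed status values: "completed" and "failed"; anything else means the
    job is still running. The delay doubles after each poll, capped at max_delay.
    """
    deadline = time.monotonic() + timeout
    delay = initial_delay
    while True:
        status = fetch_status(job_id)
        state = status.get("status")
        if state == "completed":
            return status
        if state == "failed":
            raise RuntimeError(f"Job {job_id} failed: {status.get('error')}")
        # Give up if the next wait would overshoot the overall deadline
        if time.monotonic() + delay > deadline:
            raise TimeoutError(f"Job {job_id} did not finish within {timeout} s")
        sleep(delay)
        delay = min(delay * 2, max_delay)
```

In practice, `fetch_status` would wrap an HTTP GET to whatever job-status URL your provider documents. The injectable `sleep` parameter lets you test the loop without real waiting.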

Feature 2: Image-to-Animation – Bringing Static Content to Life

Core Capabilities Explained

Sora 2's image-to-animation feature can:

- Intelligently Recognize Image Content – Understand subject, background, and depth relationships

- Preserve Original Style – Maintain image color tone, texture, and artistic style

- Add Natural Motion – Add reasonable dynamic effects based on the scene

- Generate Environmental Atmosphere – Add dynamic elements like particles, lighting, and depth of field

Application Scenarios

Scenario 1: Dynamic Product Display

Input: Flat product photos

Animation Effect: Add 360-degree rotation + light flow

Application: E-commerce product pages, social media ads

Scenario 2: Bringing Artwork to Life

Input: Illustrations, posters, concept art

Animation Effect: Character blinking, background floating, light effects flickering

Application: NFT artworks, digital art exhibitions

Scenario 3: Historical Photo Restoration

Input: Black and white or vintage photos

Animation Effect: Add a natural breathing effect and subtle floating motion

Application: Documentaries, historical educational content

API Implementation Code

```python
import mimetypes
import os

import requests


def animate_image_with_sora2(image_path, animation_prompt, duration=5):
    """
    Convert a static image into an animation using Sora 2
    """
    api_key = "your_apiyi_key"
    endpoint = "https://api.apiyi.com/v1/sora/image-to-video"

    # Read the image and detect its MIME type instead of always assuming JPEG
    with open(image_path, "rb") as f:
        image_data = f.read()
    mime_type = mimetypes.guess_type(image_path)[0] or "image/jpeg"
    files = {
        "image": (os.path.basename(image_path), image_data, mime_type),
    }
    data = {
        "animation_prompt": animation_prompt,
        "duration": duration,
        "preserve_style": True,  # Preserve the original style
        "motion_intensity": "medium",  # Motion intensity: low/medium/high
    }
    # requests sets the multipart Content-Type header (with boundary) itself
    headers = {"Authorization": f"Bearer {api_key}"}

    response = requests.post(endpoint, files=files, data=data, headers=headers, timeout=300)
    response.raise_for_status()
    return response.json()


# Usage example
result = animate_image_with_sora2(
    image_path="product.jpg",
    animation_prompt="Product slowly rotates, lights shine from different angles, highlighting metallic texture",
    duration=6,
)
```

Parameter Optimization Recommendations

| Parameter | Recommended Value | Notes |
|---|---|---|
| motion_intensity | low to medium | Values that are too high can damage the original style |
| duration | 3-8 seconds | Longer clips may produce unnatural loops |
| preserve_style | True | Keeps the original artistic style |
| loop_seamless | True | Generates a seamless looping animation |
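You can enforce the table's recommendations before sending a request. The following is a hypothetical helper. `build_animation_params` is illustrative and not an APIYI function. It builds the animation parameters used in `animate_image_with_sora2()` above and flags values outside the recommended ranges. The 3 to 8 second window and the low/medium intensity preference come from the table. The helper passes `loop_seamless` through exactly as the table names it, although the article does not confirm that the endpoint accepts it.

```python
ALLOWED_INTENSITIES = ("low", "medium", "high")
RECOMMENDED_INTENSITIES = ("low", "medium")


def build_animation_params(duration=5, motion_intensity="medium",
                           preserve_style=True, loop_seamless=True, strict=True):
    """Build image-to-animation parameters and check them against the table above.

    Returns (params, warnings). With strict=True, any value the table advises
    against raises ValueError instead of producing a warning.
    """
    if motion_intensity not in ALLOWED_INTENSITIES:
        raise ValueError(f"motion_intensity must be one of {ALLOWED_INTENSITIES}")
    warnings = []
    if not 3 <= duration <= 8:
        warnings.append("duration outside the recommended 3-8 seconds")
    if motion_intensity not in RECOMMENDED_INTENSITIES:
        warnings.append("high motion intensity may damage the original style")
    if not preserve_style:
        warnings.append("preserve_style=False may lose the original artistic style")
    if strict and warnings:
        raise ValueError("; ".join(warnings))
    params = {
        "duration": duration,
        "motion_intensity": motion_intensity,
        "preserve_style": preserve_style,
        "loop_seamless": loop_seamless,
    }
    return params, warnings
```

Use `strict=False` for experiments where you want to exceed the recommendations deliberately but still see what you are deviating from.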

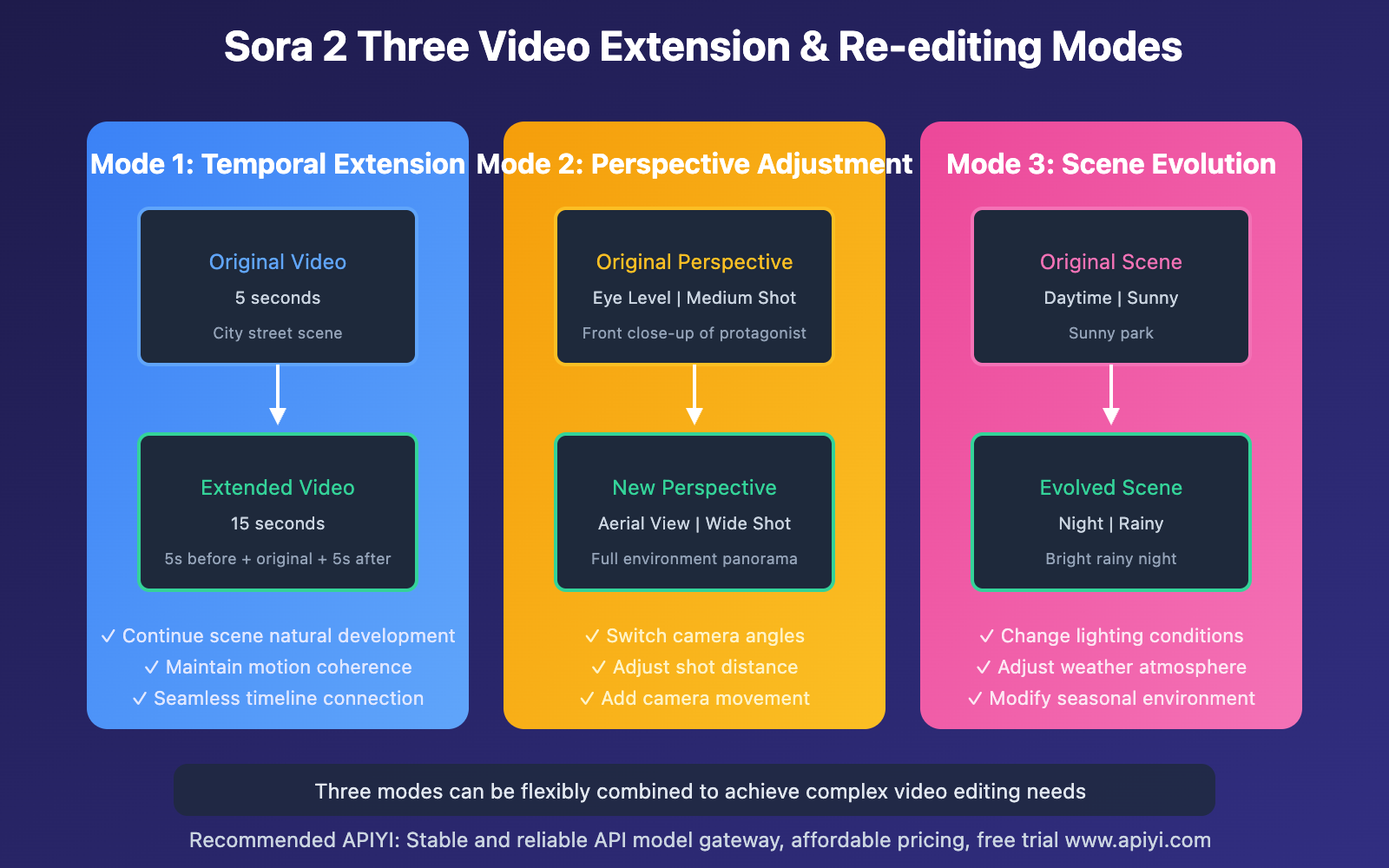

Feature 3: Video Extension & Re-editing – Advanced Applications of Sora 2 Video Generation

Core Capabilities Explained

Sora 2's video extension feature supports three editing modes:

Mode 1: Temporal Extension

- Add content before or after the video

- Continue the natural development of scenes

- Maintain motion coherence

Mode 2: Perspective Adjustment

- Change camera angles and height

- Switch between wide shot/medium shot/close-up

- Add camera movement effects

Mode 3: Scene Evolution

- Change lighting conditions (day → night)

- Adjust weather atmosphere (sunny → rainy)

- Modify seasonal environment (summer → winter)

Practical Case: Video Sequel Generation

```python
import requests


def extend_video_with_sora2(original_video, extension_prompt, direction="after"):
    """
    Extend an existing video using Sora 2
    direction: "before" adds content before the video | "after" adds content after it
    """
    if direction not in ("before", "after"):
        raise ValueError('direction must be "before" or "after"')

    api_key = "your_apiyi_key"
    endpoint = "https://api.apiyi.com/v1/sora/extend-video"

    payload = {
        "video_url": original_video,
        "extension_prompt": extension_prompt,
        "direction": direction,
        "extension_duration": 5,  # Extension duration (seconds)
        "maintain_consistency": True,  # Maintain style consistency
    }
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }

    response = requests.post(endpoint, json=payload, headers=headers, timeout=300)
    response.raise_for_status()
    return response.json()


# Usage example
extended_video = extend_video_with_sora2(
    original_video="https://storage.example.com/original.mp4",
    extension_prompt="Camera slowly pulls back, revealing the entire city's night skyline as lights gradually turn on",
    direction="after",
)
```
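Each extension call in the example adds a fixed `extension_duration` of 5 seconds. Reaching a longer target length therefore means chaining calls, and the number of calls is a ceiling division. The hypothetical helper below computes that plan. It is plain arithmetic, makes no API calls, and assumes every call adds exactly `step_seconds`.

```python
import math


def plan_extensions(current_seconds, target_seconds, step_seconds=5):
    """Return the cumulative video length after each chained extension call.

    Assumes each call adds exactly step_seconds; the last clip may overshoot
    the target slightly, which can be trimmed in post-production.
    """
    if step_seconds <= 0:
        raise ValueError("step_seconds must be positive")
    if target_seconds <= current_seconds:
        return []
    calls = math.ceil((target_seconds - current_seconds) / step_seconds)
    return [current_seconds + step_seconds * (i + 1) for i in range(calls)]
```

For example, `plan_extensions(8, 30)` returns `[13, 18, 23, 28, 33]`. That is five calls, with 3 seconds to trim at the end.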

Perspective Adjustment Implementation

```python
import requests


def change_video_perspective(video_url, new_perspective):
    """
    Adjust the perspective of a video using Sora 2
    """
    api_key = "your_apiyi_key"
    endpoint = "https://api.apiyi.com/v1/sora/re-edit"

    payload = {
        "video_url": video_url,
        "edit_type": "perspective_change",
        "new_perspective": new_perspective,
        "transition_style": "smooth",  # smooth/cut/fade
    }
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }

    response = requests.post(endpoint, json=payload, headers=headers, timeout=300)
    response.raise_for_status()
    return response.json()


# Switch to an aerial view
result = change_video_perspective(
    video_url="https://storage.example.com/original_scene.mp4",  # A URL, not a local file name
    new_perspective="aerial_view_60deg",  # 60-degree aerial view
)
```

🎯 Cost Optimization Recommendation: Video extension and re-editing are typically billed by input and output video duration. The APIYI (apiyi.com) platform offers batch processing discounts. For projects that need many video variants, we recommend the asynchronous batch interfaces to reduce overall costs.

Feature 4: Multi-Shot Sequence Generation – Continuous Narrative with Visual Consistency

Core Capabilities Explained

Sora 2's multi-shot sequence feature can ensure:

- Character Consistency – Same character maintains appearance across different shots

- Scene Coherence – Unified environment, props, and lighting

- Narrative Logic – Reasonable temporal and spatial relationships between shots

- Style Uniformity – Consistent color tone, texture, and visual language

Sequence Generation Strategies

Method 1: Storyboard-Based Generation

```python
import requests


def generate_multi_shot_sequence(shot_list, reference_image_url=None):
    """
    Generate a multi-shot sequence from a storyboard script
    """
    api_key = "your_apiyi_key"
    endpoint = "https://api.apiyi.com/v1/sora/sequence"

    payload = {
        "shots": shot_list,
        "maintain_consistency": {
            "characters": True,
            "environment": True,
            "lighting": True,
            "color_grade": True,
        },
        "transition_style": "cut",  # cut/fade/dissolve
    }
    # Character reference image. A JSON body cannot carry a local file, so this
    # assumes the endpoint accepts a hosted image URL rather than a path like "character_ref.jpg"
    if reference_image_url:
        payload["reference_image"] = reference_image_url

    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }

    response = requests.post(endpoint, json=payload, headers=headers, timeout=300)
    response.raise_for_status()
    return response.json()


# Usage example: product introduction short film
shots = [
    {
        "shot_number": 1,
        "duration": 3,
        "prompt": "Wide shot: Modern office panorama, sunlight streaming through floor-to-ceiling windows",
        "camera": "wide_shot",
    },
    {
        "shot_number": 2,
        "duration": 4,
        "prompt": "Medium shot: Young designer working on a laptop at a desk",
        "camera": "medium_shot",
    },
    {
        "shot_number": 3,
        "duration": 3,
        "prompt": "Close-up: Design software interface on screen, mouse in operation",
        "camera": "close_up",
    },
    {
        "shot_number": 4,
        "duration": 4,
        "prompt": "Medium shot: Designer smiles with satisfaction and closes the laptop",
        "camera": "medium_shot",
    },
]

result = generate_multi_shot_sequence(shots)
print(f"Sequence generation complete: {len(result['shots'])} shots in total")
```
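The sequence endpoint receives the whole storyboard at once, so catching mistakes locally before submission is cheaper. The sketch below checks a shot list in the format used above. It verifies that `shot_number` values are sequential, that every shot has a positive duration and a prompt, and that each `camera` value belongs to a known set. The allowed camera names are only the ones this example uses, not a documented list. `validate_shot_list` is a hypothetical helper.

```python
KNOWN_CAMERAS = {"wide_shot", "medium_shot", "close_up"}


def validate_shot_list(shots, max_total_seconds=None):
    """Return a list of problems found in a storyboard shot list (empty if valid).

    shot_number and camera are optional; when present they must be sequential
    (starting at 1) and one of KNOWN_CAMERAS, respectively.
    """
    problems = []
    for expected, shot in enumerate(shots, start=1):
        number = shot.get("shot_number")
        if number is not None and number != expected:
            problems.append(f"shot {expected}: shot_number is {number}")
        if shot.get("duration", 0) <= 0:
            problems.append(f"shot {expected}: duration must be positive")
        if not shot.get("prompt"):
            problems.append(f"shot {expected}: missing prompt")
        camera = shot.get("camera")
        if camera is not None and camera not in KNOWN_CAMERAS:
            problems.append(f"shot {expected}: unknown camera '{camera}'")
    total = sum(shot.get("duration", 0) for shot in shots)
    if max_total_seconds is not None and total > max_total_seconds:
        problems.append(f"total duration {total} s exceeds {max_total_seconds} s")
    return problems
```

For the four-shot list above, the helper returns an empty list, and the shots total 14 seconds.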

Method 2: Character Reference Image Binding

To ensure character consistency across different shots, you can provide reference images:

```python
import requests


def create_character_consistent_shots(character_ref, scene_descriptions):
    """
    Generate consistent shots based on a character reference image
    """
    api_key = "your_apiyi_key"
    endpoint = "https://api.apiyi.com/v1/sora/character-sequence"

    # Upload the character reference image
    with open(character_ref, "rb") as f:
        ref_data = f.read()
    files = {
        "character_reference": ("reference.jpg", ref_data, "image/jpeg"),
    }
    # Sent as multipart form fields: requests encodes a list as repeated "scenes"
    # fields and converts booleans/floats to strings ("False", "0.9")
    data = {
        "scenes": scene_descriptions,
        "character_consistency_weight": 0.9,  # 0-1, consistency weight
        "allow_costume_change": False,  # Whether costume changes are allowed
    }
    headers = {"Authorization": f"Bearer {api_key}"}

    response = requests.post(endpoint, files=files, data=data, headers=headers, timeout=300)
    response.raise_for_status()
    return response.json()
```

Consistency Check and Correction

```python
import requests


def check_sequence_consistency(video_urls, threshold=0.85, on_inconsistent=None):
    """
    Check the consistency of a multi-shot sequence.

    on_inconsistent: optional callback that receives the inconsistent shots and
    regenerates them (no regeneration helper is shown here, so supply your own)
    """
    api_key = "your_apiyi_key"
    endpoint = "https://api.apiyi.com/v1/sora/consistency-check"

    payload = {
        "video_urls": video_urls,
        "check_items": [
            "character_appearance",
            "environment_match",
            "lighting_continuity",
            "color_consistency",
        ],
    }
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }

    response = requests.post(endpoint, json=payload, headers=headers, timeout=300)
    response.raise_for_status()
    result = response.json()

    # If the score falls below the threshold, report the problem shots
    if result["consistency_score"] < threshold:
        print(f"Consistency score: {result['consistency_score']}")
        print(f"Problem shots: {result['inconsistent_shots']}")
        # Trigger correction through the caller-supplied regeneration function
        if on_inconsistent is not None:
            return on_inconsistent(result["inconsistent_shots"])
    return result
```

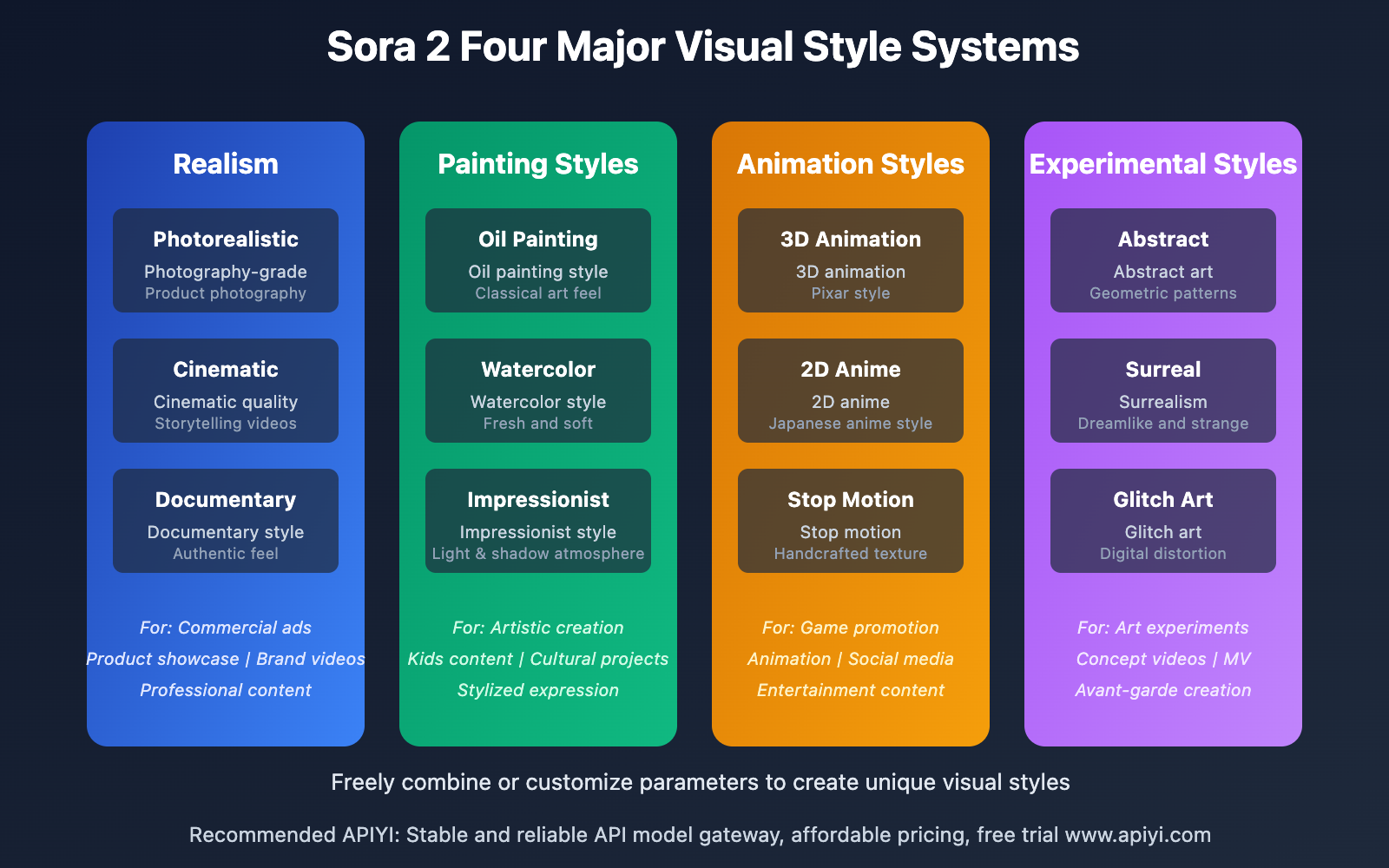

Feature 5: Diverse Visual Styles – Full Coverage from Realistic to Abstract

Visual Style Types Supported by Sora 2

Sora 2 supports the following style categories:

1. Realistic Styles

- Photorealistic (photography-grade realism)
- Cinematic (film-like quality)
- Documentary

2. Painting Styles

- Oil Painting
- Watercolor
- Impressionist

3. Animation Styles

- 3D Animation
- 2D Anime
- Stop Motion

4. Experimental Styles

- Abstract (abstract art)
- Surreal (surrealism)
- Glitch Art
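If a pipeline accepts style names from users, a lookup table keeps requests within the categories above. The preset identifiers in this sketch, such as `photorealistic` and `oil_painting`, are assumptions modeled on values like `oil_painting` in this article's code. Check the provider's documentation for the exact strings it accepts. `normalize_style` is a hypothetical helper.

```python
STYLE_CATEGORIES = {
    "realistic": ["photorealistic", "cinematic", "documentary"],
    "painting": ["oil_painting", "watercolor", "impressionist"],
    "animation": ["3d_animation", "2d_anime", "stop_motion"],
    "experimental": ["abstract", "surreal", "glitch_art"],
}

# Reverse index: preset identifier -> category name
PRESET_TO_CATEGORY = {
    preset: category
    for category, presets in STYLE_CATEGORIES.items()
    for preset in presets
}


def normalize_style(name: str) -> str:
    """Map a readable style name such as "Oil Painting" to an assumed preset identifier."""
    preset = name.strip().lower().replace(" ", "_").replace("-", "_")
    if preset not in PRESET_TO_CATEGORY:
        raise ValueError(f"Unsupported style: {name!r}")
    return preset
```

The `cyberpunk` preset in the next example is not among the listed categories, so this lookup would reject it. Extend the table if your provider supports more presets.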

Style Control Parameters

```python
import requests


def generate_styled_video(prompt, style_preset, custom_params=None):
    """
    Generate a video in a specific style
    """
    api_key = "your_apiyi_key"
    endpoint = "https://api.apiyi.com/v1/sora/styled-generate"

    payload = {
        "prompt": prompt,
        "style_preset": style_preset,
        "style_strength": 0.8,  # 0-1, style strength
        "audio_match_style": True,  # Match the audio to the visual style
    }
    # Optional custom style parameters
    if custom_params:
        payload["custom_style"] = custom_params

    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }

    response = requests.post(endpoint, json=payload, headers=headers, timeout=300)
    response.raise_for_status()
    return response.json()


# Example 1: Oil-painting-style product video
oil_painting_video = generate_styled_video(
    prompt="A bottle of red wine resting on a wooden table, sunset glow streaming through the window",
    style_preset="oil_painting",
    custom_params={
        "brush_texture": "thick",
        "color_palette": "warm_classical",
    },
)

# Example 2: Cyberpunk-style city
cyberpunk_video = generate_styled_video(
    prompt="Future city streets, neon lights flashing, pedestrians passing by",
    style_preset="cyberpunk",
    custom_params={
        "neon_intensity": "high",
        "rain_effect": True,
        "color_scheme": "blue_purple_pink",
    },
)
```

Style Transfer Feature

```python
import requests


def apply_style_transfer(content_video, style_reference):
    """
    Apply the style of a reference image to a video
    """
    api_key = "your_apiyi_key"
    endpoint = "https://api.apiyi.com/v1/sora/style-transfer"

    payload = {
        "content_video_url": content_video,
        "style_reference_url": style_reference,
        "transfer_strength": 0.7,  # Style transfer strength
        "preserve_motion": True,  # Preserve the original video's motion
        "temporal_consistency": 0.9,  # Temporal consistency
    }
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }

    response = requests.post(endpoint, json=payload, headers=headers, timeout=300)
    response.raise_for_status()
    return response.json()
```

Feature 6: Complete Audiovisual Work Generation – Post-Ready Production Output

Synchronized Audio-Video Generation

Sora 2's complete audiovisual work generation features include:

Audio Generation Capabilities:

- Ambient Sound Effects – Wind, rain, city noise, etc.

- Action Sound Effects – Footsteps, door closing, mechanical sounds, etc.

- Background Music – Automatically matched based on visual mood

- Voice-Over – Supports multilingual AI voice synthesis

Post-Production Ready Features:

- Standard Format Output – Mainstream formats like MP4/MOV

- Layered Export – Independent video, audio, and subtitle layers

- Metadata Preservation – Frame rate, color space, encoding information

- High Bitrate – Supports lossless or near-lossless encoding

Complete Workflow Example

```python
import requests


def create_ready_to_publish_video(project_config):
    """
    Create a complete, post-production-ready audiovisual work
    """
    api_key = "your_apiyi_key"
    endpoint = "https://api.apiyi.com/v1/sora/complete-production"

    payload = {
        "project_name": project_config["name"],
        "video_specs": {
            "prompt": project_config.get("prompt", ""),
            "duration": project_config["duration"],
            "resolution": "1080p",
            "fps": 30,
            "style": project_config.get("style", "cinematic"),
        },
        "audio_specs": {
            "include_ambient": True,
            "include_foley": True,  # Foley sound effects
            "background_music": {
                "enabled": True,
                "mood": project_config.get("music_mood", "uplifting"),
                "volume": -18,  # dB
            },
            "voice_over": project_config.get("voice_over"),
        },
        "output_format": {
            "video_codec": "h264",
            "audio_codec": "aac",
            "bitrate": "10M",
            "separate_tracks": True,  # Export separate audio tracks
        },
        "post_production_ready": {
            "color_grade": "rec709",  # Color standard
            "export_edl": True,  # Export an edit decision list
            "timecode_start": "00:00:00:00",
        },
    }
    # Assumption: forward pre-generated clips when the caller supplies them
    # (the combined scenarios later in this article pass "video_clips")
    if "video_clips" in project_config:
        payload["video_specs"]["video_clips"] = project_config["video_clips"]

    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }

    response = requests.post(endpoint, json=payload, headers=headers, timeout=600)
    response.raise_for_status()
    return response.json()


# Usage example: product promotional video
product_video = create_ready_to_publish_video({
    "name": "Smart Watch Launch Video",
    "prompt": "Premium smartwatch product showcase, multi-angle rotation, feature interface demonstration, sports scene application",
    "duration": 30,
    "style": "commercial",
    "music_mood": "energetic",
    "voice_over": {
        "text": "New smartwatch, redefining healthy living",
        "language": "en-US",
        "voice": "professional_male",
    },
})

print(f"Video: {product_video['video_url']}")
print(f"Audio tracks: {product_video['audio_tracks']}")
print(f"EDL file: {product_video['edl_url']}")
```
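The payload above sets `timecode_start` to `"00:00:00:00"`, which is SMPTE-style HH:MM:SS:FF notation (hours, minutes, seconds, frames). Assembling clips in an editor often requires converting between frame counts and timecode. The sketch below handles non-drop-frame timecode at integer frame rates, such as the 30 fps in the example. It does not cover drop-frame timecode for 29.97 fps, which needs a different calculation.

```python
def frames_to_timecode(frames: int, fps: int = 30) -> str:
    """Convert a frame count to non-drop-frame HH:MM:SS:FF timecode."""
    if frames < 0 or fps <= 0:
        raise ValueError("frames must be >= 0 and fps must be positive")
    total_seconds, ff = divmod(frames, fps)
    minutes, ss = divmod(total_seconds, 60)
    hh, mm = divmod(minutes, 60)
    return f"{hh:02d}:{mm:02d}:{ss:02d}:{ff:02d}"


def timecode_to_frames(timecode: str, fps: int = 30) -> int:
    """Inverse of frames_to_timecode for non-drop-frame timecode."""
    hh, mm, ss, ff = (int(part) for part in timecode.split(":"))
    if ff >= fps or ss >= 60 or mm >= 60:
        raise ValueError(f"Invalid timecode for {fps} fps: {timecode}")
    return ((hh * 60 + mm) * 60 + ss) * fps + ff
```

For the 30-second example at 30 fps, the last frame is frame 899, and `frames_to_timecode(899)` returns `"00:00:29:29"`.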

Batch Generation and Automation

```python
import requests


def batch_generate_video_variants(base_config, variations):
    """
    Batch-generate video variants (for A/B testing)
    """
    api_key = "your_apiyi_key"
    endpoint = "https://api.apiyi.com/v1/sora/batch-variants"

    payload = {
        "base_config": base_config,
        "variations": variations,
        "async": True,  # Asynchronous processing
        "callback_url": "https://your-domain.com/webhook",
    }
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }

    response = requests.post(endpoint, json=payload, headers=headers, timeout=60)
    response.raise_for_status()
    batch_job = response.json()
    return batch_job["job_id"]


# Generate five ad variants with different styles
variations = [
    {"style": "modern", "color_tone": "cool"},
    {"style": "vintage", "color_tone": "warm"},
    {"style": "minimalist", "color_tone": "neutral"},
    {"style": "luxury", "color_tone": "gold"},
    {"style": "tech", "color_tone": "neon"},
]

job_id = batch_generate_video_variants(
    base_config={
        "prompt": "A new-energy vehicle driving on city roads, showcasing technology and an eco-friendly concept",
        "duration": 15,
        "resolution": "1080p",
    },
    variations=variations,
)
print(f"Batch job ID: {job_id}")
```
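The article does not state how the batch endpoint combines `base_config` with each entry in `variations`. A common convention is a shallow merge in which variant keys override base keys. The sketch below makes that assumption explicit, so you can preview, log, or submit the expanded configurations yourself. `expand_variants` is a hypothetical helper.

```python
from copy import deepcopy


def expand_variants(base_config: dict, variations: list) -> list:
    """Return one full config per variation, with variation keys overriding the base.

    Assumption: a shallow merge, so a nested dict in a variation replaces
    (rather than merges with) the corresponding base value.
    """
    expanded = []
    for variation in variations:
        config = deepcopy(base_config)  # never mutate the shared base config
        config.update(deepcopy(variation))
        expanded.append(config)
    return expanded
```

With the five variations above, `expand_variants` yields five configs. All five share the prompt, the 15-second duration, and the 1080p resolution, and each has its own style and color tone.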

🎯 Enterprise Application Recommendation: For enterprises that produce video content at scale, we recommend building automated production workflows on the APIYI (apiyi.com) platform. It provides batch processing, task queue management, and budget controls, which can improve content production efficiency and reduce labor costs.

Combined Application Scenarios for Sora 2 Video Generation

Scenario 1: End-to-End Short-Video Marketing Workflow

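```python
def create_marketing_short_video(product_info):
    """
    End-to-end production workflow for short-form marketing video content
    """
    # Step 1: Generate the main product video
    main_video = generate_sora2_video(
        prompt=f"{product_info['description']}, product close-up, professional photography lighting",
        duration=5,
        resolution="1080p",
    )

    # Step 2: Turn product images into animated supplementary shots
    animated_shots = []
    for image in product_info["product_images"]:
        animation = animate_image_with_sora2(
            image_path=image,
            animation_prompt="Product rotates slightly, highlighting details",
            duration=3,
        )
        animated_shots.append(animation["video_url"])

    # Step 3: Generate a multi-shot sequence showing the product in use
    usage_scenes = generate_multi_shot_sequence([
        {"prompt": f"A user using the product {product_info['scenario_1']}", "duration": 4},
        {"prompt": f"A user using the product {product_info['scenario_2']}", "duration": 4},
    ])

    # Step 4: Assemble the complete audiovisual work
    final_video = create_ready_to_publish_video({
        "name": f"{product_info['name']}_marketing",
        # video_specs includes a prompt, so supply one alongside the clips
        "prompt": f"{product_info['description']}, marketing short video",
        "video_clips": [main_video["video_url"]] + animated_shots + usage_scenes["shots"],
        "duration": 20,
        "music_mood": "uplifting",
        "voice_over": {
            "text": product_info["slogan"],
            "language": "en-US",
        },
    })
    return final_video


# Usage example
marketing_video = create_marketing_short_video({
    "name": "Smart Speaker",
    "description": "A smart speaker with a modern minimalist design, black casing, and an LED ring light",
    "product_images": ["front.jpg", "side.jpg", "detail.jpg"],
    "scenario_1": "next to the living-room sofa",
    "scenario_2": "on a bedroom nightstand",
    "slogan": "Your smart-living assistant",
})
```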

Scenario 2: Educational Course Video Production

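```python
def create_educational_video(course_content):
    """
    Automated production of educational course videos
    """
    # Generate lecture scenes
    instructor_scenes = generate_multi_shot_sequence([
        {
            "prompt": "A professional instructor teaching in a modern classroom, key points shown on a whiteboard",
            "duration": 8,
            "camera": "medium_shot",
        },
        {
            "prompt": "Close-up of the instructor explaining with an earnest expression",
            "duration": 4,
            "camera": "close_up",
        },
    ])

    # Generate animated demonstration material
    demo_animations = []
    for concept in course_content["key_concepts"]:
        animation = generate_sora2_video(
            prompt=f"{concept['description']}, educational animation style, clear diagrams",
            duration=6,
            resolution="720p",
        )
        demo_animations.append(animation["video_url"])

    # Assemble the complete course video
    course_video = create_ready_to_publish_video({
        "name": course_content["title"],
        # video_specs includes a prompt, so supply one alongside the clips
        "prompt": f"Educational course video: {course_content['title']}",
        "video_clips": instructor_scenes["shots"] + demo_animations,
        "duration": 600,  # 10-minute course
        "voice_over": {
            "text": course_content["script"],
            "language": "zh-CN",  # Language of the script; change to match your course
            "voice": "teacher_female",
        },
    })
    return course_video
```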

Scenario 3: Social Media Content Matrix

```python
def create_social_media_content_matrix(campaign_brief):
    """
    Generate a multi-platform content matrix for one marketing campaign
    """
    content_matrix = {}

    # Douyin/TikTok vertical short video (9:16)
    content_matrix["tiktok"] = generate_sora2_video(
        prompt=f"{campaign_brief['message']}, vertical composition, fast-paced editing style",
        duration=15,
        resolution="1080x1920",
    )

    # Instagram feed video (4:5); Instagram Reels use 9:16 instead
    content_matrix["instagram"] = generate_sora2_video(
        prompt=f"{campaign_brief['message']}, fashionable visual style, Instagram aesthetic",
        duration=30,
        resolution="1080x1350",
    )

    # YouTube Shorts (9:16)
    content_matrix["youtube_shorts"] = generate_sora2_video(
        prompt=f"{campaign_brief['message']}, educational storytelling, clear subtitles",
        duration=60,
        resolution="1080x1920",
    )

    # WeChat Channels (1:1)
    content_matrix["wechat"] = generate_sora2_video(
        prompt=f"{campaign_brief['message']}, visual style suited to Chinese-speaking audiences",
        duration=60,
        resolution="1080x1080",
    )

    return content_matrix
```
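The comments in the function label each resolution with an aspect ratio. You can check such labels quickly by dividing width and height by their greatest common divisor. This small helper does exactly that using only the standard library. It expects a `"WIDTHxHEIGHT"` string and rejects shorthand such as `"1080p"`.

```python
from math import gcd


def aspect_ratio(resolution: str) -> str:
    """Reduce a "WIDTHxHEIGHT" string such as "1080x1920" to a ratio like "9:16"."""
    width, height = (int(value) for value in resolution.lower().split("x"))
    divisor = gcd(width, height)
    return f"{width // divisor}:{height // divisor}"
```

For instance, `aspect_ratio("1080x1350")` returns `"4:5"`, which matches the Instagram feed label.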

Cost Optimization Strategies for Sora 2 Video Generation

Balancing Cost Against Resolution and Duration

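| Resolution | Cost (5 s) | Cost (10 s) | Cost (20 s) | Recommended Use |
|---|---|---|---|---|
| 480p | ¥0.5 | ¥1.0 | ¥2.0 | Rapid prototyping, internal testing |
| 720p | ¥1.2 | ¥2.4 | ¥4.8 | Social media, online ads |
| 1080p | ¥3.0 | ¥6.0 | ¥12.0 | Professional content, brand videos |
| 4K | ¥8.0 | ¥16.0 | ¥32.0 | TV commercials, cinema content |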
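Every row in the table scales linearly with duration. For example, 720p costs ¥1.2 for 5 seconds and ¥2.4 for 10. Dividing each 5-second figure by 5 gives a per-second rate, and the sketch below uses those rates to estimate a job's cost. The rates are only the illustrative prices from this table. Actual pricing may differ and may include batch discounts. `estimate_cost` is a hypothetical helper. It uses `Decimal` so that currency amounts do not pick up floating-point error.

```python
from decimal import Decimal

# Per-second rates (CNY) derived from the table above: 5-second price / 5
RATE_PER_SECOND = {
    "480p": Decimal("0.10"),
    "720p": Decimal("0.24"),
    "1080p": Decimal("0.60"),
    "4k": Decimal("1.60"),
}


def estimate_cost(resolution: str, seconds: int, count: int = 1) -> Decimal:
    """Estimate the cost of `count` videos of `seconds` length at `resolution`."""
    rate = RATE_PER_SECOND.get(resolution.lower())
    if rate is None:
        raise ValueError(f"No rate for resolution {resolution!r}")
    return (rate * seconds * count).quantize(Decimal("0.01"))
```

Suppose a tiered workflow tests ten 5-second 480p drafts before rendering one 10-second 1080p final. Its cost is `estimate_cost("480p", 5, count=10) + estimate_cost("1080p", 10)`, which is ¥11.00 under these rates.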

Optimization Recommendations

Strategy 1: Tiered Generation

```python
def cost_optimized_generation(prompt, quality_tier="social"):
    """
    Choose a quality tier that matches the intended use
    """
    quality_configs = {
        "prototype": {"resolution": "480p", "duration": 5},
        "social": {"resolution": "720p", "duration": 10},
        "professional": {"resolution": "1080p", "duration": 15},
        # 30 s exceeds the 20-second standard-mode limit noted in the FAQ below;
        # longer videos may need the extension feature
        "broadcast": {"resolution": "4k", "duration": 30},
    }
    config = quality_configs[quality_tier]

    return generate_sora2_video(
        prompt=prompt,
        duration=config["duration"],
        resolution=config["resolution"],
    )
```

Strategy 2: Take Advantage of Batch Discounts

```python
import requests


# Submit in bulk through the APIYI platform to get discounted pricing
def submit_batch_with_discount(video_requests):
    """
    Submit tasks in bulk to receive discounted pricing
    """
    api_key = "your_apiyi_key"
    endpoint = "https://api.apiyi.com/v1/sora/batch"

    payload = {
        "requests": video_requests,
        "priority": "normal",  # normal/high
        "apply_discount": True,
    }
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }

    response = requests.post(endpoint, json=payload, headers=headers, timeout=60)
    response.raise_for_status()
    batch_info = response.json()

    print(f"Original price: ¥{batch_info['original_cost']}")
    print(f"Discounted price: ¥{batch_info['discounted_cost']}")
    print(f"Saved: ¥{batch_info['saved_amount']}")
    return batch_info
```

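Frequently Asked Questions and Best Practices

Q1: How can I keep a multi-shot sequence consistent?

A: Use the following techniques:

- Provide reference images of characters or scenes

- State explicitly in the prompt which elements must stay consistent

- Use the character_consistency_weight parameter to strengthen consistency

- Generate the key shots first, then generate transition shots based on them

Q2: Is there a length limit for text-to-video prompts?

A:

- Keep each prompt under about 500 characters

- Overly long prompts can make it hard for the model to focus on what matters

- Use a structured prompt format that describes subject, action, environment, and style in layers

Q3: Can the generated audio be exported separately?

A: Yes. Setting separate_tracks: true exports independent audio track files, which you can remix in post-production.

Q4: How long can Sora 2 videos be?

A:

- Standard mode: up to 20 seconds

- Extended mode: generate longer content in segments using the video extension feature

- Recommendation: produce long videos shot by shot and merge them afterward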
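For the segment-and-merge approach, FFmpeg's concat demuxer can join clips without re-encoding. This works only if all segments share the same codec, resolution, and frame rate, which is likely but not guaranteed when they come from the same generation settings. The sketch below writes the list file that the demuxer expects and builds the `ffmpeg` command. Running `merge_clips` requires FFmpeg on your `PATH`. Both function names are illustrative.

```python
import subprocess
from pathlib import Path
from typing import List, Sequence


def build_concat_command(clips: Sequence[str], list_path: str, output_path: str) -> List[str]:
    """Write an FFmpeg concat list file and return the command that merges the clips in order."""
    lines = []
    for clip in clips:
        # Paths are single-quoted in the list file; escape embedded single quotes as '\''
        escaped = str(Path(clip).resolve()).replace("'", "'\\''")
        lines.append(f"file '{escaped}'")
    Path(list_path).write_text("\n".join(lines) + "\n", encoding="utf-8")
    # -safe 0 permits absolute paths; -c copy joins the streams without re-encoding
    return ["ffmpeg", "-f", "concat", "-safe", "0", "-i", list_path,
            "-c", "copy", output_path]


def merge_clips(clips: Sequence[str], output_path: str, list_path: str = "concat_list.txt") -> None:
    """Merge clips in order; raises CalledProcessError if ffmpeg exits with an error."""
    subprocess.run(build_concat_command(clips, list_path, output_path), check=True)
```

If the segments differ in encoding parameters, drop `-c copy` so FFmpeg re-encodes. Re-encoding is slower but tolerates mismatches.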

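Q5: How do I choose a suitable visual style?

A: Choose based on content type:

- Commercial ads: Cinematic or Commercial

- Educational content: Clean or Explainer

- Artistic work: a painting or experimental style, depending on the target audience

- Social media: Trendy or Viral styles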

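Summary and Practical Recommendations

Sora 2's video generation features give content creators a complete toolchain. It spans simple text-to-video generation, complex multi-shot sequences, and complete post-production-ready output, covering the full modern video production workflow.

🎯 Getting started:

- Start with text-to-video and learn to write effective prompts

- Try image-to-animation to understand motion and style control

- Learn video extension to master scene continuation

- Finally, tackle multi-shot sequences for complete narratives

🎯 For professional users:

- Integrate Sora 2 into your existing workflow through the APIYI (apiyi.com) platform

- Build an asset library and style presets to generate more efficiently

- Use batch interfaces and asynchronous processing to reduce costs

- Combine generated output with traditional post-production tools for fine-tuning

🎯 Cost control essentials:

- Choose the resolution based on the publishing platform

- Use batch discounts for large workloads

- Test at low resolution first, then render in high definition once you are satisfied with the result

- Use the APIYI platform's usage analytics tools to refine your usage strategy

Sora 2's video generation features are changing how content is created and can shorten the path from idea to finished product from weeks to hours. Mastering these core capabilities puts you at the forefront of AI video creation, ready to give audiences richer, higher-quality audiovisual experiences.

Related resources:

- APIYI platform: apiyi.com

- Sora 2 API documentation: api.apiyi.com/docs/sora

- Example code repository: github.com/apiyi/sora2-examples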