Author's Note: A detailed guide to creating videos longer than Sora 2's duration limit, covering API multi-segment stitching, reference frames for consistency, coherent prompt techniques, and a post-editing workflow for producing professional-grade long-form content.

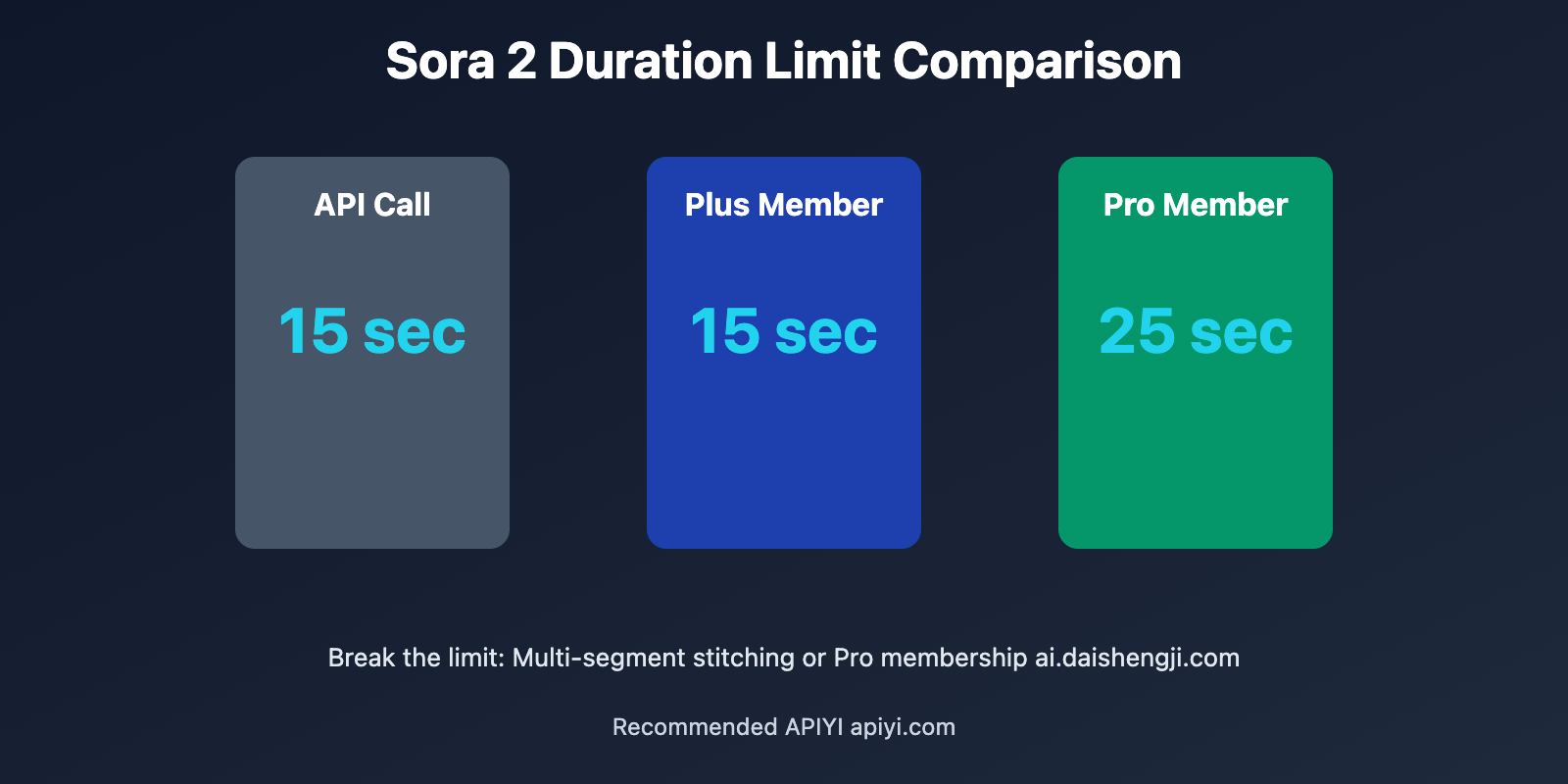

Sora 2's video generation capabilities are impressive, but duration limits remain a core challenge for creators. API calls are capped at 15 seconds, and even ChatGPT Pro members (Sora Pro) can generate at most 25 seconds at a time on the web version. So how do you create videos lasting one minute or more?

The answer: Multi-segment stitching + Reference frame consistency + Coherent prompt design + Post-editing optimization. This article will detail this complete long-video production solution, from technical principles to practical cases, helping you break through Sora 2's duration limits.

The article covers API multi-segment calling techniques, reference frame technology for character consistency, coherent prompt design methods, seamless video stitching techniques, and real-world experience from Bilibili entertainment creators (like Chinese football comedy goal compilations).

Core Value: Through this article, you'll learn how to use Sora 2 to create professional long videos over one minute, master core multi-segment stitching technology, and significantly enhance flexibility and possibilities in video creation.

Sora 2 Duration Limit Background

Official Duration Limits

Sora 2 duration limits under different access methods:

| Access Method | Max Duration | Target Users | Cost |
|---|---|---|---|
| API Call | 15 seconds | Developers, batch generation | Pay-per-use, ~$0.5-2/video |
| Web (Free Users) | 15 seconds | Regular users | ChatGPT Plus $20/month |
| Web (Pro Members) | 25 seconds | Pro members | ChatGPT Pro $200/month |

Limitation Reasons:

- Computation Cost: The compute needed for video generation rises steeply with duration

- Resource Allocation: Duration limits allow serving more users

- Quality Assurance: Shorter videos are easier to quality-control

- Business Strategy: Duration differentiation distinguishes membership tiers

Real-World Needs

However, actual creation often requires longer videos:

- Entertainment Shorts: Complete stories for Bilibili, TikTok (1-3 minutes)

- Product Demos: Complete product introductions and usage (30-60 seconds)

- Educational Content: Step-by-step tutorial demonstrations (1-2 minutes)

- Creative Works: Like football goal compilations, music MVs (1-5 minutes)

Case Study: Chinese football comedy goal compilation videos on Bilibili typically need 1-3 minutes to fully showcase multiple funny shots and editing effects, far exceeding Sora 2's single generation limit.

Multi-Segment Stitching Core Solution

The practical way around Sora 2's duration limit is multi-segment stitching, the method widely adopted by Bilibili entertainment creators.

| Solution Element | Core Technology | Challenge | Solution |
|---|---|---|---|
| Multi-Segment Generation | Multiple API calls or web generations | Cost control, efficiency | Use APIYI apiyi.com to reduce costs |
| Consistency Maintenance | Reference frame technique | Character, scene changes | Use last frame as next segment's reference |
| Coherence Design | Prompt optimization | Story logic, shot transitions | Design coherent segmented prompts |
| Seamless Stitching | Video editing software | Abrupt transitions, frame jumps | Use fades, match cuts |

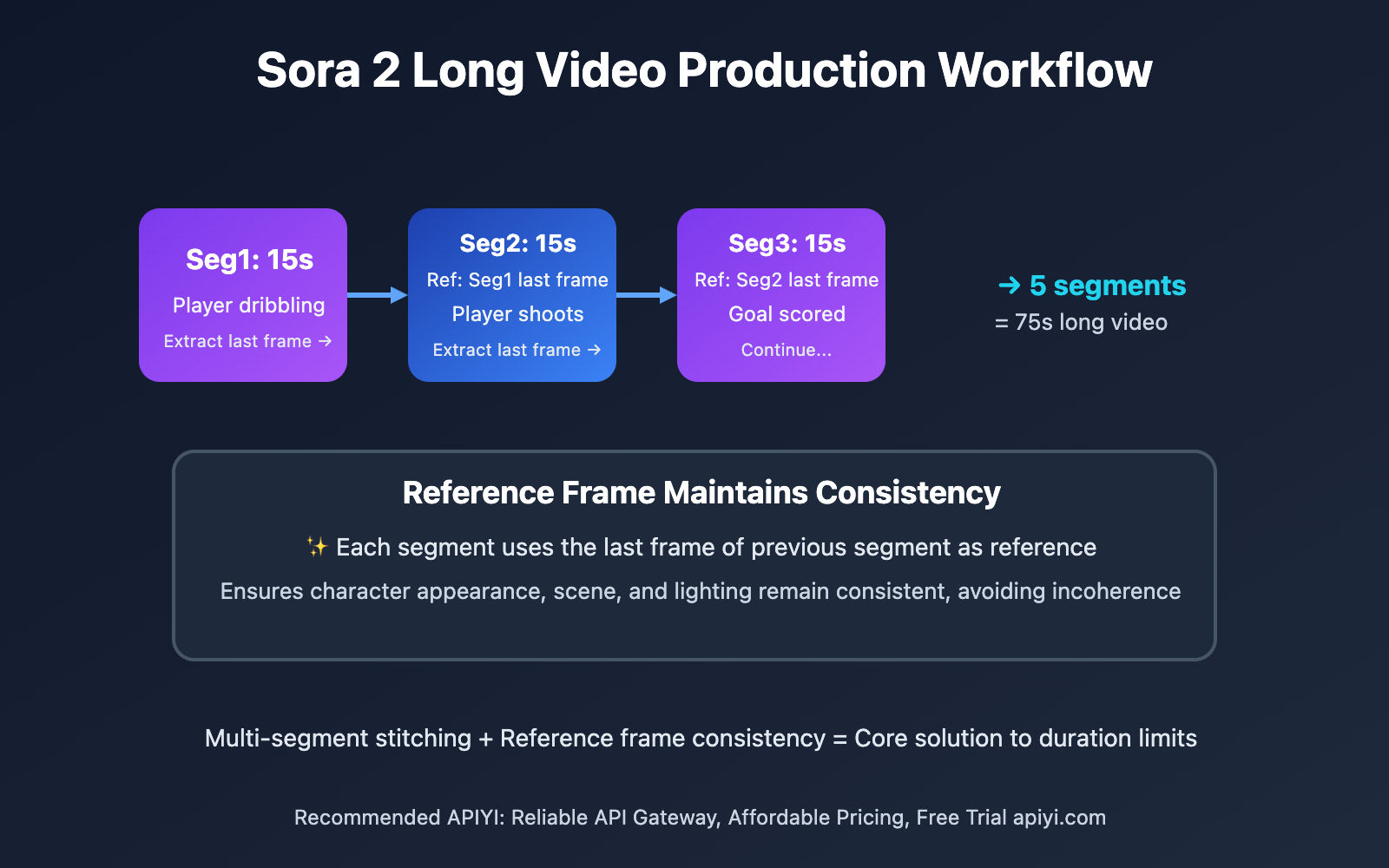

🔥 Core Process Details

Step 1: Segment Planning

Split the target video into multiple 10-15 second segments and generate each one in a separate call.

Planning Points:

- Shot Change Points: Segment at natural transitions (scene changes, action completion)

- Duration Allocation: Each segment 10-15 seconds, reserve 1-2 seconds for transitions

- Story Coherence: Ensure each segment's ending naturally connects to next beginning

Example (Chinese Football Goal Compilation):

Segment 1 (15s): Player dribbling past defenders, preparing to shoot

Segment 2 (15s): Shooting action, ball flying toward goal

Segment 3 (15s): Ball in net, player celebrating

Segment 4 (15s): Teammates gathering to celebrate, funny gestures

Segment 5 (15s): Whole stadium cheering, comedy effects
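The planning points above can be turned into a small calculation. The sketch below is illustrative only: `plan_segments` and its parameters are hypothetical names, it assumes every segment has the same length, and it assumes the reserved transition time is spent as overlap at each join. Real endpoints may only accept certain fixed durations, so treat the result as a starting point.

```python
import math


def plan_segments(total_seconds, max_segment=15.0, overlap=1.0):
    """Split a target runtime into equal segments no longer than max_segment.

    overlap is the time reserved at each join for a transition, so n segments
    of length L yield n * L - (n - 1) * overlap seconds of finished video.
    """
    if not 0 <= overlap < max_segment:
        raise ValueError("overlap must be non-negative and shorter than a segment")
    if total_seconds <= max_segment:
        return [float(total_seconds)]
    # Smallest segment count whose combined length still covers the target
    count = math.ceil((total_seconds - overlap) / (max_segment - overlap))
    length = (total_seconds + (count - 1) * overlap) / count
    return [round(length, 2)] * count


print(plan_segments(60))  # [12.8, 12.8, 12.8, 12.8, 12.8]
```

For a 60-second target with 1 second reserved per join, this gives five segments of 12.8 seconds each, which stays inside the 10-15 second range recommended above.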

Step 2: Reference Frame Technique for Consistency

Reference Frame Purpose:

- Maintain character appearance, clothing, scene consistency

- Ensure camera angle and lighting continuity

- Improve video coherence and professionalism

Reference Frame Extraction Method:

- Extract static image from last frame of Segment 1

- Use this image as reference frame (image-to-video) for Segment 2

- Describe actions and scenes following the reference in prompt

- Repeat process for subsequent segments

Technical Details:

```python
# Pseudocode example: generate_sora_video and extract_last_frame are
# placeholder helpers, not functions from a real SDK
segment_1_video = generate_sora_video(prompt_1, duration=15)
last_frame_1 = extract_last_frame(segment_1_video)  # Extract last frame

# Use last frame as reference for Segment 2
segment_2_video = generate_sora_video(
    prompt_2,
    image=last_frame_1,  # Reference frame
    duration=15,
)
last_frame_2 = extract_last_frame(segment_2_video)

# Continue with next segment...
```

Step 3: Coherent Prompt Design

Core Principles:

- Continuity: Each segment's prompt describes previous segment's ending state

- Action Progression: Describe natural action evolution

- Scene Consistency: Maintain coherent scene descriptions

Example Prompts (Chinese Football Goal Compilation):

Segment 1:

A Chinese football player in red jersey dribbling past defenders, approaching the penalty box, preparing to shoot. Dynamic camera following the action, sunny day, stadium crowd cheering.

Segment 2 (based on Segment 1 last frame):

Continue from the previous frame: The player shoots powerfully, ball flying through the air in slow motion towards the goal. Goalkeeper diving, dramatic moment, crowd holding breath.

Segment 3 (based on Segment 2 last frame):

Continue: Ball hits the back of the net, goal scored! Player running with arms wide open, celebrating, teammates starting to rush towards him.

Keyword Techniques:

- Use "Continue from the previous frame" for explicit continuation

- Describe specific action progression (dribbling → shooting → goal → celebration)

- Maintain consistent environment descriptions (red jersey, stadium, sunny day)
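One way to apply these keyword techniques consistently is to assemble every prompt from the same parts. The helper below is a minimal sketch: `build_prompts` is a hypothetical name, and the idea of appending one shared style sentence to each segment is an assumption about what helps consistency, not a documented Sora 2 feature.

```python
def build_prompts(style, actions, lead_in="Continue from the previous frame:"):
    """Return one prompt per segment, each ending with the same style sentence."""
    prompts = []
    for index, action in enumerate(actions):
        # Only segments after the first continue from a reference frame
        prefix = "" if index == 0 else lead_in + " "
        prompts.append(f"{prefix}{action.strip()} {style.strip()}")
    return prompts


style = "Chinese football player in red jersey, sunny day, packed stadium."
actions = [
    "The player dribbles past defenders and approaches the penalty box.",
    "The player shoots powerfully and the ball flies toward the goal.",
    "The ball hits the back of the net and the player celebrates.",
]

for prompt in build_prompts(style, actions):
    print(prompt)
```

Keeping the environment description in one variable means that a change such as a different jersey color is applied to every segment at once.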

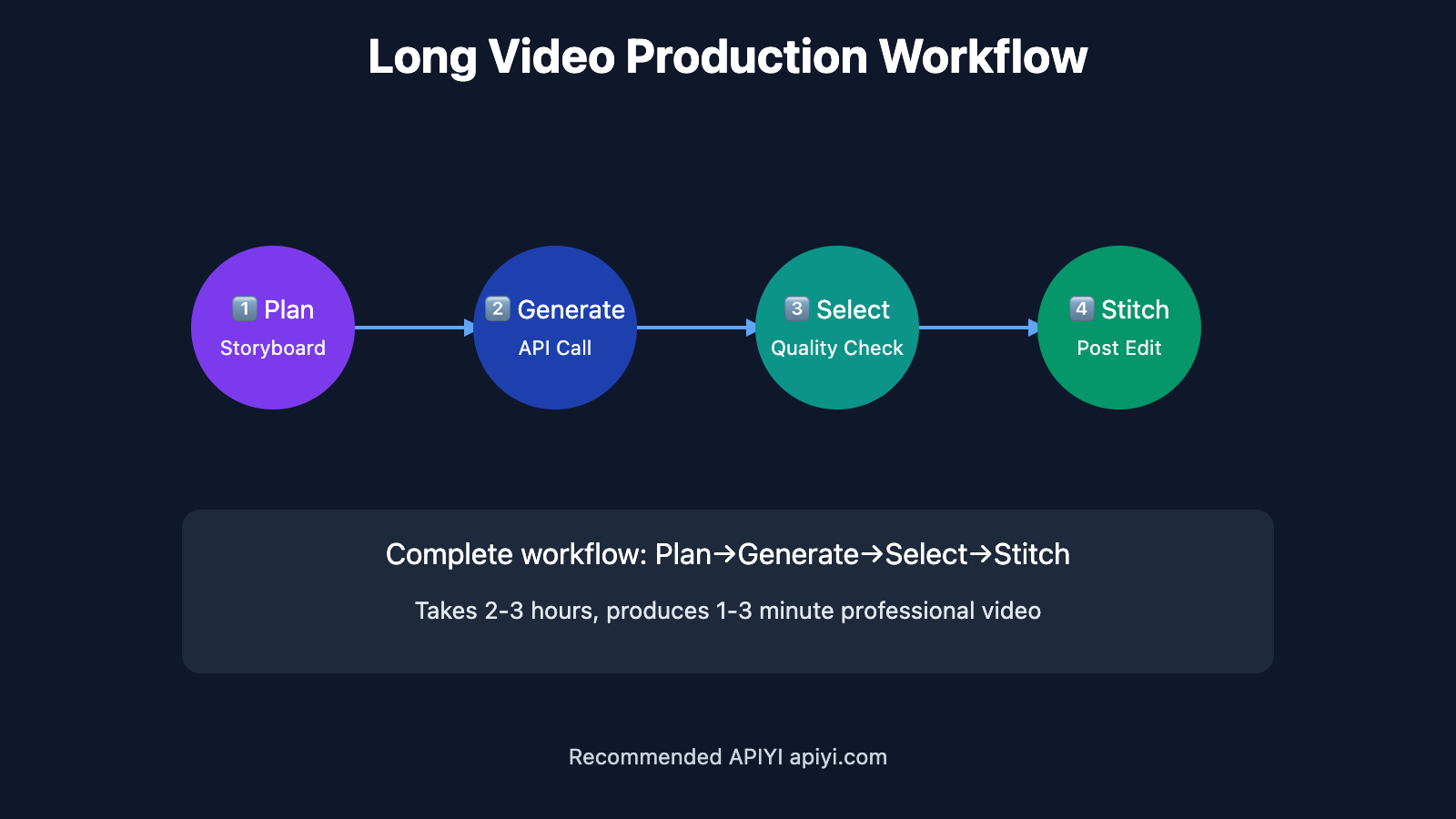

Sora 2 Long Video Production Practical Workflow

Complete long video production workflow includes the following steps:

| Production Phase | Core Tasks | Recommended Tools | Estimated Time |
|---|---|---|---|
| 🎯 Pre-Production | Storyboard script, duration planning | Word/Notion | 30-60 minutes |
| 🚀 Video Generation | API multi-segment calls, reference generation | APIYI apiyi.com | 2-5 minutes per segment |
| 💡 Quality Selection | Pick best results, regenerate | Local player | 10-20 minutes |
| 📊 Post-Stitching | Video editing, transition optimization | Premiere/CapCut | 30-60 minutes |

Sora 2 API Multi-Segment Call Technical Implementation

💻 Quick Start – Automated API Generation

Using APIYI platform for Sora 2 multi-segment video generation (Python):

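```python
import base64
import time

import cv2
import requests

# Configure APIYI platform
api_key = "your_api_key"
base_url = "https://api.apiyi.com/v1/video/generations"

# Segmented prompt list
prompts = [
    "A Chinese football player in red jersey dribbling past defenders...",
    "Continue: The player shoots powerfully, ball flying towards goal...",
    "Continue: Ball hits the net, goal! Player celebrates...",
    "Continue: Teammates rush to celebrate, funny gestures...",
    "Continue: Whole team celebrates, confetti effect...",
]

headers = {
    "Authorization": f"Bearer {api_key}",
    "Content-Type": "application/json",
}


def extract_last_frame_from_url(video_url, path="segment.mp4"):
    """Download a segment and return its last frame as a Base64 data URI."""
    with open(path, "wb") as f:
        f.write(requests.get(video_url, timeout=300).content)
    cap = cv2.VideoCapture(path)
    cap.set(cv2.CAP_PROP_POS_FRAMES, int(cap.get(cv2.CAP_PROP_FRAME_COUNT)) - 1)
    ok, frame = cap.read()
    cap.release()
    if not ok:
        return None
    ok, buf = cv2.imencode(".jpg", frame)
    if not ok:
        return None
    return "data:image/jpeg;base64," + base64.b64encode(buf.tobytes()).decode()


# Store generated videos
videos = []
last_frame = None

for i, prompt in enumerate(prompts):
    print(f"Generating segment {i+1}...")

    # Build request
    data = {
        "model": "sora-2",
        "prompt": prompt,
        "duration": 15,  # 15 seconds
        "resolution": "1080p",
    }

    # Add reference frame if available
    if last_frame:
        data["image"] = last_frame  # Base64 or URL

    # Send request and stop on HTTP errors
    response = requests.post(base_url, json=data, headers=headers, timeout=600)
    response.raise_for_status()
    result = response.json()

    # Get video URL (field name as used in this article; check the platform docs)
    video_url = result.get("video_url")
    if not video_url:
        raise RuntimeError(f"Segment {i+1} returned no video URL: {result}")
    videos.append(video_url)

    # Extract last frame for next segment
    last_frame = extract_last_frame_from_url(video_url)

    # Wait to avoid rate limiting
    time.sleep(5)

print(f"Successfully generated {len(videos)} segments!")
print("Video list:", videos)
```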
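A fixed pause between calls helps with rate limits, but a failed call still ends the whole run. The sketch below shows one common alternative, retrying with exponential backoff. `call_with_retry` is a hypothetical helper and not part of any SDK, and the `flaky` function only simulates a rate-limit error so the example runs without network access.

```python
import time


def call_with_retry(func, max_attempts=4, base_delay=2.0, sleep=time.sleep):
    """Call func(); after a failure wait base_delay * 2**attempt, then retry."""
    for attempt in range(max_attempts):
        try:
            return func()
        except Exception:
            # Give up after the final attempt and surface the original error
            if attempt == max_attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)


# Simulated API call that fails twice before succeeding
attempts = {"count": 0}


def flaky():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise RuntimeError("simulated rate limit")
    return "ok"


delays = []  # record the waits instead of actually sleeping
result = call_with_retry(flaky, sleep=delays.append)
print(result, delays)  # ok [2.0, 4.0]
```

In the generation loop, the request could be wrapped as `call_with_retry(lambda: requests.post(base_url, json=data, headers=headers))`, keeping the default `sleep` so the waits are real.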

🎯 Reference Frame Extraction Technique

Using FFmpeg or OpenCV to extract video's last frame:

```python
import base64

import cv2


def extract_last_frame(video_path):
    """Extract video's last frame and convert to Base64"""
    # Open video
    cap = cv2.VideoCapture(video_path)
    try:
        # Get total frame count
        total_frames = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))

        # Jump to last frame
        cap.set(cv2.CAP_PROP_POS_FRAMES, total_frames - 1)
        ret, frame = cap.read()
    finally:
        # Always release the capture, even if reading fails
        cap.release()

    if not ret:
        return None

    # Save as image
    cv2.imwrite("last_frame.jpg", frame)

    # Convert to Base64 (for API call)
    with open("last_frame.jpg", "rb") as f:
        image_base64 = base64.b64encode(f.read()).decode()
    return f"data:image/jpeg;base64,{image_base64}"


# Usage example
last_frame = extract_last_frame("segment_1.mp4")
```
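The very last frame is not always the best reference, because fast movement can leave it blurred. A common heuristic is to score a few candidate frames by the variance of their Laplacian and keep the sharpest. The sketch below implements that score in plain Python on tiny grayscale grids so it runs without extra libraries. `laplacian_variance` and `pick_sharpest` are hypothetical names, and each frame is assumed to be a 2D list of at least 3x3 values.

```python
def laplacian_variance(gray):
    """Variance of a 4-neighbour Laplacian over a 2D list of grayscale values."""
    height, width = len(gray), len(gray[0])
    values = [
        gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1] + gray[y][x + 1]
        - 4 * gray[y][x]
        for y in range(1, height - 1)
        for x in range(1, width - 1)
    ]
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


def pick_sharpest(frames):
    """Return the index of the frame with the highest Laplacian variance."""
    return max(range(len(frames)), key=lambda i: laplacian_variance(frames[i]))


# Toy 4x4 frames: a flat, featureless one and a high-contrast checkerboard
flat = [[128] * 4 for _ in range(4)]
checker = [[255 * ((x + y) % 2) for x in range(4)] for y in range(4)]
print(pick_sharpest([flat, checker]))  # 1
```

On real frames read with OpenCV, a comparable score is `cv2.Laplacian(gray, cv2.CV_64F).var()` computed on the grayscale image, which is far faster than the pure-Python loop.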

🚀 Cost Optimization Comparison

Sora 2 generation cost comparison based on actual testing:

| Provider | Per Segment (15s) | 5 Segments (75s) | API Stability | Rating |
|---|---|---|---|---|
| OpenAI Official API | $1.5-2.0 | $7.5-10.0 | ⭐⭐⭐⭐ | ⭐⭐⭐ |
| APIYI apiyi.com | $1.2-1.5 | $6.0-7.5 | ⭐⭐⭐⭐⭐ | ⭐⭐⭐⭐⭐ |
| Web Pro Membership | $200/month | Unlimited (25s limit) | ⭐⭐⭐⭐ | ⭐⭐⭐ |

🔍 Cost Recommendation: For creators who generate long videos frequently, the APIYI apiyi.com platform is the more economical option. At the per-segment prices in the table, it costs about 20-25% less than the official OpenAI API, while providing more stable service and technical support.
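The 20-25% figure follows directly from the per-segment prices in the table. The short calculation below reproduces it. The function names are illustrative, and the prices are the article's test figures, not guaranteed rates.

```python
def total_cost(per_segment_range, segments):
    """Scale a (low, high) per-segment price range to a number of segments."""
    low, high = per_segment_range
    return (round(low * segments, 2), round(high * segments, 2))


def savings_percent(baseline, alternative):
    """Percentage saved by paying `alternative` instead of `baseline`."""
    return round((baseline - alternative) / baseline * 100, 1)


official = (1.5, 2.0)  # USD per 15-second segment, from the table
apiyi = (1.2, 1.5)

print(total_cost(official, 5))  # (7.5, 10.0)
print(total_cost(apiyi, 5))     # (6.0, 7.5)
print(savings_percent(official[0], apiyi[0]))  # 20.0
print(savings_percent(official[1], apiyi[1]))  # 25.0
```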

💰 Membership Upgrade Options

For users needing Sora Pro (25 second duration):

Official Channel:

- ChatGPT Pro Membership: $200/month

- Includes Sora Pro, GPT-4, DALL-E and all features

Third-party Recharge (cost optimization):

- Visit ai.daishengji.com for membership upgrade

- Discounted compared to official pricing

- Suitable for individual users or small-scale use

💰 Selection Recommendation:

- API Solution (Recommended): For batch generation, automation, use APIYI apiyi.com

- Web Pro: For users not needing API, occasional long videos, consider third-party recharge

- Hybrid Approach: Web for testing, API for batch generation

✅ Sora 2 Long Video Production Best Practices

| Practice Point | Specific Recommendations | Considerations |
|---|---|---|
| 🎯 Segment Duration | Each segment 10-15 seconds, don't exceed 15 | API limit, timeout causes failure |
| ⚡ Reference Quality | Extract high-quality last frame, ensure clarity | Blurry reference affects next segment quality |
| 💡 Prompt Design | Each segment must include "Continue from…" | Maintain semantic coherence |
| 🔧 Transition Handling | Design natural shot changes at segment points | Avoid abrupt frame jumps |

📋 Recommended Video Stitching Tools

| Tool Type | Recommended Tools | Features |
|---|---|---|
| Professional Editing | Adobe Premiere Pro | Powerful, supports complex transitions |
| Simple Stitching | CapCut | Easy to use, suitable for quick stitching |
| Automated Processing | FFmpeg | Command-line tool, suitable for batch processing |
| API Platform | APIYI | One-stop Sora 2 API access |

🛠️ Tool Selection Recommendation: For Sora 2 long video production, we recommend APIYI apiyi.com as the video generation platform, combined with CapCut for post-stitching. APIYI provides stable Sora 2 API access and supports batch calls and automated workflows, which suits long video production.

🔍 Stitching Technique Details

1. Match Cut:

- Cut at continuous action points

- Example: Player shooting action continues from Segment 1 to Segment 2

- Effect: Audience doesn't notice the edit point

2. Fade In/Out:

- Add 0.3-0.5 second fade transition between segments

- Suitable for larger scene changes

- Effect: Smooth transition, not abrupt

3. Music Cover:

- Add continuous background music

- Music rhythm can mask frame jumps

- Effect: Common technique in entertainment videos

FFmpeg Auto-Stitching Example:

```bash
# Create file list
cat > filelist.txt << EOF
file 'segment_1.mp4'
file 'segment_2.mp4'
file 'segment_3.mp4'
file 'segment_4.mp4'
file 'segment_5.mp4'
EOF

# Seamless stitching
ffmpeg -f concat -safe 0 -i filelist.txt -c copy output_final.mp4

# Add fade transitions (requires re-encoding)
ffmpeg -i segment_1.mp4 -i segment_2.mp4 \
  -filter_complex "[0:v]fade=out:st=14:d=1[v0];[1:v]fade=in:st=0:d=1[v1];[v0][v1]concat=n=2:v=1[outv]" \
  -map "[outv]" output_with_fade.mp4
```

🚨 Important Notes: Seamless stitching (-c copy) is fast but may stutter in some players, and it expects all segments to share the same codec settings. Re-encoding (-c:v libx264) is more stable but slower. Test seamless stitching first and re-encode if the result is unsatisfactory.
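With more than two segments, writing the filter graph by hand gets error-prone. The sketch below builds one programmatically. Note that it swaps in FFmpeg's `xfade` filter (a cross-fade, available in FFmpeg 4.3 and later) instead of the separate fade-out and fade-in shown above, and it handles video only. `build_xfade_graph` is a hypothetical helper, and it assumes all clips share resolution and frame rate, which `xfade` requires.

```python
def build_xfade_graph(durations, fade=0.5):
    """Build a filter_complex string that cross-fades clips in input order.

    Returns the graph and the label of the final video stream.
    """
    if len(durations) < 2:
        raise ValueError("need at least two clips")
    parts = []
    previous = "[0:v]"
    elapsed = durations[0]  # length of the stitched output so far
    for index in range(1, len(durations)):
        # Each cross-fade starts `fade` seconds before the current output ends
        offset = round(elapsed - fade, 3)
        label = f"[x{index}]"
        parts.append(
            f"{previous}[{index}:v]"
            f"xfade=transition=fade:duration={fade}:offset={offset}{label}"
        )
        elapsed += durations[index] - fade
        previous = label
    return ";".join(parts), previous


graph, last = build_xfade_graph([15, 15, 15])
print(graph)
print(last)  # [x2]
```

The resulting string is passed to `-filter_complex`, with `-map` pointing at the returned label, for example `-map "[x2]"` for three inputs. Because each cross-fade overlaps two clips, three 15-second segments with 0.5-second fades produce 44 seconds of output.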

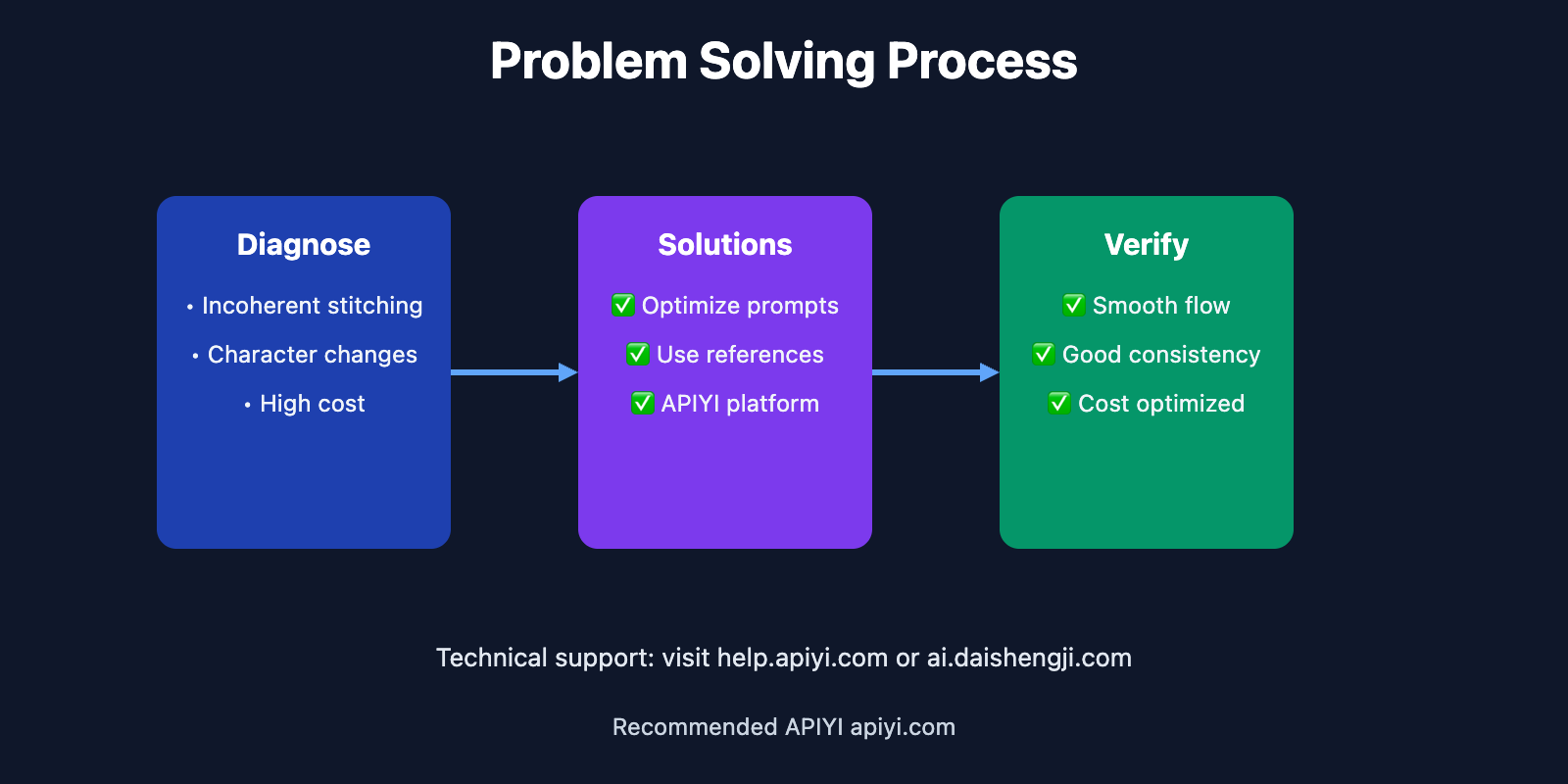

❓ Sora 2 Long Video Production FAQ

Q1: Why use reference frames? What happens without them?

Core Purpose of Reference Frames:

- Maintain Character Consistency: Ensure same character has consistent appearance and clothing across segments

- Continue Scene State: Maintain scene, lighting, and camera angle continuity

- Improve Coherence: Makes audience feel it's continuous footage, not stitched

Consequences Without Reference Frames:

Segment 1: Red jersey player, short hair, sunny day

Segment 2 (no reference): May become blue jersey player, long hair, cloudy ❌

Segment 2 (with reference): Maintains red jersey player, short hair, sunny day ✅

Real Case: In Bilibili Chinese football goal compilations made without reference frames, players would change appearance in each segment, leaving viewers wondering, "Is this the same person?"

Recommend testing the difference with and without reference frames on APIYI apiyi.com platform. You'll find reference frames significantly improve consistency.

Q2: How to ensure multi-segment stitching coherence?

Three Guarantee Measures:

1. Coherent Prompt Design:

- Each segment starts with "Continue from the previous frame"

- Describe previous segment's ending state

- Specify upcoming actions

2. Reference Frame Technique:

- Use last frame of previous segment as reference

- Ensures visual continuity

3. Post-Editing Optimization:

- Match cutting: Cut at continuous action points

- Transition effects: Fades, dissolves

- Music cover: Use music rhythm to mask frame jumps

Professional Advice: Coherence relies primarily on prompt design and reference frames; post-editing is icing on the cake. If the prompts aren't well designed up front, post-production can't fix the result. Spend more time optimizing prompts to ensure logical coherence in each segment.

You can learn from successful Bilibili entertainment creator cases on designing coherent storyboards.

Q3: API or Web Pro—which is better for long videos?

API Solution Advantages:

- ✅ Can automate batch generation

- ✅ Programmable control, high flexibility

- ✅ Predictable cost (pay-per-use)

- ✅ Suitable for creating multiple long videos

API Solution Disadvantages:

- ❌ Requires programming skills

- ❌ Segments limited to 15 seconds

- ❌ Need to handle frame extraction yourself

Web Pro Advantages:

- ✅ No programming needed, GUI operation

- ✅ Segments up to 25 seconds (10 seconds more than API)

- ✅ Can preview effects in real-time

Web Pro Disadvantages:

- ❌ Requires $200/month membership (or third-party recharge)

- ❌ Manual operation, low efficiency

- ❌ Not suitable for batch generation

Selection Recommendation:

- Occasional 1-2 long videos: Web Pro (upgrade via ai.daishengji.com)

- Frequent production, batch generation: API solution (APIYI apiyi.com)

- Limited budget: API solution, pay-as-you-go more flexible

For Bilibili entertainment creators requiring frequent output, strongly recommend API solution.
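To make the budget comparison concrete, the sketch below estimates how many API segments per month cost as much as the $200 Pro membership, using the per-segment prices quoted earlier in this article. `break_even_segments` is an illustrative name, and the comparison is rough: it ignores that Web Pro segments can run 25 seconds while API segments stop at 15.

```python
import math


def break_even_segments(monthly_fee, cost_per_segment):
    """Smallest number of API segments whose total cost reaches the monthly fee."""
    return math.ceil(monthly_fee / cost_per_segment)


print(break_even_segments(200, 1.5))  # 134
print(break_even_segments(200, 1.2))  # 167
```

Under these assumptions, a creator generating fewer than roughly 130 segments a month pays less with the pay-per-use API than with the membership.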

Q4: How to avoid obvious stitching marks?

Core Techniques:

1. Segment at Natural Transition Points:

- ✅ Shot changes: From close-up to wide shot

- ✅ Scene transitions: From indoor to outdoor

- ✅ Action completion: After shooting action completes

- ❌ Avoid cutting mid-action

2. Use High-Quality Reference Frames:

- Ensure last frame is clear, not blurry

- Avoid motion blur from fast movement

- Can extract 2-3 frames before last (clearer)

3. Post-Transition Handling:

- Add 0.3-0.5 second fade transitions

- Use match cutting techniques

- Adjust cut points to find most natural transitions

4. Music and Sound Effects:

- Add sound effects at cut points (like "click")

- Use continuous background music

- Music rhythm can mask frame jumps

Real Case: In Bilibili Chinese football goal compilations, creators cut at the "ball in net" moment of each goal. This is a natural climax where audience attention is already captured, so viewers don't notice the edit point.

Recommend testing different cut points and transition effects in CapCut first to find the most natural combination.

📚 Further Reading

🛠️ Real-World Case Analysis

Bilibili Entertainment Creation: Chinese Football Comedy Goal Compilation

These videos typically run 1-3 minutes and contain multiple funny shots and creative editing:

Production Process:

- Planning: Design 10-15 funny goal scenarios

- Segment Generation: Each scenario 10-15 seconds, batch generate using API

- Reference Maintenance: Ensure player and scene consistency

- Creative Editing: Add funny sound effects, slow motion, special effects

- Music: Use upbeat background music

Key Techniques:

- Cut at "climax moments" (ball in net) for each goal

- Use exaggerated celebration moves for entertainment

- Add bullet comments and effects to enhance impact

📖 Learning Recommendation: Suggest starting with simple 3-5 segment stitching practice, then try complex long videos after mastering basics. You can get testing credits at APIYI apiyi.com to experience complete multi-segment generation and stitching workflow.

🔗 Related Resources

| Resource Type | Recommended Content | Access Method |
|---|---|---|
| API Documentation | Sora 2 API Call Guide | https://docs.apiyi.com |
| Membership Recharge | ChatGPT Pro Upgrade | https://ai.daishengji.com |
| API Platform | Sora 2 API Access | https://api.apiyi.com |
| Technical Support | APIYI Help Center | https://help.apiyi.com |

In-Depth Learning Recommendation: Keep up with Sora 2 technical updates. We recommend visiting APIYI docs.apiyi.com regularly for the latest API features and optimizations. The platform is quick to sync official OpenAI updates, so you can use the latest version.

🎯 Summary

Although Sora 2 limits the duration of a single generation, you can still produce professional long videos of over one minute by combining multi-segment stitching, reference frame consistency, coherent prompts, and post-editing optimization.

Key Takeaways:

- Multi-Segment Stitching: Split long video into multiple 10-15 second segments, generate sequentially

- Reference Frame Technique: Use last frame of previous segment as reference to maintain character and scene consistency

- Prompt Design: Each segment includes "Continue from…", ensuring semantic coherence

- Post-Optimization: Use match cutting, fades and other techniques for seamless stitching

- Cost Control: API solution more flexible than Web Pro, suitable for batch generation

Recommendations for Practice:

- Create good storyboard first, plan each segment's content and duration

- Use high-quality reference frames to ensure visual consistency across segments

- Optimize prompts for logical story coherence in each segment

- Focus on natural transitions in post-editing, avoid abrupt stitching

Final Recommendation: For creators needing frequent long video production, we strongly recommend using APIYI apiyi.com to access Sora 2 API. The platform not only provides stable API service and favorable pricing, but also comprehensive technical documentation and support, significantly improving long video production efficiency and quality. For individual users, consider upgrading ChatGPT Pro via ai.daishengji.com for longer 25-second single segments.

📝 Author Bio: Veteran video creator specializing in AI video generation technology research. Regularly shares Sora 2 usage tips and long video production experience. More technical materials available at APIYI apiyi.com tech community.

🔔 Technical Exchange: Welcome to discuss Sora 2 long video production techniques in comments and share your creative experience. For technical support, contact our technical team through APIYI apiyi.com.