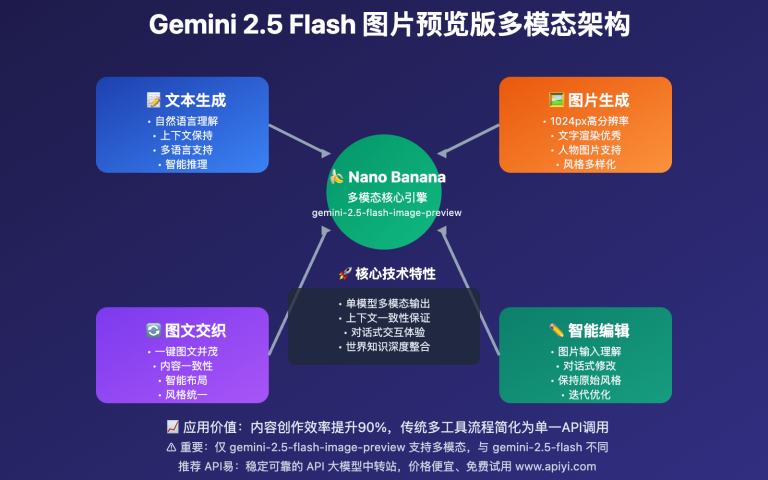

Author's Note: A comprehensive comparison of Gemini 2.5 Flash and Claude Haiku 4.5 across 7 key dimensions, including performance, pricing, speed, and reasoning capabilities, to help developers choose the right small model.

Among small high-performance models, Gemini 2.5 Flash and Claude Haiku 4.5 are two of the most noteworthy releases of 2025. The former comes from Google and excels at controllable reasoning and tool calling; the latter comes from Anthropic and focuses on near-frontier performance at low cost.

Through in-depth analysis across 7 dimensions including performance benchmarks, pricing strategies, speed comparisons, and ecosystem considerations, this article will help you find the small model that best fits your project. Whether it's real-time chat, coding assistance, or automation tasks, you'll find clear selection strategies here.

Core Value: After reading this article, you'll understand where Gemini Flash and Haiku 4.5 each have the advantage, so you can avoid the delays and extra costs that come from picking the wrong model.

Background Introduction: Gemini 2.5 Flash and Claude Haiku 4.5

Gemini 2.5 Flash and Claude Haiku 4.5 are both small high-performance models whose current versions arrived in autumn 2025, and both reflect the AI industry's "small but refined" trend.

Gemini 2.5 Flash is a small model from Google DeepMind's Gemini 2.5 series, with its latest preview released in September 2025. Its core positioning is "a cost-effective model with controllable reasoning capabilities." The latest version (Preview 09-2025) scores 54% on SWE-bench Verified, supports reasoning budget control (0-24576 tokens), and reaches an output speed of 887 tokens/second.

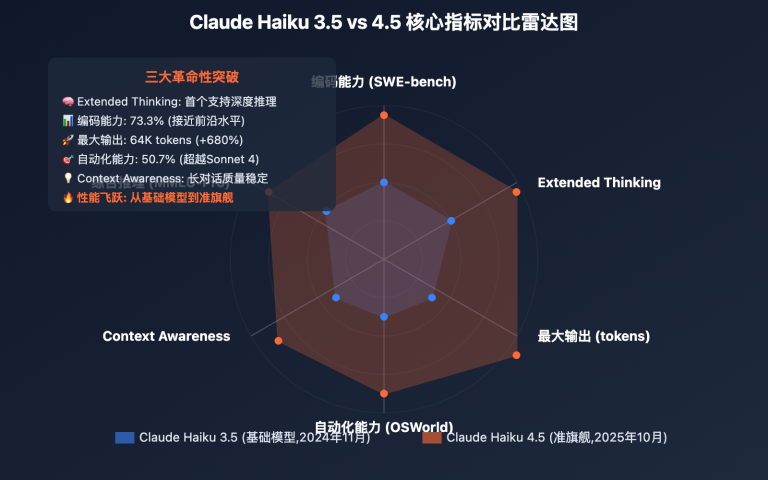

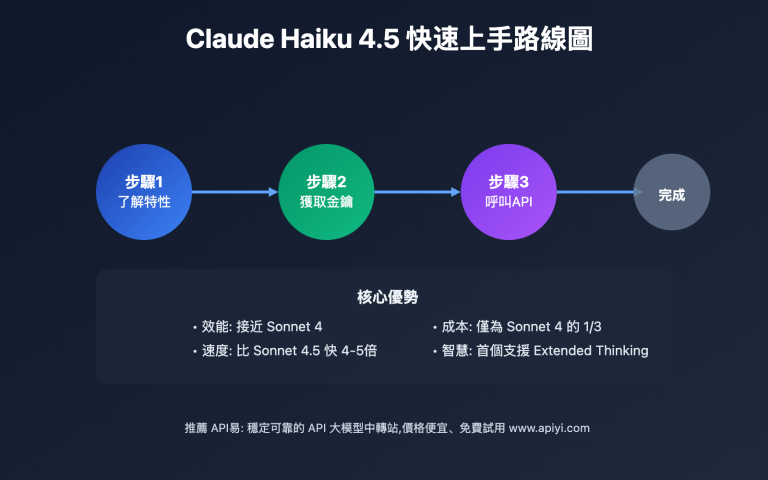

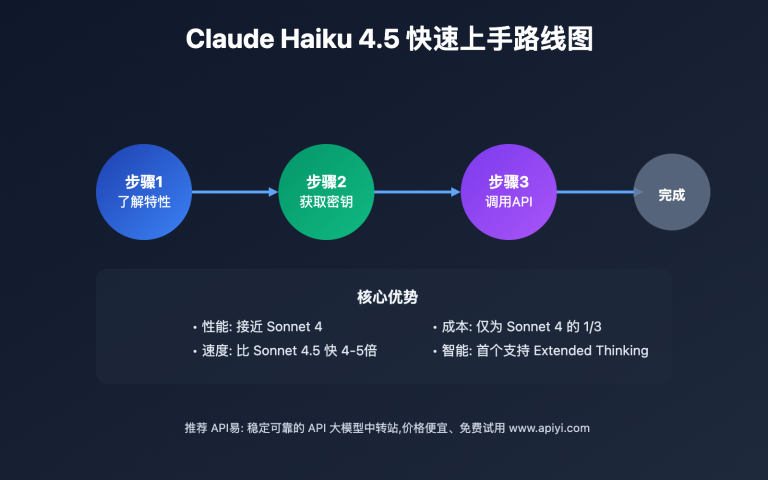

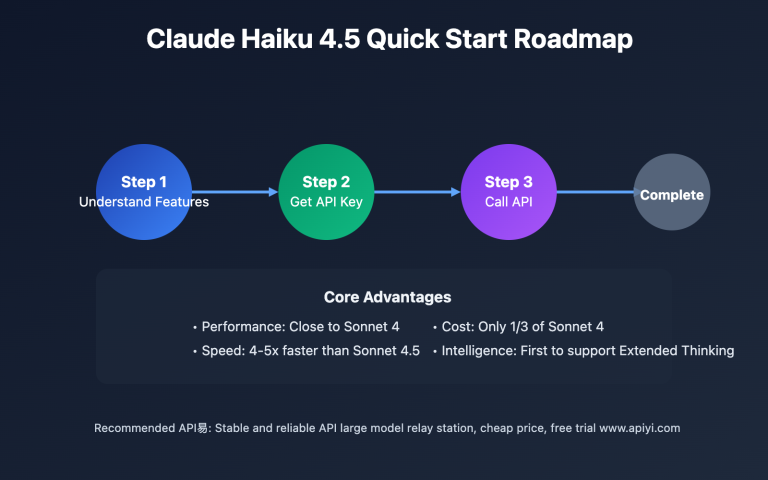

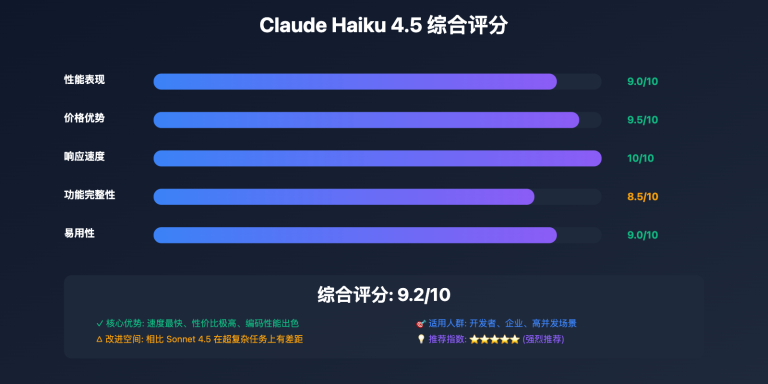

Claude Haiku 4.5 is a small model from Anthropic's Claude 4 series released on October 15, 2025, officially positioned as "the fastest near-frontier intelligent model." Its key breakthrough is Sonnet 4-level coding performance (73.3% on SWE-bench Verified) at one-third the cost of Sonnet 4.5 and 4-5 times its speed.

Market Positioning Differences:

- Gemini Flash: Google ecosystem, emphasizing reasoning capabilities and tool calling, lower price

- Haiku 4.5: Anthropic independent ecosystem, emphasizing near-frontier performance, balanced speed and cost

Together, these two models show that small models can now challenge previous flagship models, giving developers more cost-effective choices.

Technical Specifications Comparison: Gemini 2.5 Flash vs Claude Haiku 4.5

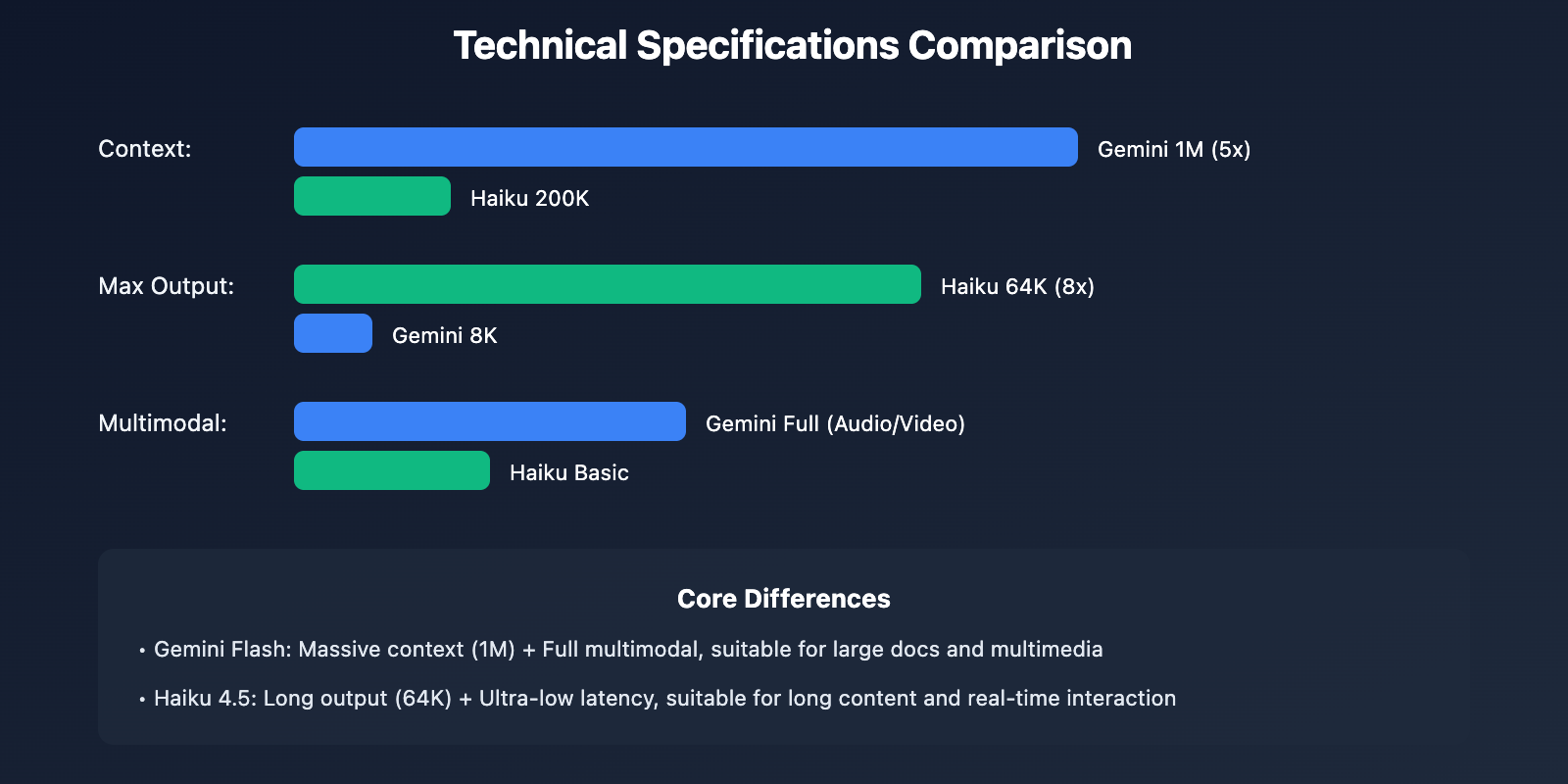

Here's a detailed comparison of Gemini 2.5 Flash and Claude Haiku 4.5 in core technical specifications:

| Technical Specs | Gemini 2.5 Flash | Claude Haiku 4.5 | Advantage Analysis |
|---|---|---|---|
| Model ID | gemini-2.5-flash | claude-haiku-4-5-20251001 | – |
| Context Window | 1,000,000 tokens | 200,000 tokens | Gemini 5x advantage |
| Max Output | 8,192 tokens | 64,000 tokens | Haiku 8x advantage |
| Output Speed | 887 tokens/sec | Not disclosed | Gemini data complete |
| First Token Latency | Not disclosed | Ultra-low (millisecond) | Haiku faster response |
| Training Cutoff | September 2025 | July 2025 | Gemini more recent |
| Multimodal | Text+Image+Audio+Video | Text+Image | Gemini more comprehensive |
| Reasoning | Controllable (0-24576 tokens) | Extended Thinking | Both support |
| Tool Calling | Parallel tools+Advanced | Parallel tools+Full | Both powerful |

🔥 Technical Specification Highlights Analysis

Gemini 2.5 Flash Advantages

- Massive Context Window (1M tokens)
  - Can process entire books or massive codebases
  - Suitable for tasks requiring extensive context
  - 5x Haiku 4.5's context capability
- Comprehensive Multimodal Support
  - Supports text, image, audio, video
  - Haiku 4.5 only supports text and image
  - Clear advantage in multimedia processing scenarios
- Controllable Reasoning Capabilities
  - Supports 0-24576 tokens reasoning budget control
  - Developers can precisely control reasoning depth and cost
  - Higher flexibility
- Clear Output Speed
  - Officially announced 887 tokens/second
  - 40% improvement over previous version
  - One of the "fastest proprietary models"

Claude Haiku 4.5 Advantages

- Larger Output Capacity (64K tokens)
  - Can generate ultra-long documents and code
  - 8x Gemini Flash's output capability
  - Suitable for long-output scenarios
- Ultra-low First Token Latency
  - Officially emphasizes "ultra-low latency"
  - Better experience in real-time interaction scenarios
  - Suitable for chat and coding assistants
- Complete Feature Support
  - First Haiku supporting Extended Thinking
  - First Haiku supporting Context Awareness
  - Features consistent with flagship models

Difference Summary

- Gemini Flash: Better for scenarios requiring large context and multimodal

- Haiku 4.5: Better for scenarios requiring long output and low latency

Performance Benchmark Comparison: Gemini 2.5 Flash vs Claude Haiku 4.5

The performance differences between Gemini 2.5 Flash and Claude Haiku 4.5 directly impact model selection. Let's compare their actual capabilities through authoritative benchmark tests.

📊 Core Performance Benchmark Comparison

| Benchmark | Gemini 2.5 Flash | Claude Haiku 4.5 | Performance Analysis |

|---|---|---|---|

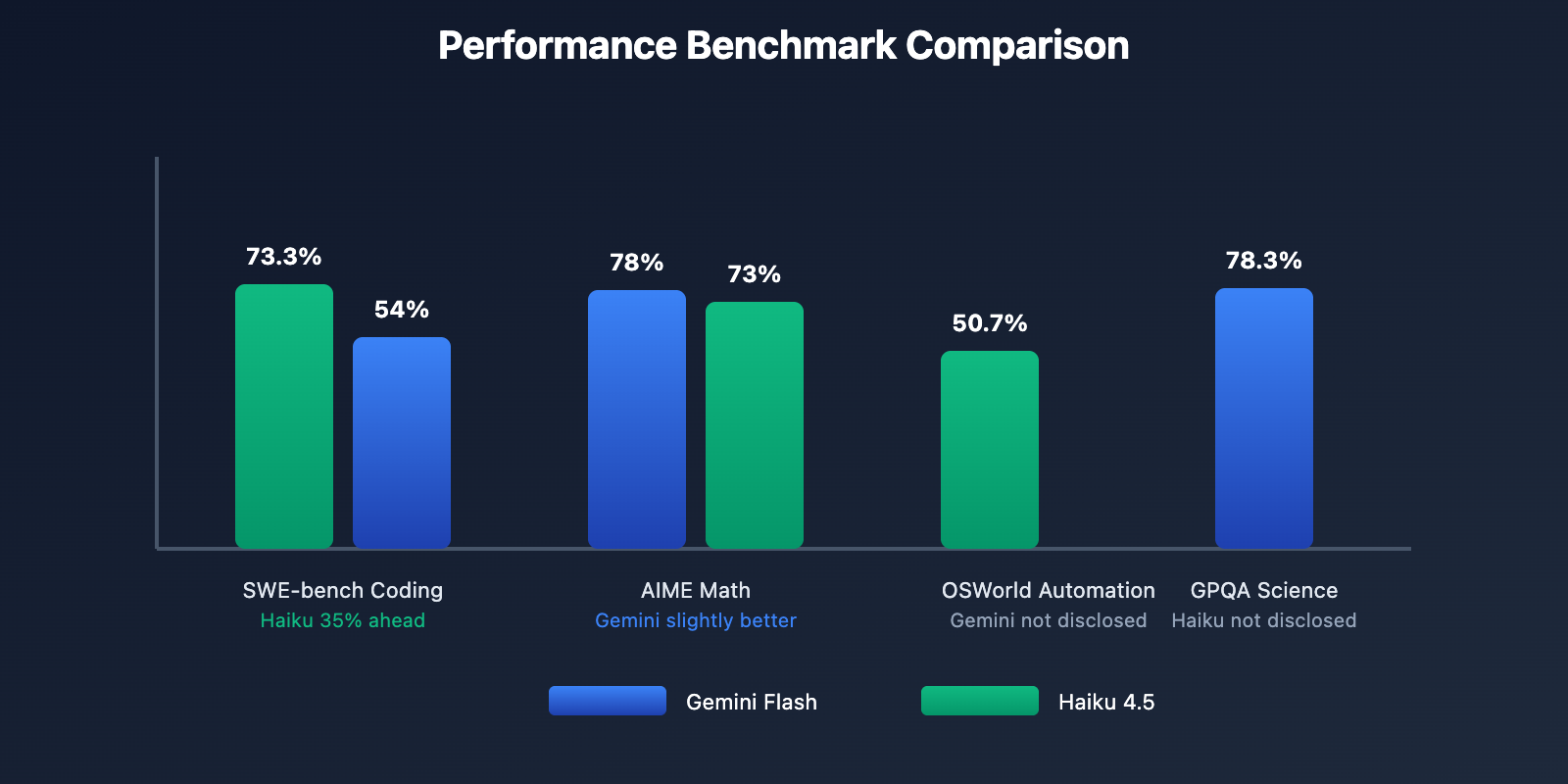

| SWE-bench Verified (Coding) | 54% | 73.3% | ⚡ Haiku 35% ahead |

| OSWorld (Computer Use) | Not disclosed | 50.7% | Haiku data complete |

| Terminal-Bench (Terminal) | Not disclosed | 41% | Haiku data complete |

| GPQA Diamond (Science) | 78.3% | Not disclosed | Gemini data complete |

| AIME 2025 (Math) | 78.0% | ~73% | Gemini slightly better |

| Humanity's Last Exam | 12.1% | Not disclosed | Gemini data complete |

| Reasoning Mode Score (AI Index) | 54 points | Not disclosed | Gemini has reasoning score |

| Non-reasoning Mode Score (AI Index) | 47 points | Not disclosed | Gemini has score |

🎯 In-depth Performance Analysis

1. Coding Capability Comparison (SWE-bench Verified)

This is the most critical performance indicator for developers: it tests the model's ability to resolve real GitHub issues.

Claude Haiku 4.5: 73.3%

- Significantly ahead in coding tasks

- 19.3 percentage points higher than Gemini Flash (about 35% in relative terms)

- Reaches previous flagship Sonnet 4 level

- Suitable for complex programming tasks

Gemini 2.5 Flash: 54%

- 5.1-percentage-point improvement over previous version (48.9% → 54%)

- Good performance among small models

- Still has clear gap with Haiku 4.5

Conclusion: Haiku 4.5 is the first choice for coding scenarios, with obvious performance advantages.
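The "35% ahead" figure is a relative lead, not a difference in percentage points, and the two are easy to confuse. A minimal sketch of both calculations, using only the SWE-bench Verified scores quoted above (the function name `score_gap` is our own, for illustration):

```python
def score_gap(leader: float, trailer: float) -> tuple[float, float]:
    """Return (gap in percentage points, relative lead in percent)."""
    point_gap = leader - trailer                      # absolute difference
    relative_lead = (leader / trailer - 1.0) * 100.0  # lead relative to the lower score
    return point_gap, relative_lead


# Scores quoted in this article: Haiku 4.5 = 73.3%, Gemini 2.5 Flash = 54%
points, relative = score_gap(73.3, 54.0)
print(f"{points:.1f} percentage points, {relative:.1f}% relative lead")
```

The relative lead works out to roughly 35.7%, which this article rounds down to 35%.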

2. Mathematical Reasoning Comparison (AIME 2025)

Gemini 2.5 Flash: 78.0%

- Excellent performance in math competition problems

- Slightly better than Haiku 4.5

- Suitable for scientific computing and mathematical reasoning

Claude Haiku 4.5: ~73%

- Also performs well

- Slightly inferior to Gemini Flash

- But gap is small (about 5 percentage points)

Conclusion: In mathematical reasoning scenarios, Gemini Flash has slight advantage, but gap is small.

3. Scientific Reasoning Comparison (GPQA Diamond)

Gemini 2.5 Flash: 78.3%

- Excellent performance in scientific reasoning problems

- Haiku 4.5 hasn't disclosed this data

- Suitable for research and professional fields

Claude Haiku 4.5: Not disclosed

- Cannot directly compare

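Conclusion: Only Gemini Flash has a published GPQA Diamond score, so no direct comparison is possible; on the available data, Gemini Flash is the better-documented choice for scientific reasoning.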

4. Computer Use Capability (OSWorld)

Claude Haiku 4.5: 50.7%

- Excellent performance in desktop automation tasks

- Even surpasses the previous flagship Sonnet 4 (42.2%)

- Suitable for RPA and automation testing

Gemini 2.5 Flash: Not disclosed

- Cannot directly compare

Conclusion: Only Haiku 4.5 has a published OSWorld score, which makes it the better-documented choice for automation scenarios.

5. Reasoning Capability Comparison

Gemini 2.5 Flash:

- Reasoning Mode: 54 points (AI Intelligence Index)

- Non-reasoning Mode: 47 points

- Reasoning Budget: 0-24576 tokens controllable

- More flexible and controllable reasoning

Claude Haiku 4.5:

- Supports Extended Thinking

- First Haiku model supporting this feature

- Specific scores not disclosed

Conclusion: Gemini Flash is more transparent in reasoning capabilities, with clear scores and controllable budget.
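To make the "controllable budget" idea concrete, here is a minimal sketch of how an application might pick a reasoning budget per request and keep it inside the 0-24576 range quoted above. The preset values and the function names are our own assumptions for illustration; the parameter that actually carries the budget depends on the SDK or gateway you use.

```python
MIN_BUDGET = 0
MAX_BUDGET = 24_576  # upper bound quoted in this article for Gemini 2.5 Flash

# Hypothetical presets: tune these against your own latency and cost targets.
PRESETS = {"simple": 0, "moderate": 4_096, "hard": MAX_BUDGET}
DEFAULT_BUDGET = 1_024  # assumed fallback for unknown task types


def clamp_thinking_budget(requested: int) -> int:
    """Force a requested budget into the supported range."""
    return max(MIN_BUDGET, min(MAX_BUDGET, requested))


def budget_for_task(difficulty: str) -> int:
    """Map a coarse difficulty label to a reasoning budget in tokens."""
    return clamp_thinking_budget(PRESETS.get(difficulty, DEFAULT_BUDGET))


for label in ("simple", "moderate", "hard", "unknown"):
    print(label, budget_for_task(label))
```

A budget of 0 turns reasoning off for cheap, latency-sensitive calls, while the maximum is reserved for the hardest requests. Since reasoning tokens are billed as output, the budget is effectively a per-request cost cap.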

💡 Performance Selection Recommendations

Scenarios for choosing Haiku 4.5:

- ✅ Coding tasks (73.3% vs 54%, a 19.3-point lead)

- ✅ Desktop and browser automation

- ✅ Terminal automation tasks

- ✅ Scenarios requiring long output (64K tokens)

Scenarios for choosing Gemini Flash:

- ✅ Mathematical and scientific reasoning (78%+)

- ✅ Tasks requiring large context (1M tokens)

- ✅ Multimodal processing (Audio+Video)

- ✅ Scenarios requiring controllable reasoning

🎯 Testing Recommendation: Both models have their strengths, selection should be based on specific application scenarios. We recommend testing through the API易 apiyi.com platform, which supports both Gemini and Claude series models, facilitating quick comparison and switching to help you make the most suitable choice.

Pricing and Cost Comparison: Gemini 2.5 Flash vs Claude Haiku 4.5

The pricing differences between Gemini 2.5 Flash and Claude Haiku 4.5 have huge cost implications for large-scale applications. Gemini Flash is cheaper, but Haiku 4.5 can be more cost-controllable in certain optimization scenarios.

💰 Official Pricing Comparison

| Pricing Type | Gemini 2.5 Flash | Claude Haiku 4.5 | Cost Difference |
|---|---|---|---|
| Input Pricing | $0.30 / million tokens | $1.00 / million tokens | Gemini 70% cheaper |
| Output Pricing | $2.50 / million tokens | $5.00 / million tokens | Gemini 50% cheaper |
| Prompt Caching | Not supported | Save 90% | Haiku stronger optimization |
| Message Batches | Not supported | Save 50% | Haiku stronger optimization |
| Reasoning Cost | Included in output | Included in output | Same |

📊 Actual Cost Calculation

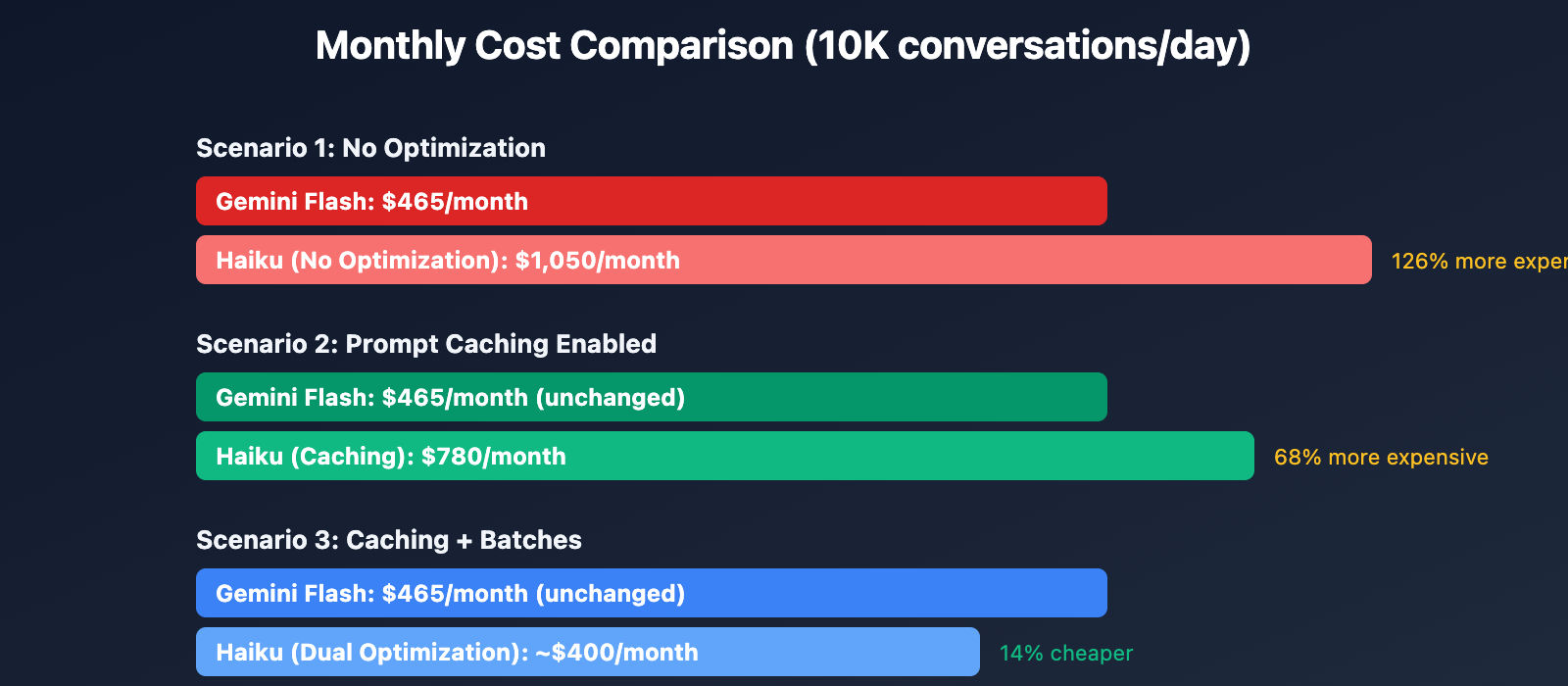

Scenario 1: Medium-scale Chat System

Assumption: Process 10,000 conversations daily, average 1,000 tokens input, 500 tokens output per conversation

Gemini Flash Monthly Cost:

- Input: 10,000 × 30 × 1,000 / 1,000,000 × $0.30 = $90

- Output: 10,000 × 30 × 500 / 1,000,000 × $2.50 = $375

- Total: $465/month

Haiku 4.5 Monthly Cost (No Optimization):

- Input: 10,000 × 30 × 1,000 / 1,000,000 × $1.00 = $300

- Output: 10,000 × 30 × 500 / 1,000,000 × $5.00 = $750

- Total: $1,050/month

Haiku 4.5 Monthly Cost (Prompt Caching Enabled):

- Input: $300 × 10% = $30 (best case: every input token is a cache hit at 90% off; cache-write costs are ignored)

- Output: $750 (unchanged)

- Total: $780/month

Comparison Conclusion:

- No optimization: Gemini Flash cheaper by $585/month (55.7%)

- Cache enabled: Gemini Flash cheaper by $315/month (40.4%)
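The arithmetic for Scenario 1 can be reproduced with a small helper, which also makes it easy to plug in your own traffic numbers. This is a sketch under the same assumptions as the text: a 30-day month, list prices per million tokens, and, for the cached case, every input token billed at the 90%-off cached rate. The function and parameter names are ours.

```python
def monthly_cost(requests_per_day: int, input_tokens: int, output_tokens: int,
                 input_price: float, output_price: float,
                 days: int = 30, input_discount: float = 0.0) -> float:
    """Monthly cost in USD; prices are per million tokens."""
    monthly_requests = requests_per_day * days
    input_cost = (monthly_requests * input_tokens / 1_000_000
                  * input_price * (1.0 - input_discount))
    output_cost = monthly_requests * output_tokens / 1_000_000 * output_price
    return input_cost + output_cost


# Scenario 1: 10,000 conversations/day, 1,000 input + 500 output tokens each
gemini = monthly_cost(10_000, 1_000, 500, 0.30, 2.50)
haiku = monthly_cost(10_000, 1_000, 500, 1.00, 5.00)
haiku_cached = monthly_cost(10_000, 1_000, 500, 1.00, 5.00, input_discount=0.90)

for name, cost in [("Gemini Flash", gemini), ("Haiku 4.5", haiku),
                   ("Haiku 4.5 + caching", haiku_cached)]:
    print(f"{name}: ${cost:,.2f}/month")
```

In practice only the repeated part of a prompt (for example a fixed system prompt) is served from cache, so a real `input_discount` will be lower than 0.90.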

Scenario 2: Large-scale Coding Assistant

Assumption: 1,000 coding requests daily, average 2,000 tokens input, 1,500 tokens output

Gemini Flash Monthly Cost:

- Input: 1,000 × 30 × 2,000 / 1,000,000 × $0.30 = $18

- Output: 1,000 × 30 × 1,500 / 1,000,000 × $2.50 = $112.5

- Total: $130.5/month

Haiku 4.5 Monthly Cost (No Optimization):

- Input: 1,000 × 30 × 2,000 / 1,000,000 × $1.00 = $60

- Output: 1,000 × 30 × 1,500 / 1,000,000 × $5.00 = $225

- Total: $285/month

Haiku 4.5 Monthly Cost (Prompt Caching Enabled):

- Input: $60 × 10% = $6 (best case: every input token is a cache hit at 90% off; cache-write costs are ignored)

- Output: $225 (unchanged)

- Total: $231/month

Comparison Conclusion:

- No optimization: Gemini Flash cheaper by $154.5/month (54.2%)

- Cache enabled: Gemini Flash cheaper by $100.5/month (43.5%)

🚀 Cost Optimization Strategies

Gemini Flash Advantages

- Lowest Base Price
  - 70% cheaper input, 50% cheaper output
  - Significant cost savings without additional optimization
  - Suitable for small-medium scale or budget-constrained projects
- Suitable for High Input Scenarios
  - 1M context window + low input cost
  - Extremely low cost for processing large documents
  - Input cost only 30% of Haiku's

Haiku 4.5 Advantages

- Prompt Caching Saves 90%
  - Dramatic cost reduction in fixed system prompt scenarios
  - Cached input: $1.00 → $0.10
  - Cost approaches Gemini in cache-optimized scenarios
- Message Batches Saves 50%
  - Non-real-time tasks can use batch processing
  - Input: $1.00 → $0.50
  - Output: $5.00 → $2.50
- Combining Both Optimizations
  - Cached input in a batch job can drop by up to 95% ($1.00 → $0.05), assuming the two discounts stack; output savings stay at 50%
  - With both optimizations applied, Haiku may be cheaper than Gemini's list price
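The combined effect of the two discounts can be sketched as follows. It assumes that the caching and batch discounts multiply, that every input token is a cache hit, and that cache-write costs are negligible. These are best-case simplifications of ours, not published guarantees.

```python
HAIKU_INPUT, HAIKU_OUTPUT = 1.00, 5.00  # USD per million tokens, from the table above
CACHE_READ_FACTOR = 0.10                # cached input billed at 10% (the "save 90%" case)
BATCH_FACTOR = 0.50                     # batch requests billed at 50%


def effective_prices(use_cache: bool, use_batch: bool) -> tuple[float, float]:
    """Return best-case (input, output) prices per million tokens."""
    input_price, output_price = HAIKU_INPUT, HAIKU_OUTPUT
    if use_cache:
        input_price *= CACHE_READ_FACTOR  # caching only affects input tokens
    if use_batch:
        input_price *= BATCH_FACTOR       # batching affects both directions
        output_price *= BATCH_FACTOR
    return input_price, output_price


inp, out = effective_prices(use_cache=True, use_batch=True)
# Scenario 1 volume: 300M input tokens and 150M output tokens per month
total = 300 * inp + 150 * out
print(f"input ${inp:.2f}/M, output ${out:.2f}/M, monthly total ${total:.2f}")
```

Under these assumptions the Scenario 1 workload comes to about $390 per month, below Gemini Flash's $465. Batch processing is not suitable for real-time chat, though, so this figure only applies to the share of traffic that can wait for asynchronous results.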

💡 Cost Selection Recommendations

Scenarios prioritizing Gemini Flash:

- ✅ Scenarios unable to use Prompt Caching

- ✅ Real-time interactive applications (cannot use Batches)

- ✅ High input, low output scenarios

- ✅ Need to process large documents (1M context)

Scenarios prioritizing Haiku 4.5:

- ✅ Fixed system prompts (can use Prompt Caching)

- ✅ Non-real-time batch processing (can use Batches)

- ✅ High output scenarios (64K output capability)

- ✅ Need optimal cost control (combining both optimizations)

💰 Cost Optimization Recommendation: For projects with cost budget considerations, we recommend price comparison and cost estimation through API易 apiyi.com. The platform provides transparent pricing systems and usage statistics tools, supporting both Gemini and Claude series models, helping you better control and optimize API call costs.

Speed and Ecosystem Comparison: Gemini 2.5 Flash vs Claude Haiku 4.5

Gemini 2.5 Flash and Claude Haiku 4.5 differ in both speed characteristics and ecosystem, which affects the development experience and the difficulty of integration.

⚡ Speed Performance Comparison

| Speed Metrics | Gemini 2.5 Flash | Claude Haiku 4.5 | Speed Analysis |
|---|---|---|---|
| Output Speed | 887 tokens/sec | Not disclosed (estimated faster) | Both very fast |
| vs Previous Version | 40% improvement | 4-5x faster than Sonnet 4.5 | Both have major improvements |
| First Token Latency | Not emphasized | Ultra-low (officially emphasized) | Haiku likely better |
| Concurrent Processing | High | High | Both support high concurrency |
| Speed Positioning | "Fastest proprietary model" | "Fastest near-frontier model" | Both emphasize speed |

🌐 Ecosystem Comparison

Google Ecosystem (Gemini Flash)

Advantages:

- Deep Google Cloud Integration
  - Seamless integration with Google Cloud Platform
  - Direct use of Google Cloud authentication
  - Integration with other Google services (BigQuery/Cloud Storage, etc.)
- Diverse Access Methods
  - Google AI Studio (free trial)
  - Vertex AI (enterprise)
  - Direct API (developers)
- Rich Tool Ecosystem
  - Gemini CLI
  - Official Python/Node.js SDK
  - Native LangChain/LlamaIndex support
- Free Quota
  - Google AI Studio provides free trial
  - Suitable for small-scale testing and personal projects

Disadvantages:

- Restricted access in mainland China

- Requires VPN or proxy services

Anthropic Ecosystem (Haiku 4.5)

Advantages:

- Multi-platform Support
  - Claude API (official)
  - AWS Bedrock (Amazon)
  - Google Cloud Vertex AI (Google)
  - Widespread third-party proxy platform support
- Developer Friendly
  - Claude.ai web version (free trial)
  - Mobile apps (iOS/Android)
  - Unified API interface
- Enterprise Features
  - Prompt Caching
  - Message Batches API
  - Priority scheduling
  - Context Awareness
- Mainland China Availability
  - Accessible through third-party proxy platforms
  - Widespread support from aggregation platforms like API易

Disadvantages:

- Ecosystem relatively smaller than Google's

- Fewer official tools

💡 Speed and Ecosystem Selection Recommendations

Scenarios choosing Gemini Flash:

- ✅ Already using Google Cloud services

- ✅ Need integration with Google ecosystem

- ✅ Overseas projects or have VPN access

- ✅ Need free trial quota

Scenarios choosing Haiku 4.5:

- ✅ Need ultra-low first token latency

- ✅ Using AWS or multi-cloud architecture

- ✅ Mainland China projects (through proxy)

- ✅ Need enterprise optimization features

🛠️ Platform Selection Recommendation: For developers in mainland China, we recommend using API易 apiyi.com as the main API aggregation platform. It supports both Gemini and Claude series models, provides unified interface management, real-time monitoring, and cost analysis features, usable without VPN, making it an ideal choice for developers.

Application Scenario Comparison: Gemini 2.5 Flash vs Claude Haiku 4.5

Because of their different performance characteristics, Gemini 2.5 Flash and Claude Haiku 4.5 suit clearly different application scenarios. Choosing the right model lets a project achieve more with less effort.

🎯 Best Application Scenario Comparison

| Application Scenario | Gemini 2.5 Flash | Claude Haiku 4.5 | Recommended Choice |
|---|---|---|---|
| 🤖 Smart Customer Service | ⭐⭐⭐⭐ (Low cost) | ⭐⭐⭐⭐⭐ (Low latency) | Haiku 4.5 |
| 💻 Coding Assistant | ⭐⭐⭐ (Average performance) | ⭐⭐⭐⭐⭐ (73.3%) | Haiku 4.5 |
| 📚 Document Analysis | ⭐⭐⭐⭐⭐ (1M context) | ⭐⭐⭐ (200K context) | Gemini Flash |
| 🎥 Multimedia Processing | ⭐⭐⭐⭐⭐ (Full support) | ⭐⭐ (Text+Image only) | Gemini Flash |
| 🧮 Mathematical Reasoning | ⭐⭐⭐⭐⭐ (78%) | ⭐⭐⭐⭐ (73%) | Gemini Flash |
| 🎨 Content Creation | ⭐⭐⭐⭐ (Fast+cheap) | ⭐⭐⭐⭐⭐ (High quality) | Haiku 4.5 |
| 🤝 Multi-agent Systems | ⭐⭐⭐⭐ (Low cost) | ⭐⭐⭐⭐⭐ (Officially recommended) | Haiku 4.5 |
| 🎯 Desktop Automation | ⭐⭐⭐ (No data) | ⭐⭐⭐⭐⭐ (50.7%) | Haiku 4.5 |
| 🔬 Scientific Research | ⭐⭐⭐⭐⭐ (78.3%) | ⭐⭐⭐⭐ (No data) | Gemini Flash |
| 💰 Budget Constraints | ⭐⭐⭐⭐⭐ (Cheapest) | ⭐⭐⭐⭐ (Cheap after optimization) | Gemini Flash |

🔥 Detailed Scenario Analysis

Scenario 1: Smart Customer Service System

Why choose Haiku 4.5:

- Ultra-low first token latency, better user experience

- Strong general capability (73.3% on SWE-bench Verified) is sufficient for complex dialogue logic

- Cost controllable after enabling Prompt Caching

Why not Gemini Flash:

- Although cheaper, latency might be slightly higher

- 1M context is excessive for customer service scenarios

Recommendation: Haiku 4.5

Scenario 2: Coding Assistant

Why choose Haiku 4.5:

- 73.3% SWE-bench, far exceeding Gemini's 54%

- 64K output can generate ultra-long code

- Fast speed, doesn't interrupt development flow

Why not Gemini Flash:

- Coding performance clearly weaker than Haiku

- The 19.3-point gap (about 35% relative) is obvious in actual development

Recommendation: Haiku 4.5

Scenario 3: Large Document Analysis

Why choose Gemini Flash:

- 1M context window, can process entire books

- Input cost only $0.30/M, extremely low cost for processing large documents

- 5x Haiku's context capability

Why not Haiku 4.5:

- 200K context might be insufficient

- Input cost is 3.3x Gemini's

Recommendation: Gemini Flash

Scenario 4: Multimedia Processing

Why choose Gemini Flash:

- Supports text, image, audio, video

- Comprehensive multimodal capabilities

- Suitable for video analysis, audio transcription scenarios

Why not Haiku 4.5:

- Only supports text and image

- Cannot process audio and video

Recommendation: Gemini Flash

Scenario 5: Multi-agent Systems

Why choose Haiku 4.5:

- Officially recommended for Sub-Agent architecture

- Extended Thinking supports complex reasoning

- Fast speed, suitable for agent collaboration

Why not Gemini Flash:

- Although cheaper, performance slightly weaker

- Might become bottleneck in multi-agent collaboration

Recommendation: Haiku 4.5

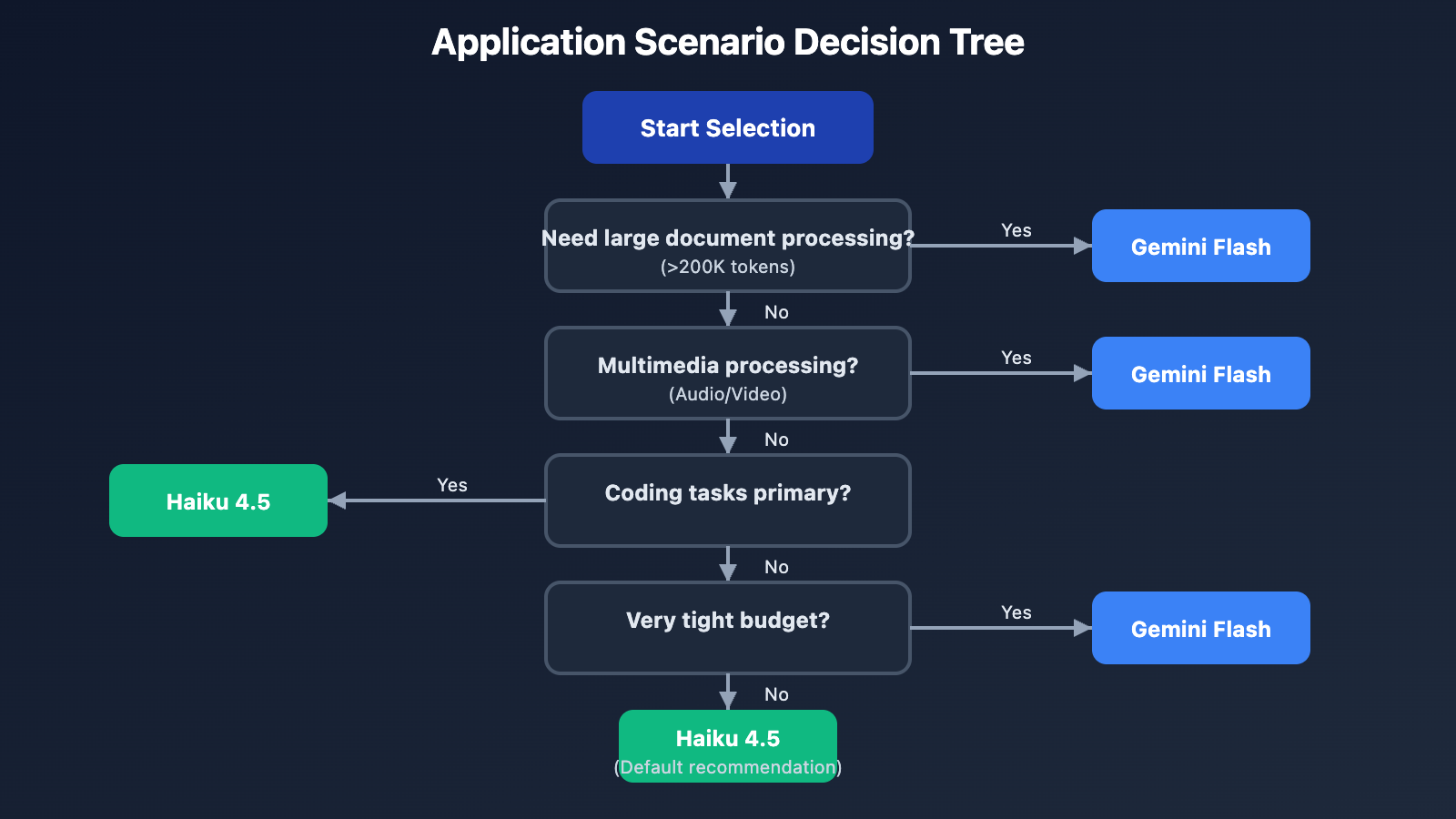

💡 Scenario Selection Decision Tree

    Start Selection
    |
    ├─ Need large document processing? (>200K tokens)
    |   ├─ Yes → Gemini Flash (1M context)
    |   └─ No → Continue
    |
    ├─ Need multimedia processing? (Audio/Video)
    |   ├─ Yes → Gemini Flash (Full support)
    |   └─ No → Continue
    |
    ├─ Coding tasks primary?
    |   ├─ Yes → Haiku 4.5 (73.3% vs 54%)
    |   └─ No → Continue
    |
    ├─ Need ultra-low latency? (Real-time interaction)
    |   ├─ Yes → Haiku 4.5 (Ultra-low first token latency)
    |   └─ No → Continue
    |
    ├─ Very tight budget?
    |   ├─ Yes → Gemini Flash (Lowest cost)
    |   └─ No → Continue
    |
    └─ Math/Science reasoning primary?
        ├─ Yes → Gemini Flash (78%+)
        └─ No → Haiku 4.5 (Default recommendation)
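The decision tree translates directly into code. The sketch below checks the questions in the same order as the tree; the `Requirements` fields and the `pick_model` name are our own, and the 200K threshold is the Haiku 4.5 context window quoted in this article.

```python
from dataclasses import dataclass

GEMINI = "gemini-2.5-flash"
HAIKU = "claude-haiku-4-5-20251001"


@dataclass
class Requirements:
    """Answers to the decision-tree questions (hypothetical field names)."""
    input_tokens: int = 0
    needs_audio_or_video: bool = False
    coding_primary: bool = False
    needs_low_latency: bool = False
    tight_budget: bool = False
    math_science_primary: bool = False


def pick_model(req: Requirements) -> str:
    """Walk the tree top to bottom; the first matching branch wins."""
    if req.input_tokens > 200_000:   # exceeds Haiku 4.5's context window
        return GEMINI
    if req.needs_audio_or_video:     # audio/video input
        return GEMINI
    if req.coding_primary:           # 73.3% vs 54% on SWE-bench Verified
        return HAIKU
    if req.needs_low_latency:        # real-time interaction
        return HAIKU
    if req.tight_budget:             # lowest list price
        return GEMINI
    if req.math_science_primary:     # 78%+ on AIME 2025 / GPQA Diamond
        return GEMINI
    return HAIKU                     # default recommendation


print(pick_model(Requirements(coding_primary=True, tight_budget=True)))
```

Because the order of the checks encodes priority, a coding project on a tight budget still resolves to Haiku 4.5; reorder the branches if cost matters more to you than coding quality.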

❓ Frequently Asked Questions: Gemini 2.5 Flash vs Claude Haiku 4.5

Q1: Which is faster, Gemini Flash or Haiku 4.5?

Both are extremely fast small models, but with different speed characteristics:

Gemini 2.5 Flash:

- Output Speed: 887 tokens/second (official data)

- Advantage: Fast sustained output speed

- Improvement: 40% faster than previous version

- Positioning: "Fastest proprietary model"

Claude Haiku 4.5:

- Output Speed: Specific numbers not disclosed, but officially emphasizes "4-5x faster than Sonnet 4.5"

- Advantage: Ultra-low first token latency

- Characteristic: More immediate response, better user perception

- Positioning: "Fastest near-frontier intelligent model"

Actual Experience Differences:

- Gemini Flash: Faster sustained output, suitable for long text generation

- Haiku 4.5: Faster startup, suitable for real-time interaction

Conclusion: Choose Haiku for real-time interaction, Gemini Flash for long text generation.
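Why first-token latency dominates short replies while throughput dominates long ones can be shown with a simple model: total time is the startup delay plus the output length divided by the streaming speed. In the sketch below only the 887 tokens/second figure comes from this article. The other numbers are hypothetical values chosen to illustrate the trade-off and are not measurements of either model.

```python
def response_time(output_tokens: int, first_token_latency_s: float,
                  tokens_per_second: float) -> float:
    """Seconds until the last token arrives: startup delay plus streaming time."""
    return first_token_latency_s + output_tokens / tokens_per_second


# Hypothetical profiles for illustration only (not vendor measurements).
low_latency = {"first_token_latency_s": 0.2, "tokens_per_second": 200.0}
high_throughput = {"first_token_latency_s": 0.6, "tokens_per_second": 887.0}

for tokens in (50, 2_000):
    a = response_time(tokens, **low_latency)
    b = response_time(tokens, **high_throughput)
    print(f"{tokens:>5} tokens: low-latency {a:.2f}s, high-throughput {b:.2f}s")
```

With these assumed numbers the low-latency profile finishes a 50-token chat reply first, while the high-throughput profile finishes a 2,000-token document far sooner. Measure both values on your own traffic before deciding.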

Q2: How much stronger is Haiku 4.5 than Gemini Flash in coding scenarios?

In SWE-bench Verified benchmark tests, Haiku 4.5 significantly leads:

Performance Data:

- Haiku 4.5: 73.3%

- Gemini Flash: 54%

- Gap: 19.3 percentage points, 35% ahead

Actual Impact:

- When solving real GitHub problems, Haiku 4.5 has significantly higher success rate

- For complex programming tasks, Haiku 4.5 has better quality

- Gemini Flash suitable for simple code completion, Haiku 4.5 suitable for complex refactoring

Why such a large gap:

- Haiku 4.5 reaches previous flagship Sonnet 4 level

- Gemini Flash, while improving (48.9% → 54%), still has basic performance gap

Recommendation: Strongly recommend Haiku 4.5 for coding scenarios, with obvious performance advantages.

Q3: How much cheaper is Gemini Flash in cost? What about after enabling optimization?

Base Pricing Comparison:

- Gemini Flash: $0.30/$2.50 (input/output)

- Haiku 4.5: $1.00/$5.00 (input/output)

- Gap: Gemini input 70% cheaper, output 50% cheaper

Actual Cost (Chat system, 10K/day):

- Gemini Flash: $465/month

- Haiku 4.5 (No optimization): $1,050/month (126% more expensive)

- Haiku 4.5 (Prompt Caching): $780/month (68% more expensive)

- Haiku 4.5 (Caching + Batches): ~$390/month in the best case (about 16% cheaper, but Batches only apply to non-real-time traffic)

Conclusion:

- No optimization scenarios: Gemini Flash obviously cheaper

- Dual optimization enabled: Haiku 4.5 can approach or even be lower than Gemini Flash

- Selection Strategy: If optimization features can be used, Haiku 4.5 has better cost-performance; otherwise choose Gemini Flash

Q4: Which model is easier to use in mainland China?

Gemini 2.5 Flash:

- ❌ Google services restricted in China

- ❌ Requires VPN to access official API

- ✅ Can be used through third-party proxy platforms

Claude Haiku 4.5:

- ✅ Widespread third-party proxy platform support

- ✅ Fully supported by aggregation platforms like API易

- ✅ Usable without VPN

Recommended Solution:

- For developers in mainland China, we recommend using both models through API易 apiyi.com

- The platform supports both Gemini and Claude series, providing unified interface

- No VPN required, fast access, good stability

- Provides comprehensive technical support and monitoring tools

Conclusion: Using both models through API易 is most convenient.

Q5: How to dynamically switch between Gemini Flash and Haiku 4.5?

Method 1: Task Type-based Routing

    import openai

    # OpenAI-compatible client pointed at the aggregation endpoint
    client = openai.OpenAI(
        api_key="YOUR_API_KEY",
        base_url="https://vip.apiyi.com/v1"
    )

    def choose_model(task_type):
        """Choose model based on task type"""
        if task_type in ["coding", "automation", "chat"]:
            return "claude-haiku-4-5-20251001"  # Use Haiku for coding and real-time scenarios
        elif task_type in ["document", "video", "audio", "math"]:
            return "gemini-2.5-flash"  # Use Gemini for large docs and multimedia
        else:
            return "gemini-2.5-flash"  # Default to cheaper option

    # Use Haiku for coding tasks
    result1 = client.chat.completions.create(
        model=choose_model("coding"),
        messages=[{"role": "user", "content": "Write a quicksort algorithm"}]
    )

    # Placeholder: replace with the text of the report to analyze
    long_document = "..."

    # Use Gemini for document analysis
    result2 = client.chat.completions.create(
        model=choose_model("document"),
        messages=[{"role": "user", "content": "Summarize this report..." + long_document}]
    )

Method 2: Document Length-based Routing

    def choose_model_by_length(input_length):
        """Choose model based on input length (in tokens)"""
        if input_length > 200000:  # Over 200K tokens
            return "gemini-2.5-flash"  # Use Gemini's 1M context
        else:
            return "claude-haiku-4-5-20251001"  # Use better performing Haiku
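Length-based routing needs a token count before the request is sent. If you don't want to call a tokenizer first, a rough estimate is often enough. The sketch below assumes about 4 characters per token, a common rule of thumb for English text that can be far off for code or other languages, and adds a hypothetical 10% safety margin so borderline inputs go to the larger context window.

```python
GEMINI = "gemini-2.5-flash"
HAIKU = "claude-haiku-4-5-20251001"


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Very rough token estimate; real tokenizers will differ."""
    return int(len(text) / chars_per_token) + 1


def route_by_text(text: str, context_limit: int = 200_000,
                  safety_margin: float = 0.9) -> str:
    """Send inputs that may not fit in 200K tokens to the 1M-context model."""
    if estimate_tokens(text) > context_limit * safety_margin:
        return GEMINI
    return HAIKU


print(route_by_text("Summarize this short memo."))
print(route_by_text("x" * 1_000_000))  # roughly 250K estimated tokens
```

For production use, replace `estimate_tokens` with the provider's own token-counting method so the routing decision matches what will actually be billed.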

Recommended Strategy: We recommend model switching through the API易 apiyi.com platform. The platform provides unified interface and real-time cost monitoring, enabling convenient dynamic model routing strategies while tracking performance and cost of different models.

🎯 Summary

Gemini 2.5 Flash and Claude Haiku 4.5 are the two most noteworthy small high-performance models in 2025, each with their strengths.

Core Difference Summary:

- Coding Performance: Haiku 4.5 significantly ahead (73.3% vs 54%, a 19.3-point lead, about 35% relative)

- Context: Gemini Flash 5x advantage (1M vs 200K)

- Cost: Gemini Flash lower base price, Haiku can approach after optimization

- Multimodal: Gemini Flash full audio/video support, Haiku only text/image

- Output Capability: Haiku 4.5 8x advantage (64K vs 8K)

- Latency: Haiku 4.5 lower first token latency

- Ecosystem: Google vs Anthropic, each has advantages

Selection Recommendations:

- Coding/Automation/Real-time Interaction: Haiku 4.5 (obvious performance and latency advantages)

- Large Documents/Multimedia/Math Research: Gemini Flash (1M context and multimodal)

- Ultimate Cost Optimization: Gemini Flash (lowest base price)

- Enterprise Optimization: Haiku 4.5 (Caching + Batches)

Best Strategy: Dynamic selection based on task type, mixed usage can achieve optimal balance of performance and cost.

In practical applications, we recommend:

- First clarify main task types and requirements

- Compare both models through actual testing

- Enable Haiku 4.5's optimization features to reduce costs

- Use unified API platform for easy switching

Final Recommendation: For enterprise applications, we strongly recommend using professional API aggregation platforms like API易 apiyi.com. It not only provides unified interface for Gemini and Claude series models, but also supports rapid model switching, real-time cost monitoring, and comprehensive technical support, helping you flexibly choose between different models to achieve optimal balance of performance and cost.

📝 Author Bio: Senior AI application developer, focused on large model API integration and architecture design. Regularly shares AI development practical experience, more technical materials and best practice cases available at API易 apiyi.com technical community.

🔔 Technical Exchange: Welcome to discuss Gemini and Claude model selection issues in comments, continuously sharing AI development experience and industry trends. For in-depth technical support, contact our technical team through API易 apiyi.com.